Structured Gradient-based Interpretations via Norm-Regularized Adversarial Training

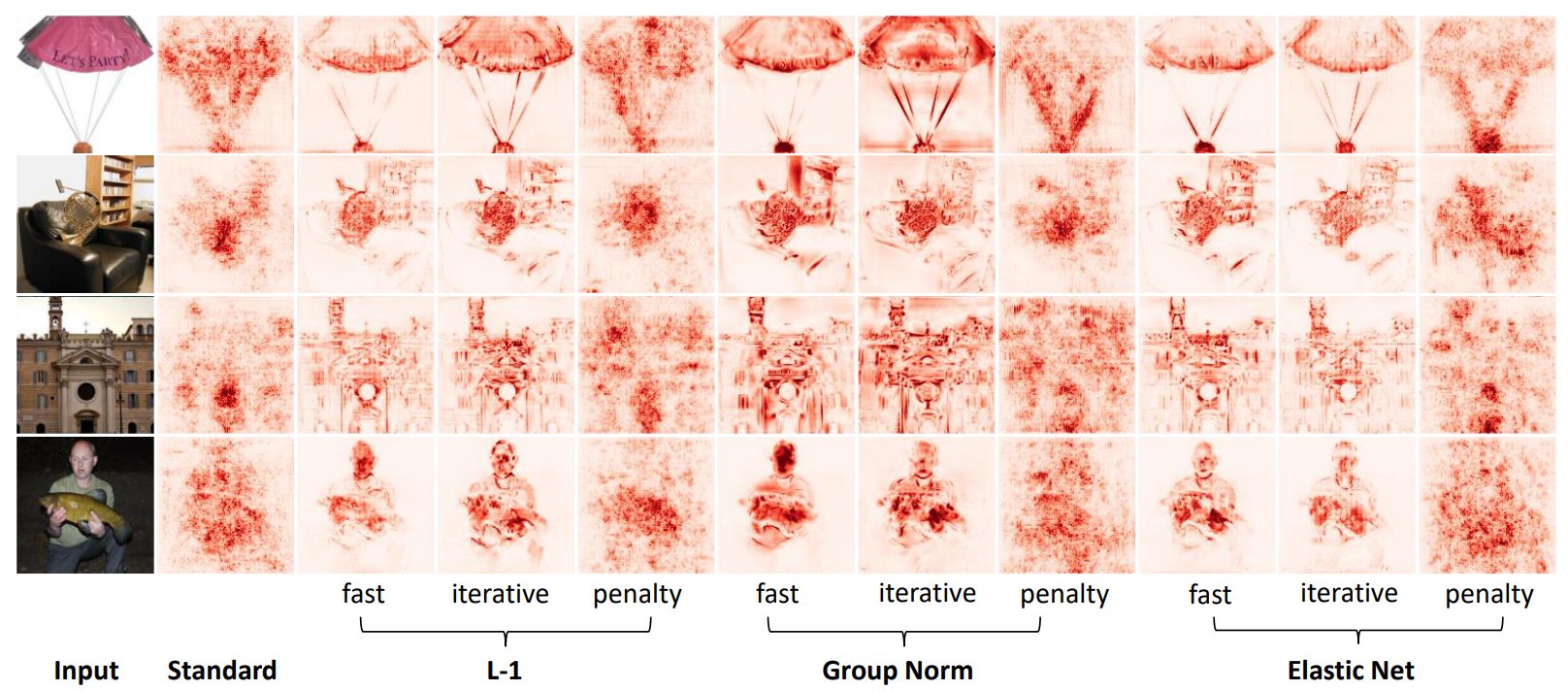

Gradient-based saliency maps have been widely used to explain the decisions of deep neural network classifiers. However, standard gradient-based interpretation maps, including the simple gradient and integrated gradient algorithms, often lack desired structures such as sparsity and connectedness when applied to real-world computer vision models. A frequently used approach to inducing sparsity in gradient-based saliency maps is to modify the simple gradient scheme using sparsification or norm-based regularization. A drawback of such post-processing methods is their frequently observed significant loss of fidelity to the original simple gradient map. In this work, we propose to apply adversarial training as an in-processing scheme to train neural networks with structured simple gradient maps. We show a duality relation between the regularized norms of the adversarial perturbations and gradient-based maps, based on which we design adversarial training loss functions that promote sparsity and group-sparsity in simple gradient maps. We present several numerical results showing the influence of our proposed norm-based adversarial training methods on the gradient-based maps of standard neural network architectures on benchmark image datasets.
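The duality mentioned above can be illustrated with the standard dual-norm identity for a linearized loss. The sketch below is not the paper's formulation or its loss functions. It only checks numerically that the worst-case first-order loss increase under an L-infinity-bounded perturbation equals the L1 norm of the gradient (scaled by the budget), and that bounding each group's L2 norm yields the group-sparsity norm. The gradient vector, the grouping, the budget `eps`, and all function names are hypothetical stand-ins chosen for illustration.

```python
import math
import random


def linearized_gain(grad, delta):
    """First-order change in the loss for perturbation delta: <grad, delta>."""
    return sum(g * d for g, d in zip(grad, delta))


def worst_case_linf(grad, eps):
    """Maximizer of <grad, delta> subject to ||delta||_inf <= eps."""
    return [eps * ((g > 0) - (g < 0)) for g in grad]


def worst_case_group(grad, groups, eps):
    """Maximizer of <grad, delta> when every group's L2 norm is at most eps."""
    delta = [0.0] * len(grad)
    for idx in groups:
        norm = math.sqrt(sum(grad[i] ** 2 for i in idx))
        if norm > 0:
            for i in idx:
                delta[i] = eps * grad[i] / norm
    return delta


def l1_norm(grad):
    """Sparsity-promoting norm: sum of absolute values."""
    return sum(abs(g) for g in grad)


def group_norm(grad, groups):
    """Group-sparsity norm: sum over groups of each group's L2 norm."""
    return sum(math.sqrt(sum(grad[i] ** 2 for i in idx)) for idx in groups)


random.seed(0)
# Assumption: a random vector stands in for a simple gradient map.
grad = [random.gauss(0.0, 1.0) for _ in range(8)]
# Assumption: two hypothetical pixel groups of four entries each.
groups = [[0, 1, 2, 3], [4, 5, 6, 7]]
eps = 0.1

gain_linf = linearized_gain(grad, worst_case_linf(grad, eps))
gain_group = linearized_gain(grad, worst_case_group(grad, groups, eps))

print(abs(gain_linf - eps * l1_norm(grad)) < 1e-12)
print(abs(gain_group - eps * group_norm(grad, groups)) < 1e-12)
```

Under this linearization, training against the worst-case perturbation penalizes the corresponding dual norm of the input gradient, which is the intuition for why the choice of perturbation norm shapes the structure of the resulting saliency map.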


CUB-GHA
