Towards Privacy-Supporting Fall Detection via Deep Unsupervised RGB2Depth Adaptation

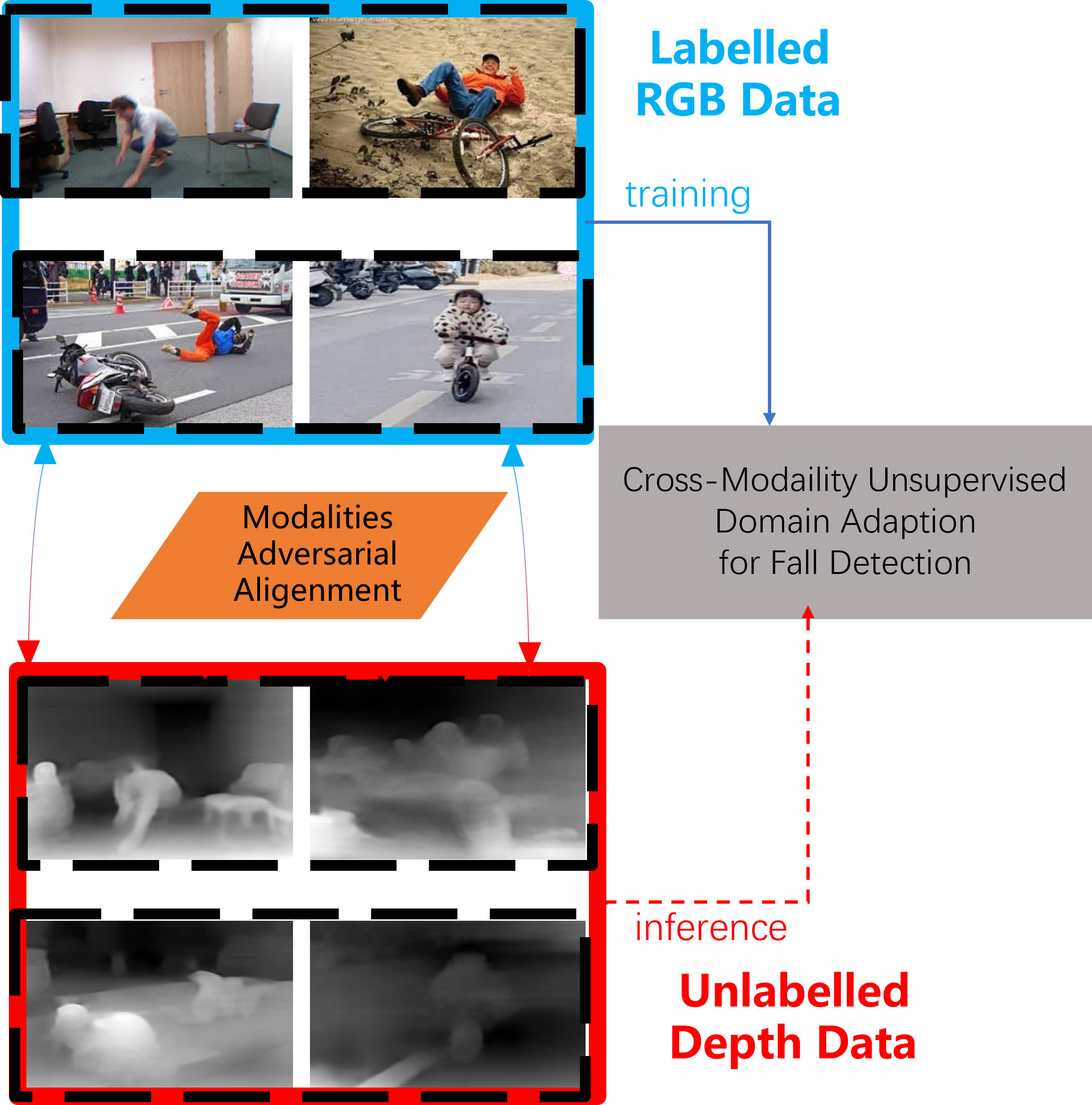

Fall detection is a vital task in health monitoring: it allows the system to trigger an alert and thereby enables faster intervention when a person falls. Although most previous approaches rely on standard RGB video data, such detailed appearance-aware monitoring poses significant privacy concerns. Depth sensors, on the other hand, preserve privacy better because they capture only the distance of objects from the sensor or camera, omitting color and texture information. In this paper, we introduce a privacy-supporting solution that makes an RGB-trained model applicable in the depth domain and uses depth data at test time for fall detection. To achieve cross-modal fall detection, we present an unsupervised RGB-to-Depth (RGB2Depth) cross-modal domain adaptation approach that leverages labeled RGB data and unlabeled depth data during training. Our proposed pipeline incorporates an intermediate domain module for feature bridging, a modality adversarial loss for modality discrimination, a classification loss for pseudo-labeled depth data and labeled source data, a triplet loss that considers both source and target domains, and a novel adaptive loss weight adjustment method for improved coordination among the various losses. Our approach achieves state-of-the-art results in the unsupervised RGB2Depth domain adaptation task for fall detection. Code is available at https://github.com/1015206533/privacy_supporting_fall_detection.
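The abstract names several losses that are combined during training but does not give their formulas. As a rough illustration only, the sketch below shows a standard margin-based triplet loss over feature vectors and a weighted sum of named loss terms. Everything here is an assumption for illustration: the function names, the margin value, and in particular `inverse_magnitude_weights`, which is a generic placeholder and not the paper's adaptive loss weight adjustment method.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: max(0, d(a, p) - d(a, n) + margin).

    In a cross-modal setting the anchor could be an RGB feature and the
    positive/negative could be depth features (assumption, not from the source).
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def inverse_magnitude_weights(losses, eps=1e-8):
    """Placeholder weighting rule (NOT the paper's method).

    Weights each loss inversely to its current magnitude and normalizes
    the weights to sum to 1, so no single term dominates the total.
    """
    inverse = {name: 1.0 / (value + eps) for name, value in losses.items()}
    total = sum(inverse.values())
    return {name: w / total for name, w in inverse.items()}


def combine_losses(losses, weights):
    """Weighted sum of named loss terms."""
    return sum(weights[name] * value for name, value in losses.items())


# Hypothetical per-batch loss values for the three loss types named in the abstract.
batch_losses = {
    "classification": 0.8,
    "adversarial": 0.4,
    "triplet": triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 0.0], margin=1.0),
}
weights = inverse_magnitude_weights(batch_losses)
total_loss = combine_losses(batch_losses, weights)
```

In a real training loop these terms would be computed with an automatic-differentiation framework on network outputs; the plain-Python version is meant only to make the structure of the combined objective concrete. The released repository is the authoritative reference for the actual loss definitions and weighting scheme.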

Kinetics

NTU RGB+D

Kinetics-700