TR-MISR: Multiimage Super-Resolution Based on Feature Fusion With Transformers

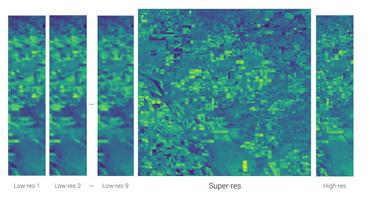

Multiimage super-resolution (MISR), one of the most promising directions in remote sensing, has become a much-needed technique in the satellite market. A sequence of images collected by satellites often has many views and a long time span, so integrating multiple low-resolution views into a detailed high-resolution image is a challenging problem. However, most MISR methods based on deep learning cannot make full use of multiple images, and their fusion modules do not adapt well to an image sequence with weak temporal correlations. To cope with these problems, we propose a novel end-to-end framework called TR-MISR. It consists of three parts: an encoder based on residual blocks, a transformer-based fusion module, and a decoder based on subpixel convolution. Specifically, by rearranging multiple feature maps into vectors, the fusion module can assign dynamic attention to the same area of different satellite images simultaneously. In addition, TR-MISR adopts an additional learnable embedding vector that fuses these vectors to restore the details to the greatest extent. TR-MISR successfully applies the transformer to MISR tasks for the first time, notably reducing the difficulty of training the transformer by ignoring the spatial relations of image patches. Extensive experiments performed on the PROBA-V Kelvin dataset demonstrate the superiority of the proposed model, which provides an effective method for applying transformers to other low-level vision tasks.
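The fusion step described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes a single attention head, one layer, random weights in place of trained ones, and an upscaling factor of 3, and the names `fuse_views` and `pixel_shuffle` are hypothetical. It shows only how per-pixel vectors from K views, plus one extra embedding vector, can be fused by attention and then rearranged by a subpixel (pixel-shuffle) step.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def fuse_views(features, embed, w_q, w_k, w_v):
    """Fuse K feature maps of shape (K, C, H, W) into one (C, H, W) map.

    Assumption: one single-head self-attention layer, no positional encoding.
    """
    K, C, H, W = features.shape
    # Rearrange: every pixel location becomes a sequence of K vectors of size C.
    tokens = features.transpose(2, 3, 0, 1).reshape(H * W, K, C)
    # Prepend the extra embedding vector to every sequence.
    extra = np.broadcast_to(embed, (H * W, 1, C))
    seq = np.concatenate([extra, tokens], axis=1)        # (H*W, K+1, C)
    q, k, v = seq @ w_q, seq @ w_k, seq @ w_v
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(C)       # (H*W, K+1, K+1)
    attn = softmax(scores, axis=-1)
    out = attn @ v                                       # (H*W, K+1, C)
    fused = out[:, 0, :]                                 # output at the embedding token
    weights = attn[:, 0, :].reshape(H, W, K + 1)         # attention paid by that token
    return fused.reshape(H, W, C).transpose(2, 0, 1), weights

def pixel_shuffle(x, r):
    """Rearrange (C*r*r, H, W) into (C, H*r, W*r), as in subpixel convolution."""
    c_rr, H, W = x.shape
    C = c_rr // (r * r)
    x = x.reshape(C, r, r, H, W).transpose(0, 3, 1, 4, 2)  # (C, H, r, W, r)
    return x.reshape(C, H * r, W * r)

# Toy example: K=5 views, C=9 channels, 4x4 feature maps, upscaling factor 3 (assumed).
rng = np.random.default_rng(0)
K, C, H, W, r = 5, 9, 4, 4, 3
features = rng.standard_normal((K, C, H, W))
embed = rng.standard_normal(C)
w_q, w_k, w_v = (rng.standard_normal((C, C)) * 0.1 for _ in range(3))

fused, weights = fuse_views(features, embed, w_q, w_k, w_v)
high_res = pixel_shuffle(fused, r)                       # (1, 12, 12)

# Reordering the views leaves the fused result unchanged.
perm = rng.permutation(K)
fused_perm, _ = fuse_views(features[perm], embed, w_q, w_k, w_v)
```

Because the sketch uses no positional encoding, the fused output does not depend on the order of the views, which fits an image sequence with weak temporal correlations. In a real model the convolution preceding `pixel_shuffle` would be learned; here the fused map is shuffled directly only to show the shape change.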


Datasets

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Multi-Frame Super-Resolution | PROBA-V | TR-MISR | Normalized cPSNR | 0.9300466898779116 | #1 |

PROBA-V
