Variable Length Embeddings

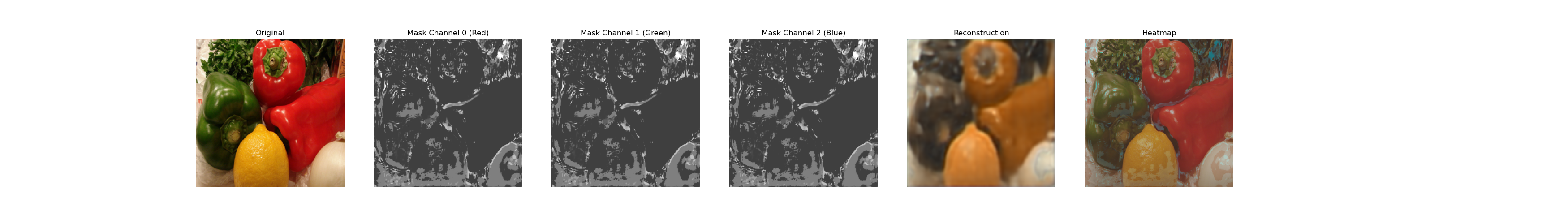

In this work, we introduce a novel deep learning architecture, Variable Length Embeddings (VLEs), an autoregressive model that can produce a latent representation composed of an arbitrary number of tokens. As a proof of concept, we demonstrate the capabilities of VLEs on reconstruction and image decomposition tasks. We evaluate on a mix of the iNaturalist and ImageNet datasets and find that VLEs achieve reconstruction results comparable to a state-of-the-art VAE while using less than a tenth of the parameters.
