Weakly-Supervised Spatio-Temporally Grounding Natural Sentence in Video

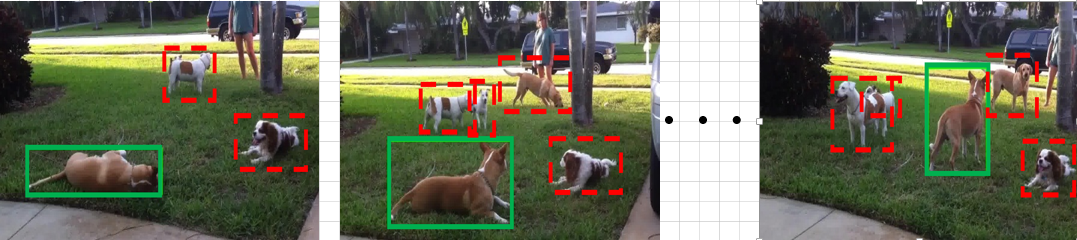

In this paper, we address a novel task, namely weakly-supervised spatio-temporally grounding natural sentence in video. Specifically, given a natural sentence and a video, we localize a spatio-temporal tube in the video that semantically corresponds to the given sentence, without relying on any spatio-temporal annotations during training. First, a set of spatio-temporal tubes, referred to as instances, is extracted from the video. We then encode these instances and the sentence using our proposed attentive interactor, which exploits their fine-grained relationships to characterize their matching behaviors. In addition to a ranking loss, a novel diversity loss is introduced to train the attentive interactor, strengthening the matching behaviors of reliable instance-sentence pairs and penalizing those of unreliable ones. We also contribute a dataset, called VID-sentence, based on the ImageNet video object detection dataset, to serve as a benchmark for our task. Extensive experimental results demonstrate the superiority of our model over the baseline approaches.
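To make the training objective more concrete, the following is a minimal sketch of how a ranking loss and a diversity loss over instance-sentence matching scores could be combined. It is one plausible reading of the abstract, not the formulation used in the paper. The assumptions are: the video-level score is the maximum instance score, the ranking loss is a margin-based hinge between a matched and a mismatched video-sentence pair, and the diversity loss is the entropy of the softmax-normalized instance scores. All function names, the `margin` value, and the `diversity_weight` parameter are hypothetical.

```python
import math


def softmax(scores):
    """Normalize raw matching scores into a probability distribution."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def video_sentence_score(instance_scores):
    # Assumption: the video-level score is the best instance score
    # (a multiple-instance-learning style aggregation).
    return max(instance_scores)


def ranking_loss(pos_scores, neg_scores, margin=1.0):
    # Assumption: hinge loss that pushes the matched video-sentence pair
    # above a mismatched pair by at least `margin`.
    pos = video_sentence_score(pos_scores)
    neg = video_sentence_score(neg_scores)
    return max(0.0, margin - pos + neg)


def diversity_loss(instance_scores):
    # Assumption: entropy of the normalized instance scores. Minimizing it
    # concentrates the matching mass on a few instances, which strengthens
    # reliable pairs and suppresses unreliable ones.
    probs = softmax(instance_scores)
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def total_loss(pos_scores, neg_scores, margin=1.0, diversity_weight=1.0):
    # Hypothetical weighted sum of the two terms.
    return (ranking_loss(pos_scores, neg_scores, margin)
            + diversity_weight * diversity_loss(pos_scores))


if __name__ == "__main__":
    matched = [2.0, 0.5, 0.1]     # scores of instances vs. the paired sentence
    mismatched = [0.3, 0.1, 0.2]  # scores of instances vs. an unpaired sentence
    print(total_loss(matched, mismatched))
```

Under these assumptions, a uniform score vector yields the largest diversity loss, while a vector dominated by a single instance yields a value close to zero.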

ACL 2019