When Does Self-supervision Improve Few-shot Learning?

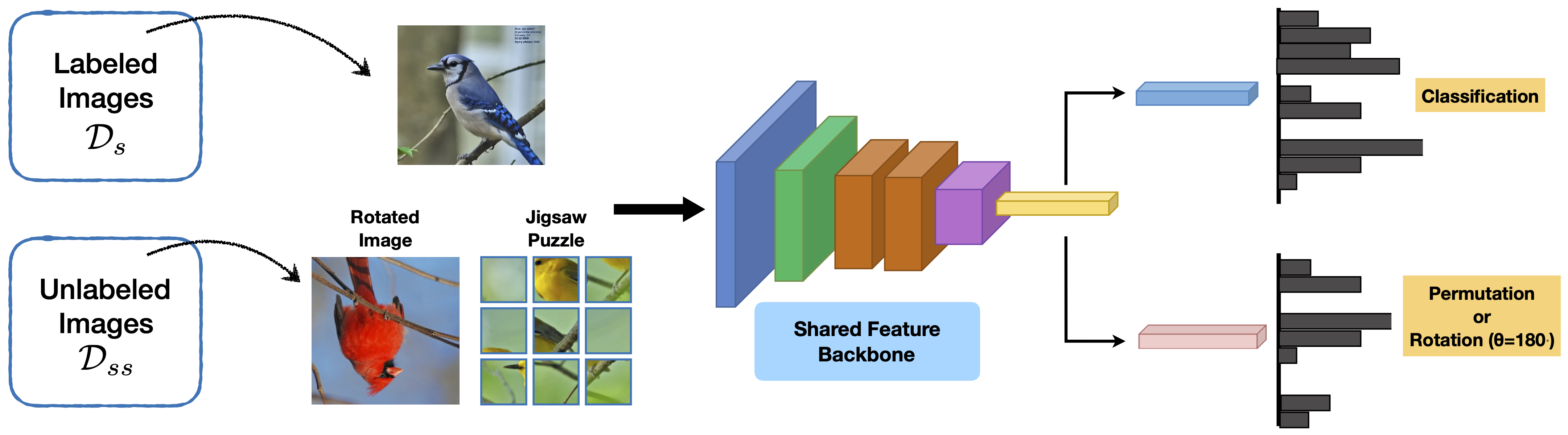

We investigate the role of self-supervised learning (SSL) in few-shot learning. Although recent research has shown the benefits of SSL on large unlabeled datasets, its utility on small datasets is relatively unexplored. We find that SSL reduces the relative error rate of few-shot meta-learners by 4%-27%, even when the datasets are small and SSL uses only the images within those datasets. The improvements are greater when the training set is smaller or the task is more challenging. Although the benefits of SSL may increase with larger training sets, we observe that SSL can hurt performance when the images used for meta-learning and for SSL come from different distributions. We conduct a systematic study by varying the degree of domain shift and analyzing the performance of several meta-learners on a multitude of domains. Based on this analysis, we present a technique that, for a given dataset, automatically selects images for SSL from a large, generic pool of unlabeled images and provides further improvements.
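The 4%-27% figure is a relative reduction in error rate, not an absolute gain in accuracy. As a minimal sketch of how such a number is commonly computed from two accuracies (the function name and the example accuracies are hypothetical and are not taken from the paper):

```python
def relative_error_reduction(baseline_acc: float, new_acc: float) -> float:
    """Return the fraction by which the error rate shrinks.

    Both arguments are accuracies in [0, 1]. The error rate is taken
    to be 1 - accuracy, which is an assumption of this sketch.
    """
    baseline_err = 1.0 - baseline_acc
    new_err = 1.0 - new_acc
    if baseline_err <= 0.0:
        raise ValueError("baseline error must be positive")
    return (baseline_err - new_err) / baseline_err


# Hypothetical accuracies for illustration only, not results from the paper:
# a meta-learner at 80% accuracy that reaches 85% with an added SSL loss.
reduction = relative_error_reduction(0.80, 0.85)
print(f"relative error reduction: {reduction:.1%}")
```

Under this definition, a 5-point accuracy gain from an 80% baseline removes a quarter of the errors, whereas the same 5-point gain from a 50% baseline would remove only a tenth of them.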

ECCV 2020

tieredImageNet
