Code Completion

66 papers with code • 4 benchmarks • 9 datasets

Libraries

Use these libraries to find Code Completion models and implementations

Most implemented papers

Code Completion with Neural Attention and Pointer Networks

Intelligent code completion has become an essential research task to accelerate modern software development.

Eclipse CDT code analysis and unit testing

The CDT is a plugin that enables development of C/C++ applications in Eclipse.

CodeGRU: Context-aware Deep Learning with Gated Recurrent Unit for Source Code Modeling

We evaluate CodeGRU on a real-world data set, and the results show that CodeGRU outperforms state-of-the-art language models and helps reduce the vocabulary size by up to 24.93%.

Pythia: AI-assisted Code Completion System

In this paper, we propose a novel end-to-end approach for AI-assisted code completion called Pythia.

Adversarial Robustness for Code

Machine learning, and deep learning in particular, has recently been used to successfully address many tasks in the domain of code, such as finding and fixing bugs, code completion, decompilation, type inference, and many others.

LambdaNet: Probabilistic Type Inference using Graph Neural Networks

Given this program abstraction, we then use a graph neural network to propagate information between related type variables and eventually make type predictions.
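
The propagation step described above can be illustrated with a toy sketch. This is not LambdaNet's architecture: it assumes a hand-written graph of three type variables, a made-up two-type vocabulary, and plain unweighted averaging in place of learned message functions. The names `propagate`, `TYPES`, `scores`, and `edges` are hypothetical.

```python
def propagate(scores, edges, rounds=1):
    """Average each node's score vector with those of its neighbours.

    Assumption: edges are undirected and every node carries a score
    vector of the same length (one entry per candidate type).
    """
    neighbours = {node: [] for node in scores}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    for _ in range(rounds):
        updated = {}
        for node, vec in scores.items():
            group = [vec] + [scores[n] for n in neighbours[node]]
            # Column-wise mean over the node itself and its neighbours.
            updated[node] = [sum(col) / len(group) for col in zip(*group)]
        scores = updated
    return scores


# Hypothetical type vocabulary and initial beliefs per type variable.
TYPES = ["number", "string"]
scores = {"x": [1.0, 0.0], "y": [0.5, 0.5], "z": [0.5, 0.5]}
# x is related to y, and y to z (for example through assignments).
edges = [("x", "y"), ("y", "z")]

result = propagate(scores, edges, rounds=2)
# Predict the highest-scoring type for every variable.
predictions = {
    var: TYPES[max(range(len(TYPES)), key=vec.__getitem__)]
    for var, vec in result.items()
}
print(predictions)
```

After two rounds, the evidence attached to `x` has reached `z` through `y`, so all three variables lean toward `number`. A real graph neural network would replace the averaging with learned, edge-type-specific updates.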

GraphCodeBERT: Pre-training Code Representations with Data Flow

Instead of taking a syntactic-level structure of code such as the abstract syntax tree (AST), we use data flow in the pre-training stage, which is a semantic-level structure of code that encodes the "where-the-value-comes-from" relation between variables.
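
To make the "where-the-value-comes-from" relation concrete, here is a minimal sketch using Python's standard `ast` module. It is not the extraction pipeline used by GraphCodeBERT: the helper `value_flow_edges` is hypothetical, handles only straight-line `name = expr` statements, and treats every name read on the right-hand side (including called function names) as a value source.

```python
import ast


def value_flow_edges(source):
    """Return (target, source_variable) pairs for simple assignments.

    Assumption: only top-level `name = expr` statements are considered;
    control flow, attributes, and tuple unpacking are ignored.
    """
    edges = []
    for stmt in ast.parse(source).body:
        if isinstance(stmt, ast.Assign):
            # Names read on the right-hand side are where the value comes from.
            reads = sorted(
                {n.id for n in ast.walk(stmt.value) if isinstance(n, ast.Name)}
            )
            for target in stmt.targets:
                if isinstance(target, ast.Name):
                    edges.extend((target.id, read) for read in reads)
    return edges


edges = value_flow_edges("a = 1\nb = a + 2\nc = a * b\n")
print(edges)  # [('b', 'a'), ('c', 'a'), ('c', 'b')]
```

Each pair reads as "the value of the first variable comes from the second". Unlike an AST, these edges stay the same if the right-hand side is rewritten in a syntactically different but equivalent form that reads the same variables.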

Empirical Study of Transformers for Source Code

In this work, we conduct a thorough empirical study of the capabilities of Transformers to utilize syntactic information in different tasks.

Learning to Execute Programs with Instruction Pointer Attention Graph Neural Networks

More practically, we evaluate these models on the task of learning to execute partial programs, as might arise if using the model as a heuristic function in program synthesis.

On the Embeddings of Variables in Recurrent Neural Networks for Source Code

In this work, we develop dynamic embeddings, a recurrent mechanism that adjusts the learned semantics of the variable when it obtains more information about the variable's role in the program.

CodeXGLUE

CodeXGLUE

PyTorrent

PyTorrent

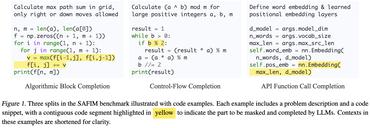

SAFIM

SAFIM