Bidirectional Recurrent Neural Networks


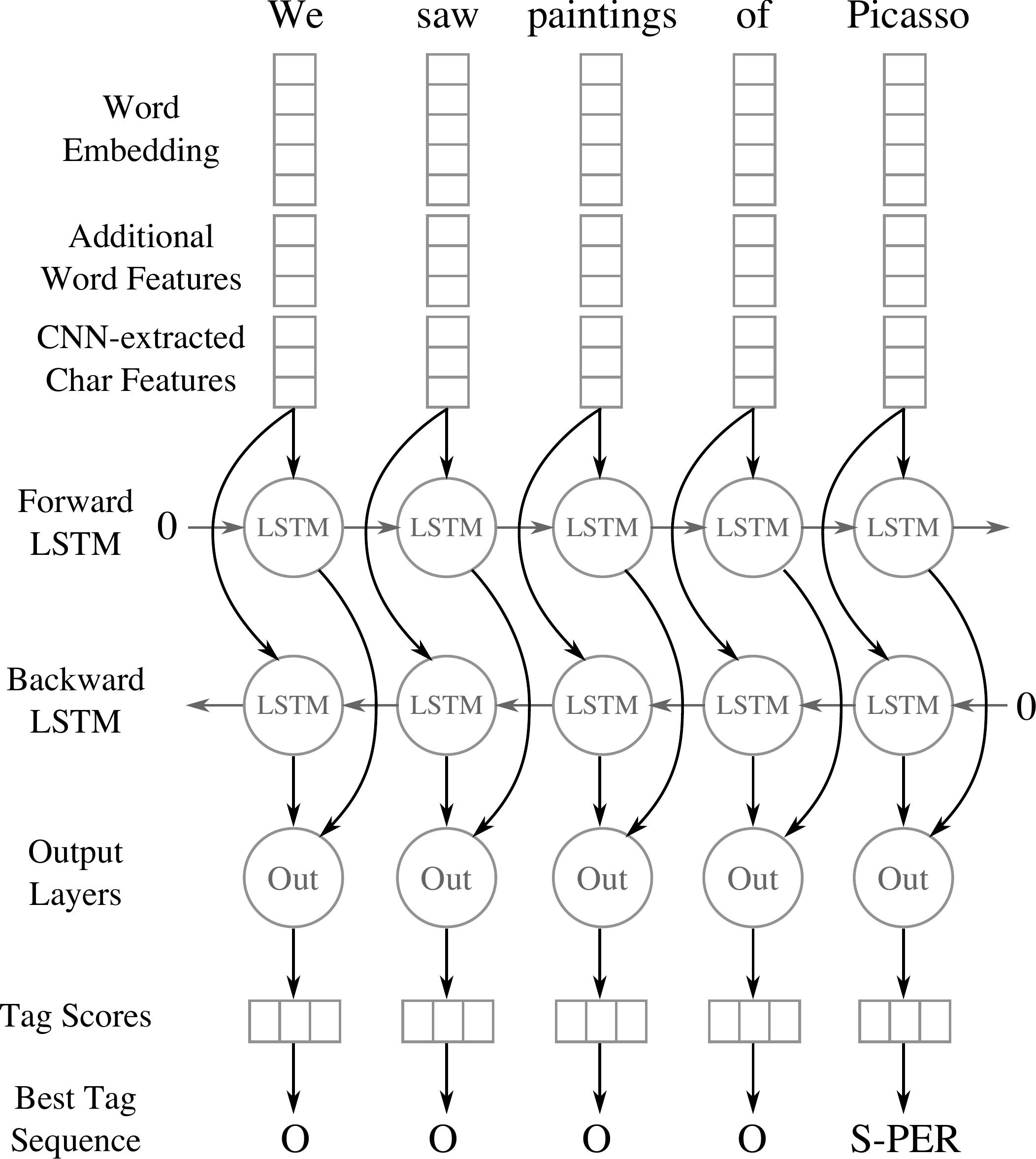

CNN Bidirectional LSTM

Introduced by Chiu et al. in Named Entity Recognition with Bidirectional LSTM-CNNs.

A CNN BiLSTM is a hybrid architecture that combines a bidirectional LSTM with a CNN. In its original formulation for named entity recognition, it learns both character-level and word-level features. The CNN component induces the character-level features. For each word, the model applies a convolution and a max pooling layer to the word's per-character feature vectors, such as character embeddings and (optionally) character type, to extract a new feature vector.
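The character-level step can be illustrated with a minimal pure-Python sketch. It assumes zero-vector padding at word boundaries, a plain linear convolution with no bias or nonlinearity, and a hypothetical function name `char_cnn_features`; none of these details come from the description above. Each filter slides over the word's character vectors, and max pooling keeps the highest response per filter, so the output has one feature per filter regardless of word length.

```python
def char_cnn_features(word, char_emb, filters, width):
    """Sketch of a character CNN: convolution over character vectors + max pooling.

    Assumptions (hypothetical, for illustration only):
      - char_emb maps each character to a list of floats (all the same dim).
      - filters is a list of filters; each filter is a list of `width`
        weight vectors, one per position in the sliding window.
      - Words are padded with zero vectors; no bias or nonlinearity is used.
    Returns a list with one max-pooled feature per filter.
    """
    if not word:
        raise ValueError("word must contain at least one character")
    dim = len(char_emb[word[0]])
    pad = [0.0] * dim  # zero vector used for boundary padding
    half = width // 2
    vectors = [pad] * half + [char_emb[c] for c in word] + [pad] * half

    features = []
    for f in filters:
        if len(f) != width:
            raise ValueError("each filter needs exactly `width` weight vectors")
        best = float("-inf")
        # Slide the window over every valid start position.
        for start in range(len(vectors) - width + 1):
            window = vectors[start:start + width]
            # Convolution response: dot product of filter weights and window.
            score = sum(w_i * x_i
                        for w_vec, x_vec in zip(f, window)
                        for w_i, x_i in zip(w_vec, x_vec))
            best = max(best, score)  # max pooling over positions
        features.append(best)
    return features


if __name__ == "__main__":
    # Toy 1-dimensional character embeddings (hypothetical values).
    char_emb = {"a": [1.0], "b": [2.0], "c": [3.0]}
    filters = [
        [[1.0], [1.0], [1.0]],   # sums the three characters in the window
        [[-1.0], [0.0], [1.0]],  # right character minus left character
    ]
    feats = char_cnn_features("abc", char_emb, filters, width=3)
    print(feats)  # [6.0, 2.0]

    # Assumed: the character features are concatenated with a word embedding
    # to form the per-word input vector for the bidirectional LSTM.
    word_emb = [0.1, 0.2]  # hypothetical word embedding
    print(word_emb + feats)  # [0.1, 0.2, 6.0, 2.0]
```

Because max pooling collapses the position axis, words of different lengths all map to vectors of the same size, which is what lets the character features be combined with fixed-size word-level features.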

Source: Named Entity Recognition with Bidirectional LSTM-CNNs

Tasks

| Task | Papers | Share |
|---|---|---|
| Named Entity Recognition (NER) | 6 | 11.54% |
| Sentence | 5 | 9.62% |
| Dependency Parsing | 2 | 3.85% |
| Feature Engineering | 2 | 3.85% |
| Graph Representation Learning | 1 | 1.92% |
| Link Prediction | 1 | 1.92% |
| Language Modelling | 1 | 1.92% |
| Semantic Textual Similarity | 1 | 1.92% |
| Sentence Embedding | 1 | 1.92% |

BiLSTM

Convolution