Attention

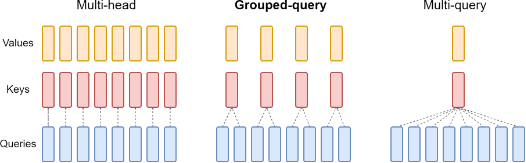

Grouped-query attention

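Introduced by Ainslie et al. in GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints

Grouped-query attention is an interpolation of multi-query and multi-head attention that achieves quality close to multi-head attention at a speed comparable to multi-query attention. It divides the query heads into G groups, and each group shares a single key head and a single value head. GQA with one group is equivalent to multi-query attention, and GQA with as many groups as query heads is equivalent to multi-head attention.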
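The grouping can be illustrated with a minimal pure-Python sketch. It assumes the inputs have already been projected into per-head query, key, and value vectors, and it omits causal masking and the output projection. The function names (`grouped_query_attention` and its helpers) are hypothetical and are not taken from the paper's code. Each query head `h` reads from key/value head `h // group_size`, so only `num_kv_heads` sets of keys and values have to be stored. This is why the key/value cache shrinks by a factor of `num_q_heads / num_kv_heads` compared with multi-head attention.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, keys, values):
    # q: one query vector of length d; keys/values: lists of vectors (one per position).
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output coordinate at a time.
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def grouped_query_attention(queries, keys, values):
    """Hypothetical GQA sketch (no masking, no output projection).

    queries: [num_q_heads][seq_len][d]
    keys, values: [num_kv_heads][seq_len][d]
    Consecutive query heads are split into num_kv_heads groups; every head in a
    group attends with the same key/value head.
    """
    num_q_heads, num_kv_heads = len(queries), len(keys)
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("number of query heads must be divisible by number of key/value heads")
    group_size = num_q_heads // num_kv_heads
    outputs = []
    for h in range(num_q_heads):
        kv = h // group_size  # shared key/value head for this query head
        outputs.append([scaled_dot_product_attention(q, keys[kv], values[kv]) for q in queries[h]])
    return outputs
```

Setting `num_kv_heads` equal to `num_q_heads` recovers multi-head attention, and passing a single key/value head recovers multi-query attention. The sketch loops over heads for readability. A practical implementation would instead batch the computation with tensor operations.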

Source: GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints

Tasks

| Task | Papers | Share |
|---|---|---|
| Language Modelling | 4 | 16.00% |
| Question Answering | 3 | 12.00% |
| Arithmetic Reasoning | 2 | 8.00% |
| Code Generation | 2 | 8.00% |
| Math Word Problem Solving | 2 | 8.00% |
| Multi-task Language Understanding | 2 | 8.00% |
| Large Language Model | 1 | 4.00% |
| Text Classification | 1 | 4.00% |
| Text Generation | 1 | 4.00% |

Components

| Component | Type |
|---|---|
| Feedforward Network | Feedforward Networks |
| Scaled Dot-Product Attention | Attention Mechanisms |
| Softmax | Output Functions |