Loss Functions


Normalized Temperature-scaled Cross Entropy Loss

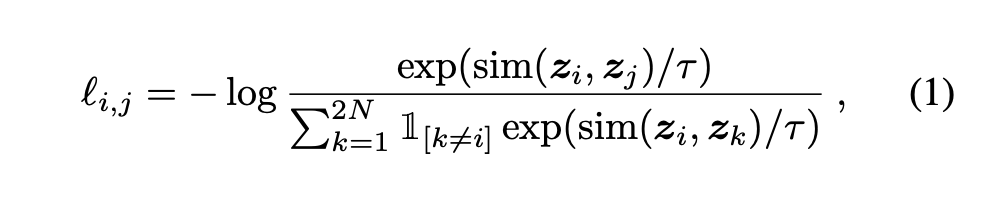

Introduced by Sohn in Improved Deep Metric Learning with Multi-class N-pair Loss Objective.

NT-Xent, or Normalized Temperature-scaled Cross Entropy Loss, is a contrastive loss function. Let $\text{sim}\left(\mathbf{u}, \mathbf{v}\right) = \mathbf{u}^{\top}\mathbf{v}/\left(\lVert\mathbf{u}\rVert \lVert\mathbf{v}\rVert\right)$ denote the cosine similarity between two vectors $\mathbf{u}$ and $\mathbf{v}$. Then the loss function for a positive pair of examples $\left(i, j\right)$ is:

$$ \ell_{i,j} = -\log\frac{\exp\left(\text{sim}\left(\mathbf{z}_{i}, \mathbf{z}_{j}\right)/\tau\right)}{\sum^{2N}_{k=1}\mathbb{1}_{[k\neq i]}\exp\left(\text{sim}\left(\mathbf{z}_{i}, \mathbf{z}_{k}\right)/\tau\right)}$$

where $\mathbf{z}_{k}$ is the embedding of the $k$-th of the $2N$ augmented examples obtained from a mini-batch of $N$ examples (two augmented views per example), $\mathbb{1}_{[k\neq i]} \in \{0, 1\}$ is an indicator function evaluating to $1$ if and only if $k\neq i$, and $\tau$ denotes a temperature parameter. The final loss is the average of $\ell_{i,j}$ over all positive pairs in the mini-batch, counting both $\left(i, j\right)$ and $\left(j, i\right)$.
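The following is a minimal pure-Python sketch of the loss defined above, written for readability rather than speed. It assumes a hypothetical batch layout in which `z[k]` and `z[k + N]` are the two augmented views of example `k`; the helper names `cosine_sim`, `nt_xent_pair`, and `nt_xent` are illustrative and do not come from any library. The batch loss sums $\ell_{i,j}$ and $\ell_{j,i}$ over all $N$ positive pairs and divides by $2N$.

```python
import math
from typing import Sequence


def cosine_sim(u: Sequence[float], v: Sequence[float]) -> float:
    """sim(u, v) = u^T v / (||u|| ||v||)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_pair(z: Sequence[Sequence[float]], i: int, j: int, tau: float) -> float:
    """l_{i,j}: loss for the positive pair (i, j) over all 2N embeddings in z."""
    # Denominator terms: every k != i (the indicator 1[k != i]), including k = j.
    logits = [cosine_sim(z[i], z[k]) / tau for k in range(len(z)) if k != i]
    positive = cosine_sim(z[i], z[j]) / tau
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    return -(positive - log_denominator)


def nt_xent(z: Sequence[Sequence[float]], tau: float = 0.5) -> float:
    """Average of l_{i,j} and l_{j,i} over all N positive pairs.

    Assumed (hypothetical) layout: z[k] and z[k + N] are the two views
    of example k, so len(z) == 2N.
    """
    two_n = len(z)
    if two_n == 0 or two_n % 2 != 0:
        raise ValueError("expected a non-empty batch of 2N embeddings")
    n = two_n // 2
    total = 0.0
    for k in range(n):
        # Both orderings of each positive pair contribute to the loss.
        total += nt_xent_pair(z, k, k + n, tau) + nt_xent_pair(z, k + n, k, tau)
    return total / two_n
```

As a sanity check, when all embeddings are identical every similarity equals 1, so each term reduces to $\log(2N-1)$ regardless of $\tau$; `nt_xent([[1.0, 0.0]] * 4)` therefore returns approximately $\log 3 \approx 1.0986$. Practical implementations usually vectorize this with a $2N \times 2N$ similarity matrix and a masked log-softmax; the max-subtracted log-sum-exp here plays the same numerical-stability role.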

Source: SimCLR

Source: Improved Deep Metric Learning with Multi-class N-pair Loss Objective


Tasks

| Task | Papers | Share |
|---|---|---|
| Self-Supervised Learning | 101 | 27.98% |
| Image Classification | 17 | 4.71% |
| Retrieval | 11 | 3.05% |
| Semantic Segmentation | 9 | 2.49% |
| Classification | 8 | 2.22% |
| Object Detection | 8 | 2.22% |
| General Classification | 8 | 2.22% |
| Clustering | 7 | 1.94% |
| Activity Recognition | 6 | 1.66% |

