Style Transfer Modules

Revision Network

Introduced by Lin et al. in Drafting and Revision: Laplacian Pyramid Network for Fast High-Quality Artistic Style Transfer

Revision Network is a style transfer module that revises a rough stylized image by generating a residual detail image $r_{cs}$; the final stylized image is obtained by combining $r_{cs}$ with the rough stylized image $\bar{x}_{cs}$. This procedure preserves the distribution of global style patterns in $\bar{x}_{cs}$. At the same time, learning to revise local style patterns through a residual detail image is an easier task for the Revision Network than generating the full image.
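The combination step can be written compactly. The form below is a sketch under two assumptions that the text above does not state: that the rough image is first up-sampled to the resolution of the residual, and that the two are combined by addition, as in a Laplacian pyramid reconstruction. The symbols $x_{cs}$ (final stylized image) and $\mathrm{Up}(\cdot)$ (an up-sampling operator) are introduced here for illustration only.

$$
x_{cs} = \mathrm{Up}(\bar{x}_{cs}) + r_{cs}
$$

Under this reading, $\mathrm{Up}(\bar{x}_{cs})$ carries the global style pattern unchanged, and the network only has to predict the correction $r_{cs}$.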

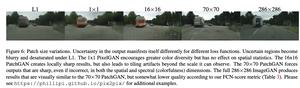

As shown in the figure, the Revision Network is designed as a simple yet effective encoder-decoder architecture with only one down-sampling layer and one up-sampling layer. In addition, a patch discriminator helps the Revision Network capture fine patch textures in an adversarial learning setting. The patch discriminator $D$ is defined following SinGAN: $D$ has 5 convolution layers and 32 hidden channels. A relatively shallow $D$ is chosen to (1) avoid overfitting, since only one style image is available, and (2) limit the receptive field so that $D$ captures only local patterns.
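To make the receptive-field argument concrete, the following minimal sketch computes how large an input patch a stack of convolution layers can see. The layer count of 5 comes from the description above; the 3x3 kernel size and stride of 1 are assumptions chosen for illustration, and `receptive_field` is a hypothetical helper, not part of any published implementation.

```python
def receptive_field(kernel_sizes, strides):
    """Return the receptive field, in input pixels, of a stack of conv layers.

    Each layer widens the field by (kernel - 1) times the product of the
    strides of all earlier layers (the "jump" between adjacent outputs).
    """
    rf, jump = 1, 1
    for kernel, stride in zip(kernel_sizes, strides):
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


NUM_LAYERS = 5   # from the text: D has 5 convolution layers
KERNEL = 3       # assumption: 3x3 kernels
STRIDE = 1       # assumption: no striding inside D

rf = receptive_field([KERNEL] * NUM_LAYERS, [STRIDE] * NUM_LAYERS)
print(f"Each discriminator output sees a {rf}x{rf} input patch")
# prints "Each discriminator output sees a 11x11 input patch"
```

Under these assumptions each output of $D$ judges only a small local patch, which matches the stated goal of capturing local patterns; adding layers or strides would widen the patch and let $D$ respond to more global structure.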

Source: Drafting and Revision: Laplacian Pyramid Network for Fast High-Quality Artistic Style Transfer

PatchGAN
