An Empirical Study of End-to-End Temporal Action Detection

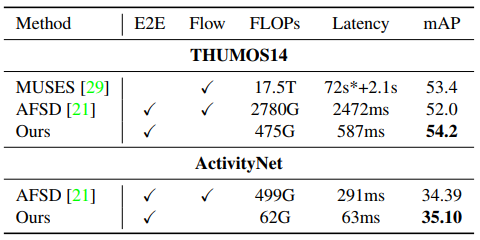

Temporal action detection (TAD) is an important yet challenging task in video understanding. It aims to simultaneously predict the semantic label and the temporal interval of every action instance in an untrimmed video. Rather than learning end to end, most existing methods adopt a head-only learning paradigm, in which the video encoder is pre-trained for action classification and only the detection head on top of the encoder is optimized for TAD. The effect of end-to-end learning has not been systematically evaluated, and an in-depth study of the efficiency-accuracy trade-off in end-to-end TAD is lacking. In this paper, we present an empirical study of end-to-end temporal action detection. We validate the advantage of end-to-end learning over head-only learning and observe up to 11% performance improvement. We also study the effects of multiple design choices that affect TAD performance and speed, including the detection head, the video encoder, and the resolution of input videos. Based on the findings, we build a mid-resolution baseline detector, which achieves the state-of-the-art performance of end-to-end methods while running more than 4× faster. We hope that this paper can serve as a guide for end-to-end learning and inspire future research in this field. Code and models are available at https://github.com/xlliu7/E2E-TAD.
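The difference between the two learning paradigms described above comes down to which parameters are updated during TAD training. The following is a minimal, dependency-free sketch of that distinction, not the authors' implementation: the `ParamGroup` class, the `configure` helper, the module names, and the parameter counts are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class ParamGroup:
    name: str            # hypothetical module name
    num_params: int      # illustrative size, not taken from the paper
    trainable: bool = True


def configure(groups, mode):
    """Mark which parameter groups are optimized for TAD.

    head_only:  the pre-trained video encoder stays frozen and only the
                detection head is optimized.
    end_to_end: the encoder and the detection head are optimized jointly.
    """
    if mode not in ("head_only", "end_to_end"):
        raise ValueError(f"unknown mode: {mode}")
    for group in groups:
        is_encoder = group.name == "video_encoder"
        group.trainable = mode == "end_to_end" or not is_encoder
    return groups


def trainable_names(groups):
    """Return the names of the groups that would receive updates."""
    return [group.name for group in groups if group.trainable]


groups = [
    ParamGroup("video_encoder", num_params=1000),
    ParamGroup("detection_head", num_params=100),
]

head_only = trainable_names(configure(groups, "head_only"))
end_to_end = trainable_names(configure(groups, "end_to_end"))
```

In a real deep-learning framework the same idea is usually expressed by excluding the encoder's parameters from the optimizer or disabling their gradients; the sketch above only models the bookkeeping.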

CVPR 2022

ActivityNet

THUMOS14

HACS
