Detecting Model Misspecification in Amortized Bayesian Inference with Neural Networks

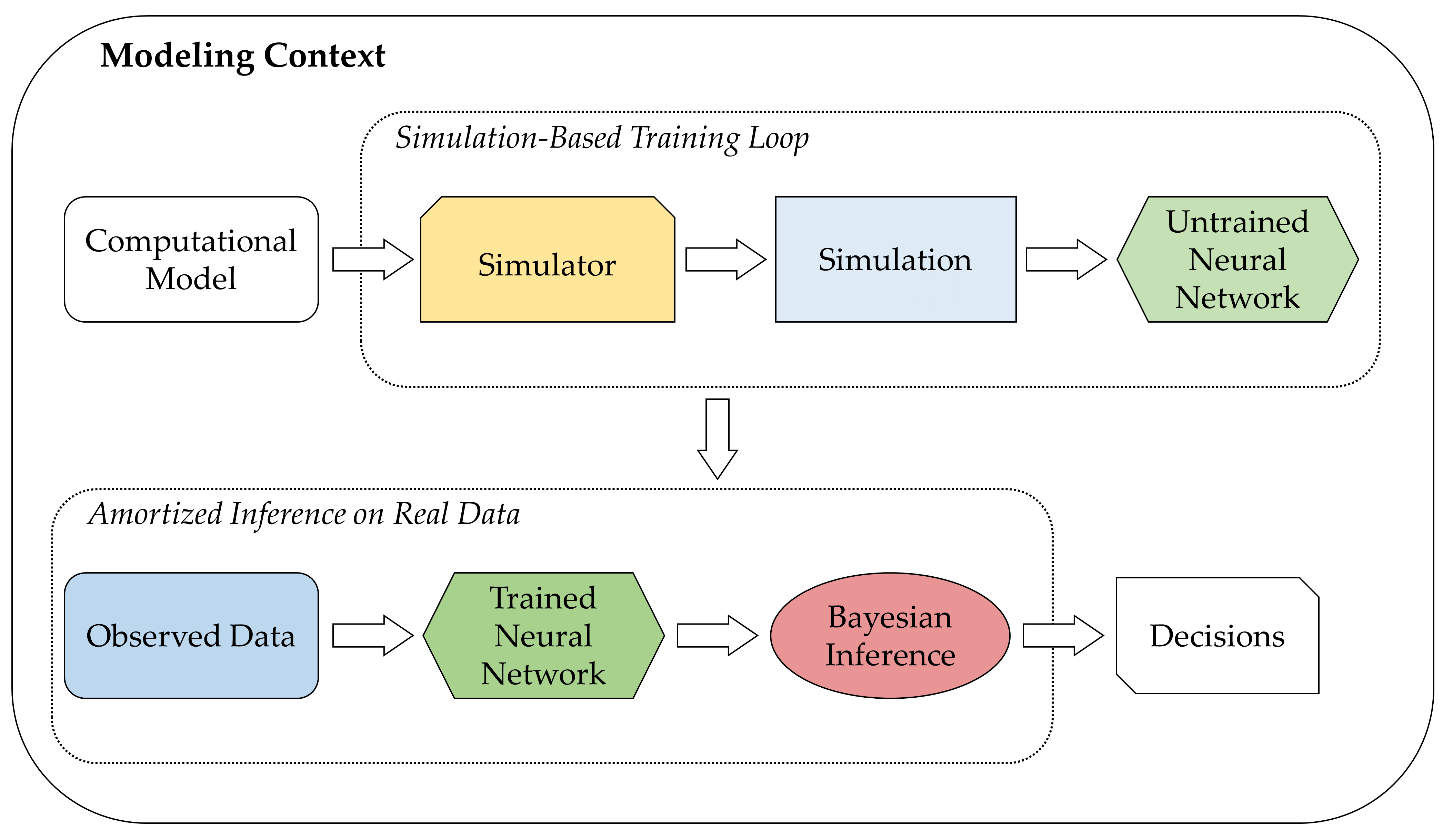

Neural density estimators have proven remarkably powerful for efficient simulation-based Bayesian inference across various research domains. In particular, the BayesFlow framework uses a two-step approach to enable amortized parameter estimation in settings where the likelihood function is only implicitly defined by a simulation program. But how faithful is such inference when simulations are poor representations of reality? In this paper, we conceptualize the types of model misspecification arising in simulation-based inference and systematically investigate the performance of the BayesFlow framework under these misspecifications. We propose an augmented optimization objective that imposes a probabilistic structure on the latent data space, and we use maximum mean discrepancy (MMD) to detect, during inference, potentially catastrophic misspecifications that undermine the validity of the obtained results. We verify our detection criterion on a number of artificial and realistic misspecifications, ranging from toy conjugate models to complex models of decision making and disease outbreak dynamics applied to real data. Further, we show that posterior inference errors increase as a function of the distance between the true data-generating distribution and the typical set of simulations in the latent summary space. Thus, we demonstrate the dual utility of MMD as a method for detecting model misspecification and as a proxy for verifying the faithfulness of amortized Bayesian inference.
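To make the role of MMD concrete, the following is a minimal sketch of a sample-based (biased, V-statistic) estimate of squared MMD with a Gaussian kernel. It is not the BayesFlow API and not necessarily the estimator used in the paper: the function names, the fixed kernel bandwidth, and the synthetic arrays standing in for latent summary vectors are all assumptions made for illustration.

```python
import numpy as np


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel matrix between the rows of x and the rows of y."""
    sq_dists = (
        np.sum(x**2, axis=1)[:, None]
        + np.sum(y**2, axis=1)[None, :]
        - 2.0 * x @ y.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    sq_dists = np.maximum(sq_dists, 0.0)
    return np.exp(-sq_dists / (2.0 * bandwidth**2))


def mmd_squared(x, y, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between two samples."""
    k_xx = gaussian_kernel(x, x, bandwidth).mean()
    k_yy = gaussian_kernel(y, y, bandwidth).mean()
    k_xy = gaussian_kernel(x, y, bandwidth).mean()
    return k_xx + k_yy - 2.0 * k_xy


# Hypothetical stand-ins for summary vectors (assumption: 2-D summaries).
rng = np.random.default_rng(0)
simulated = rng.normal(0.0, 1.0, size=(500, 2))      # summaries of simulations
well_specified = rng.normal(0.0, 1.0, size=(500, 2))  # data from the same model
misspecified = rng.normal(3.0, 1.0, size=(500, 2))    # data from a shifted model

mmd_same = mmd_squared(simulated, well_specified)
mmd_shifted = mmd_squared(simulated, misspecified)
print(f"MMD^2 (well specified): {mmd_same:.4f}")
print(f"MMD^2 (misspecified):   {mmd_shifted:.4f}")
```

In this toy setting, the estimate for the shifted sample should be markedly larger than for the sample drawn from the same distribution, which is the kind of signal a detection criterion based on MMD relies on. Choosing the kernel and bandwidth, and turning the statistic into a formal test, are left open here.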
