Computing Multiple Image Reconstructions with a Single Hypernetwork

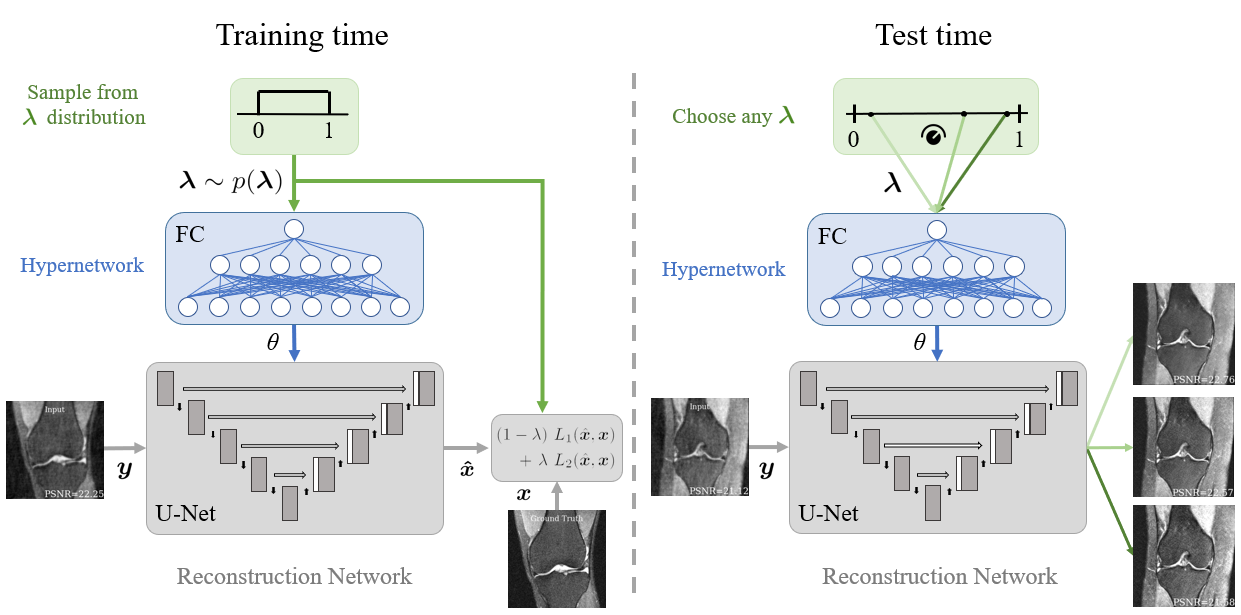

Deep-learning-based techniques achieve state-of-the-art results in a wide range of image reconstruction tasks, such as compressed sensing. These methods almost always have hyperparameters, such as the weight coefficients that balance the different terms in the optimized loss function. The typical approach is to train the model for a hyperparameter setting chosen on empirical or theoretical grounds. Consequently, at inference time, the model can only compute reconstructions corresponding to the predetermined hyperparameter values. In this work, we present a hypernetwork-based approach, called HyperRecon, to train reconstruction models that are agnostic to hyperparameter settings. At inference time, HyperRecon can efficiently produce diverse reconstructions, each corresponding to a different hyperparameter value. In this framework, the user is empowered to select the most useful output(s) based on their own judgment. We demonstrate our method on compressed sensing, super-resolution, and denoising tasks, using two large-scale, publicly available MRI datasets. Our code is available at https://github.com/alanqrwang/hyperrecon.
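
To make the idea concrete, the following is a minimal NumPy sketch of a hypernetwork that maps a hyperparameter value to the weights of a reconstruction model. It is not the HyperRecon implementation: the tiny linear reconstruction model, the convex-combination loss with a total-variation term, and all names (`init_hypernet`, `hypernet`, `reconstruct`, `weighted_loss`) are assumptions chosen for illustration, and the hypernetwork is left untrained.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_hypernet(hidden, n_weights):
    """Randomly initialise a one-hidden-layer hypernetwork (hypothetical sizes)."""
    return {
        "W1": rng.normal(0.0, 0.1, (hidden, 1)),
        "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 0.1, (n_weights, hidden)),
        "b2": np.zeros(n_weights),
    }

def hypernet(params, lam):
    """Map a scalar hyperparameter lam to a flat weight vector theta."""
    h = np.tanh(params["W1"] @ np.array([lam]) + params["b1"])
    return params["W2"] @ h + params["b2"]

def reconstruct(theta, y, n):
    """Assumed reconstruction model: a single linear layer whose weights are theta."""
    W = theta.reshape(n, y.size)
    return W @ y

def weighted_loss(x_hat, y, A, lam):
    """Assumed loss: lam balances data consistency against a total-variation term."""
    data_term = np.sum((A @ x_hat - y) ** 2)
    reg_term = np.sum(np.abs(np.diff(x_hat)))
    return (1.0 - lam) * data_term + lam * reg_term

# Toy compressed-sensing setup: m measurements of an n-sample signal.
n, m = 8, 4
A = rng.normal(size=(m, n))   # assumed forward (measurement) operator
x_true = rng.normal(size=n)
y = A @ x_true

params = init_hypernet(hidden=16, n_weights=n * m)

# One hypernetwork, several reconstructions: sweep lam at inference time.
recons = {}
losses = {}
for lam in (0.25, 0.5, 0.75):
    theta = hypernet(params, lam)
    recons[lam] = reconstruct(theta, y, n)
    losses[lam] = weighted_loss(recons[lam], y, A, lam)
```

In a trained version of this sketch, `lam` would be sampled at random during training so that one set of hypernetwork parameters covers the whole range of loss weightings; at inference, sweeping `lam` yields a family of reconstructions for the user to choose from.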

fastMRI
