CondenseNet V2: Sparse Feature Reactivation for Deep Networks

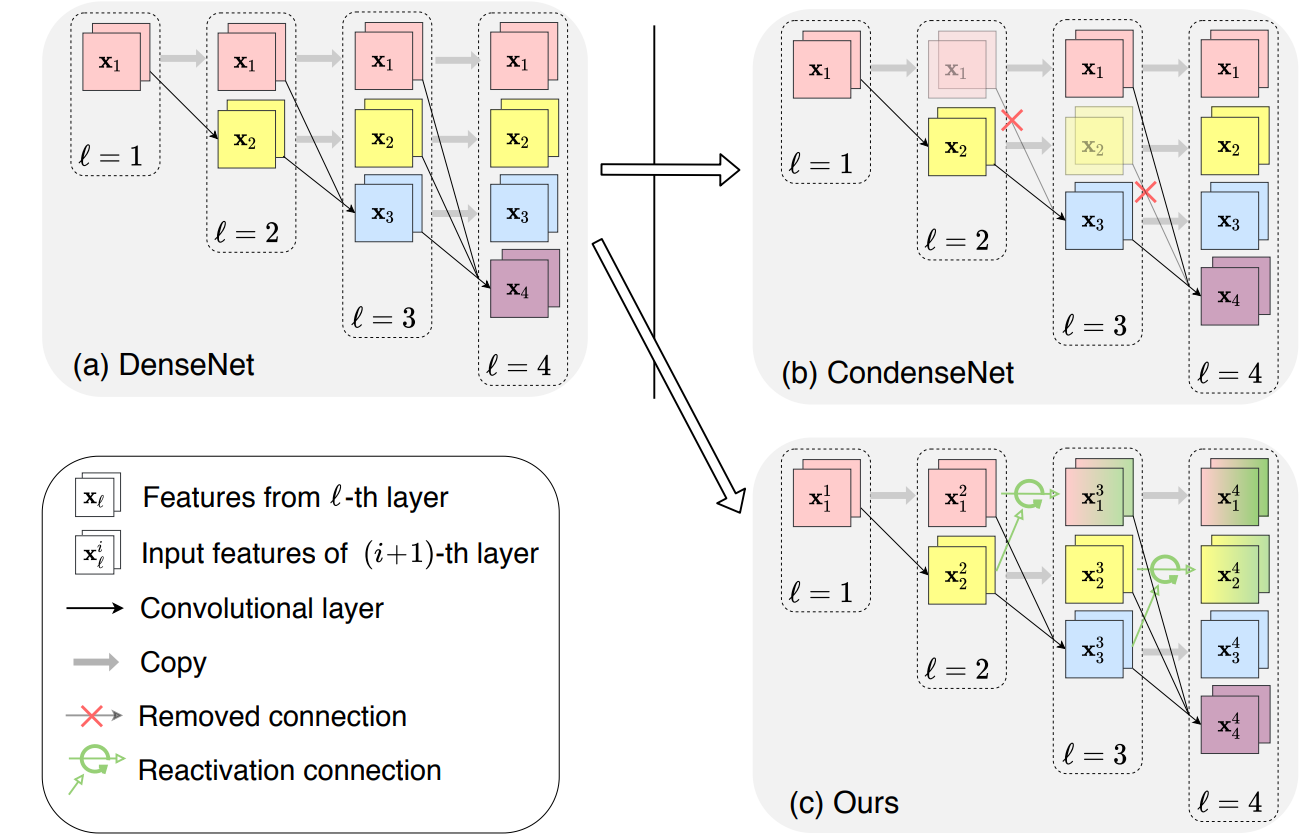

Reusing features in deep networks through dense connectivity is an effective way to achieve high computational efficiency. The recently proposed CondenseNet has shown that this mechanism can be further improved if redundant features are removed. In this paper, we propose an alternative approach named sparse feature reactivation (SFR), which aims to actively increase the utility of features for reuse. In the proposed network, named CondenseNetV2, each layer can simultaneously learn to 1) selectively reuse a set of the most important features from preceding layers; and 2) actively update a set of preceding features to increase their utility for later layers. Our experiments show that the proposed models achieve promising performance on image classification (ImageNet and CIFAR) and object detection (MS COCO) in terms of both theoretical efficiency and practical speed.
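The two behaviours named in the abstract, selective reuse and sparse reactivation, can be illustrated with a toy sketch. This is not the authors' implementation: each "feature" is reduced to a single float instead of a feature map, the weights are made-up numbers rather than learned values, and the names `sfr_layer`, `top_k_indices`, `k_reuse`, and `k_update` are hypothetical. Ranking connections by weight magnitude and applying the update additively are assumptions made for illustration.

```python
# Toy sketch only: scalar "features" and hand-picked weights (all assumptions).

def top_k_indices(scores, k):
    """Indices of the k largest scores, returned in ascending index order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


def sfr_layer(features, reuse_weights, update_weights, k_reuse, k_update):
    """One hypothetical layer: sparse reuse, then sparse reactivation.

    features       -- outputs of all preceding layers (list of floats)
    reuse_weights  -- one weight per preceding feature, used to build the new feature
    update_weights -- one weight per preceding feature, used to refresh it
    """
    # 1) Selective reuse: keep only the k_reuse inputs with the largest |weight|.
    reused = top_k_indices([abs(w) for w in reuse_weights], k_reuse)
    pre_activation = sum(reuse_weights[i] * features[i] for i in reused)
    new_feature = max(0.0, pre_activation)  # ReLU

    # 2) Sparse reactivation: additively update only k_update preceding features.
    reactivated = top_k_indices([abs(w) for w in update_weights], k_update)
    updated = list(features)  # copy, so the caller's list is left unchanged
    for i in reactivated:
        updated[i] += update_weights[i] * new_feature

    # Dense connectivity: later layers see the refreshed features plus the new one.
    return updated + [new_feature], reused, reactivated


features = [1.0, 2.0, 3.0, 4.0]
out, reused, reactivated = sfr_layer(
    features,
    reuse_weights=[0.5, 0.0, -0.25, 1.0],
    update_weights=[0.0, 2.0, 0.0, 0.0],
    k_reuse=2,
    k_update=1,
)
print(out, reused, reactivated)
```

In this sketch only features 0 and 3 feed the new feature, and only feature 1 is refreshed before being passed on, so both the reuse and the update connections stay sparse.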

CVPR 2021

CIFAR-10

ImageNet

MS COCO

CIFAR-100
