CONetV2: Efficient Auto-Channel Size Optimization for CNNs

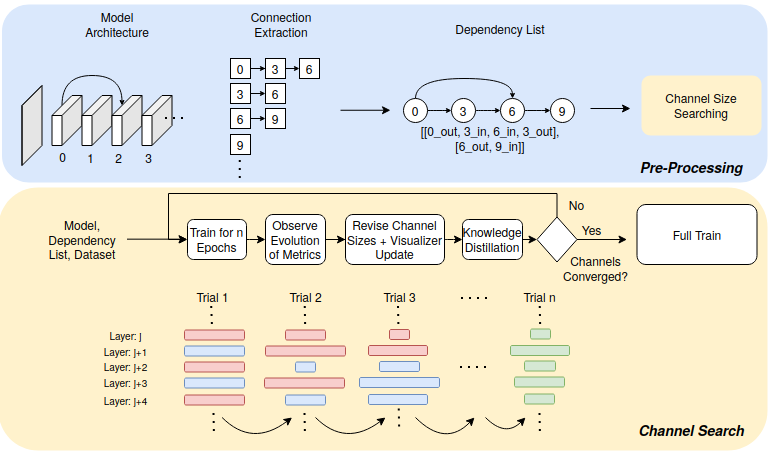

Neural Architecture Search (NAS) has been pivotal in finding optimal network configurations for Convolutional Neural Networks (CNNs). While many methods explore NAS from a global search-space perspective, the optimization schemes they employ typically require heavy computational resources. This work introduces a method that is efficient in computationally constrained environments by examining the micro-search space of channel size. To tackle channel-size optimization, we design an automated algorithm that extracts the dependencies between connected layers of the network. In addition, we introduce the idea of knowledge distillation, which preserves trained weights amidst trials in which the channel sizes change. Further, since the standard performance indicators (accuracy, loss) provide only an overall network evaluation and fail to capture the performance of individual network components, we introduce a novel metric that correlates highly with test accuracy and enables analysis of individual network layers. Combining dependency extraction, metrics, and knowledge distillation, we introduce an efficient search algorithm with simulated-annealing-inspired stochasticity, and demonstrate its effectiveness in finding optimal architectures that outperform baselines by a large margin.
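The abstract does not spell out the search procedure, so the following is only a minimal sketch of what a simulated-annealing-style search over per-layer channel sizes could look like. It is a generic annealing loop, not the authors' algorithm: the function `anneal_channel_sizes`, its parameters, and the `toy_score` objective are all hypothetical, and `toy_score` merely stands in for whatever layer-wise metric the paper defines.

```python
import math
import random


def anneal_channel_sizes(initial, score_fn, steps=200, step_size=8,
                         min_channels=8, t_start=1.0, t_end=0.01, seed=0):
    """Generic simulated-annealing search over per-layer channel sizes.

    `score_fn` is a hypothetical stand-in for a quality metric (higher is
    better); it is NOT the metric proposed in the paper.
    """
    rng = random.Random(seed)
    current = list(initial)
    current_score = score_fn(current)
    best, best_score = list(current), current_score

    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        frac = step / max(steps - 1, 1)
        temperature = t_start * (t_end / t_start) ** frac

        # Propose a move: grow or shrink one randomly chosen layer.
        candidate = list(current)
        layer = rng.randrange(len(candidate))
        delta = rng.choice((-step_size, step_size))
        candidate[layer] = max(min_channels, candidate[layer] + delta)

        candidate_score = score_fn(candidate)
        gain = candidate_score - current_score
        # Always accept improvements; accept regressions with
        # probability exp(gain / temperature), which shrinks as we cool.
        if gain >= 0 or rng.random() < math.exp(gain / temperature):
            current, current_score = candidate, candidate_score
            if current_score > best_score:
                best, best_score = list(current), current_score

    return best, best_score


def toy_score(sizes):
    # Hypothetical objective: prefer sizes close to an arbitrary target.
    target = [32, 64, 128]
    return -sum((s - t) ** 2 for s, t in zip(sizes, target)) / 1000.0


initial_sizes = [16, 16, 16]
best_sizes, best_score = anneal_channel_sizes(initial_sizes, toy_score)
```

In this sketch the temperature controls how often a worse configuration is accepted, which is the stochastic element that lets the search escape poor local choices early on. In a real setting each call to `score_fn` would involve briefly training the candidate network, which is where weight preservation across channel-size changes would matter.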

CIFAR-10

CIFAR-100
