Context-Aware Interaction Network for RGB-T Semantic Segmentation

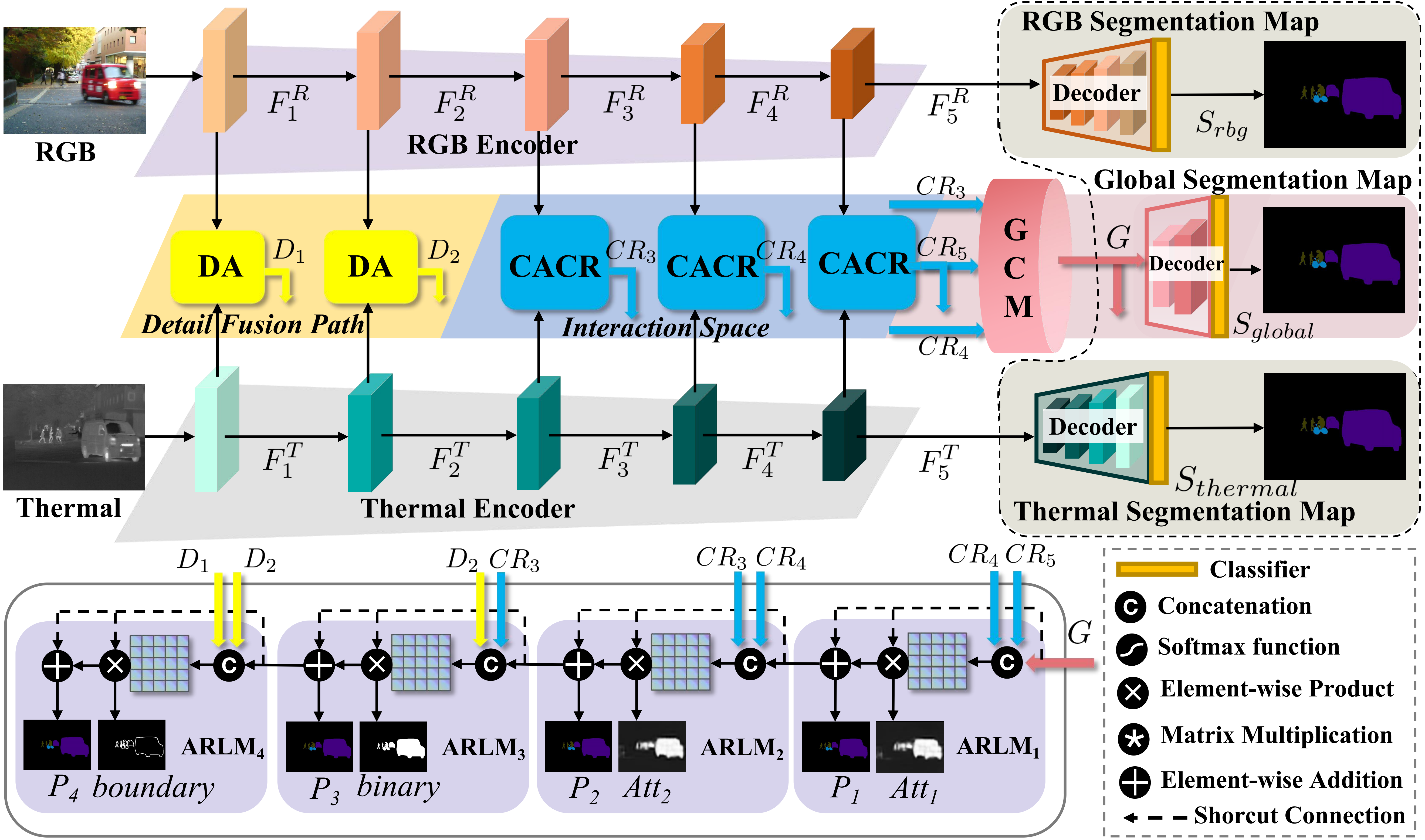

RGB-T semantic segmentation is a key technique for scene understanding in autonomous driving. Existing RGB-T semantic segmentation methods, however, do not effectively explore the complementary relationship between modalities when information interacts across multiple levels. To address this issue, we propose the Context-Aware Interaction Network (CAINet) for RGB-T semantic segmentation, which constructs an interaction space to exploit auxiliary tasks and global context for explicitly guided learning. Specifically, we propose a Context-Aware Complementary Reasoning (CACR) module that establishes the complementary relationship between multimodal features using long-term context in both the spatial and channel dimensions. Further, considering the importance of global contextual and detailed information, we propose the Global Context Modeling (GCM) module and the Detail Aggregation (DA) module, and we introduce specific auxiliary supervision to explicitly guide the context interaction and refine the segmentation map. Extensive experiments on two benchmark datasets, MFNet and PST900, demonstrate that the proposed CAINet achieves state-of-the-art performance. The code is available at https://github.com/YingLv1106/CAINet.


| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Benchmark |
|---|---|---|---|---|---|---|
| Thermal Image Segmentation | MFN Dataset | CAINet (MobileNet-V2) | mIOU | 58.6% | # 8 | |
| Semantic Segmentation | NYU Depth v2 | CAINet (MobileNet-V2) | Mean IoU | 52.6% | # 29 | |
| Thermal Image Segmentation | PST900 | CAINet (MobileNet-V2) | mIoU | 84.74 | # 7 | |
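The metric in the table, mean intersection over union (mIoU), averages the per-class ratio of correctly labeled pixels to the union of predicted and ground-truth pixels for that class. The following is a minimal pure-Python sketch of that standard definition, not the evaluation code from the CAINet repository. The function names, the `ignore_index` parameter, and the choice to skip classes that appear in neither prediction nor ground truth are assumptions made for illustration; the exact protocol behind the reported numbers is not stated here.

```python
def confusion_matrix(pred, target, num_classes, ignore_index=None):
    """Count pixels: rows are ground-truth classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, target):
        if t == ignore_index:
            continue  # assumption: unlabeled pixels are excluded from the score
        matrix[t][p] += 1
    return matrix


def mean_iou(matrix):
    """Average IoU = TP / (TP + FP + FN) over the classes that occur."""
    ious = []
    for c in range(len(matrix)):
        tp = matrix[c][c]
        fn = sum(matrix[c]) - tp                 # ground truth c, predicted otherwise
        fp = sum(row[c] for row in matrix) - tp  # predicted c, ground truth otherwise
        denom = tp + fp + fn
        if denom == 0:
            continue  # assumption: skip classes absent from both pred and target
        ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else float("nan")


# Toy example with flattened label maps (hypothetical data, 3 classes).
target = [0, 0, 1, 1, 2, 2]
pred = [0, 1, 1, 1, 2, 0]
score = mean_iou(confusion_matrix(pred, target, num_classes=3))
print(f"mIoU = {score:.4f}")
```

In the toy example the per-class IoUs are 1/3, 2/3, and 1/2. Multiplying the mean by 100 gives a percentage comparable in form to the table entries.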

ImageNet

NYUv2

PST900
