Deep Ordinal Regression using Optimal Transport Loss and Unimodal Output Probabilities

It is often desirable for ordinal regression models to yield unimodal predictions. However, in many recent works this property is either absent or implemented using soft targets, which do not guarantee unimodal outputs at inference. In addition, we argue that the standard maximum likelihood objective is not suitable for ordinal regression problems, and that optimal transport is better suited for this task, as it naturally captures the order of the classes. In this work, we propose a framework for deep ordinal regression based on a unimodal output distribution and an optimal transport loss. Inspired by the well-known Proportional Odds model, we propose to modify its design by using an architectural mechanism which guarantees that the model output distribution will be unimodal. We empirically analyze the different components of our proposed approach and demonstrate their contribution to the performance of the model. Experimental results on eight real-world datasets demonstrate that our proposed approach consistently performs on par with, and often better than, several recently proposed deep learning approaches for ordinal regression with unimodal output probabilities, while guaranteeing output unimodality. In addition, we demonstrate that the proposed approach is less overconfident than current baselines.
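The claim that optimal transport captures class order, while the likelihood objective does not, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the ground cost |i - label|, and the toy distributions are all assumptions chosen for illustration. When the target is a single label, the transport cost reduces to the expected distance between the predicted class and the true class.

```python
import math

def ot_loss_to_label(probs, label, power=1):
    """Transport cost from `probs` to a one-hot target at `label`.

    Assumes ground cost |i - label| ** power between ordered classes.
    With a one-hot target, all mass must move to `label`, so the cost
    is the expected ground cost under `probs`.
    """
    return sum(p * abs(i - label) ** power for i, p in enumerate(probs))

def cross_entropy(probs, label):
    """Negative log-likelihood of the true label; ignores class order."""
    return -math.log(probs[label])

def is_unimodal(probs, tol=1e-12):
    """True if probs rise weakly to a single peak, then fall weakly."""
    peak = max(range(len(probs)), key=probs.__getitem__)
    rising = all(probs[i] <= probs[i + 1] + tol for i in range(peak))
    falling = all(probs[i] + tol >= probs[i + 1]
                  for i in range(peak, len(probs) - 1))
    return rising and falling

# Two hypothetical predictions over 5 ordered classes, true label = 1.
# Both assign 0.6 to the true class; they differ in where the error mass sits.
label = 1
near = [0.1, 0.6, 0.3, 0.0, 0.0]  # error mass on an adjacent class
far = [0.1, 0.6, 0.0, 0.0, 0.3]   # error mass three classes away

for name, probs in (("near", near), ("far", far)):
    print(name,
          "CE=%.3f" % cross_entropy(probs, label),
          "OT=%.3f" % ot_loss_to_label(probs, label),
          "unimodal=%s" % is_unimodal(probs))
```

In this toy case the cross-entropy is identical for both predictions, since it depends only on the probability of the true class, whereas the transport cost is larger for the prediction that places mass far from the true label. The second prediction is also bimodal, which is the kind of output an architectural unimodality guarantee would rule out.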

PDF Abstract

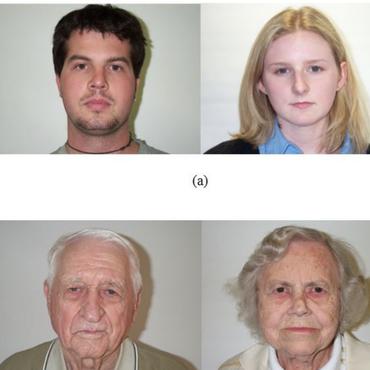

Adience