DiffBlender: Scalable and Composable Multimodal Text-to-Image Diffusion Models

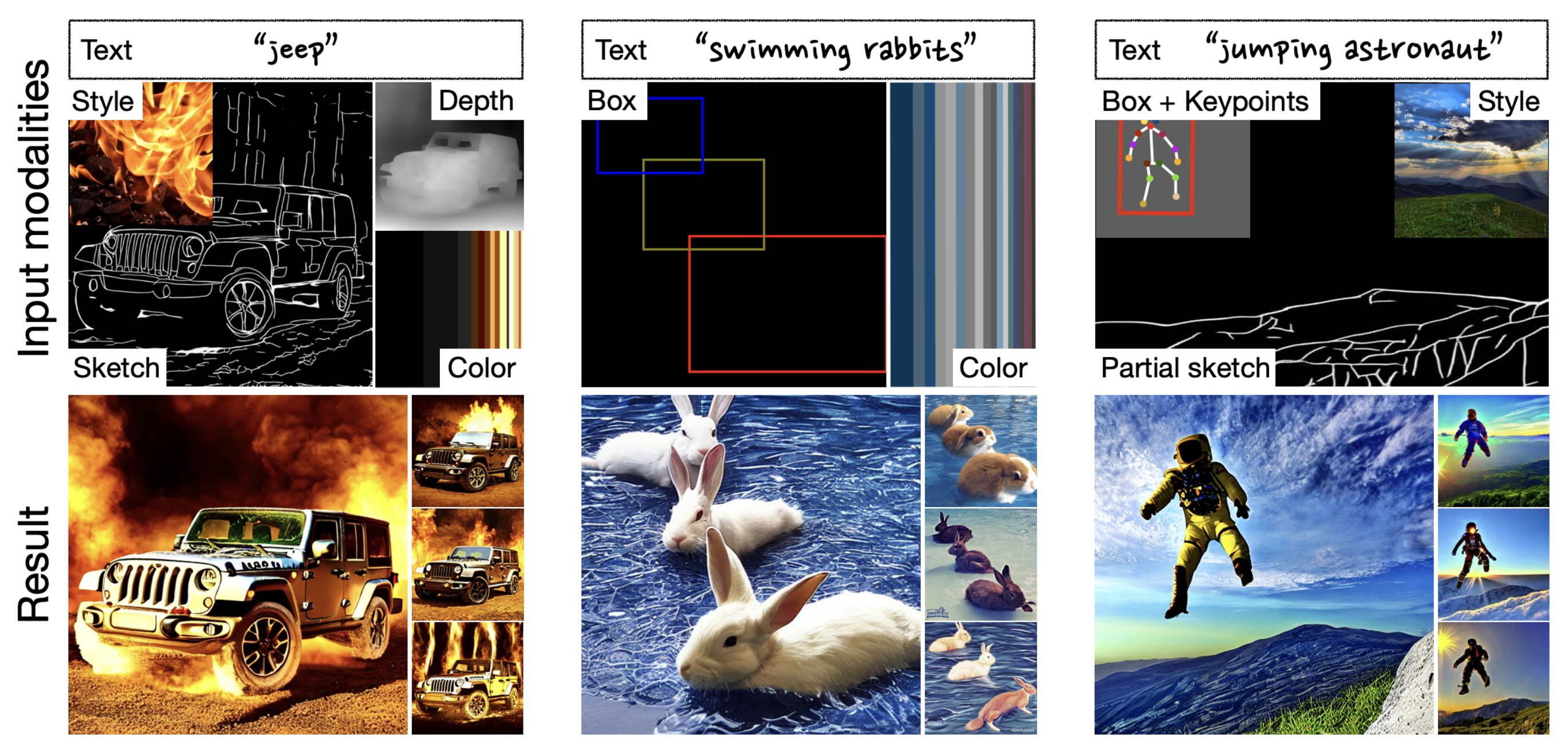

In this study, we aim to extend the capabilities of diffusion-based text-to-image (T2I) generation models by incorporating diverse modalities beyond textual description, such as sketch, box, color palette, and style embedding, within a single model. We thus design a multimodal T2I diffusion model, coined as DiffBlender, by separating the channels of conditions into three types, i.e., image forms, spatial tokens, and non-spatial tokens. The unique architecture of DiffBlender facilitates adding new input modalities, pioneering a scalable framework for conditional image generation. Notably, we achieve this without altering the parameters of the existing generative model, Stable Diffusion, only with updating partial components. Our study establishes new benchmarks in multimodal generation through quantitative and qualitative comparisons with existing conditional generation methods. We demonstrate that DiffBlender faithfully blends all the provided information and showcase its various applications in the detailed image synthesis.

PDF Abstract

MS COCO

MS COCO