Disentangled Non-Local Neural Networks

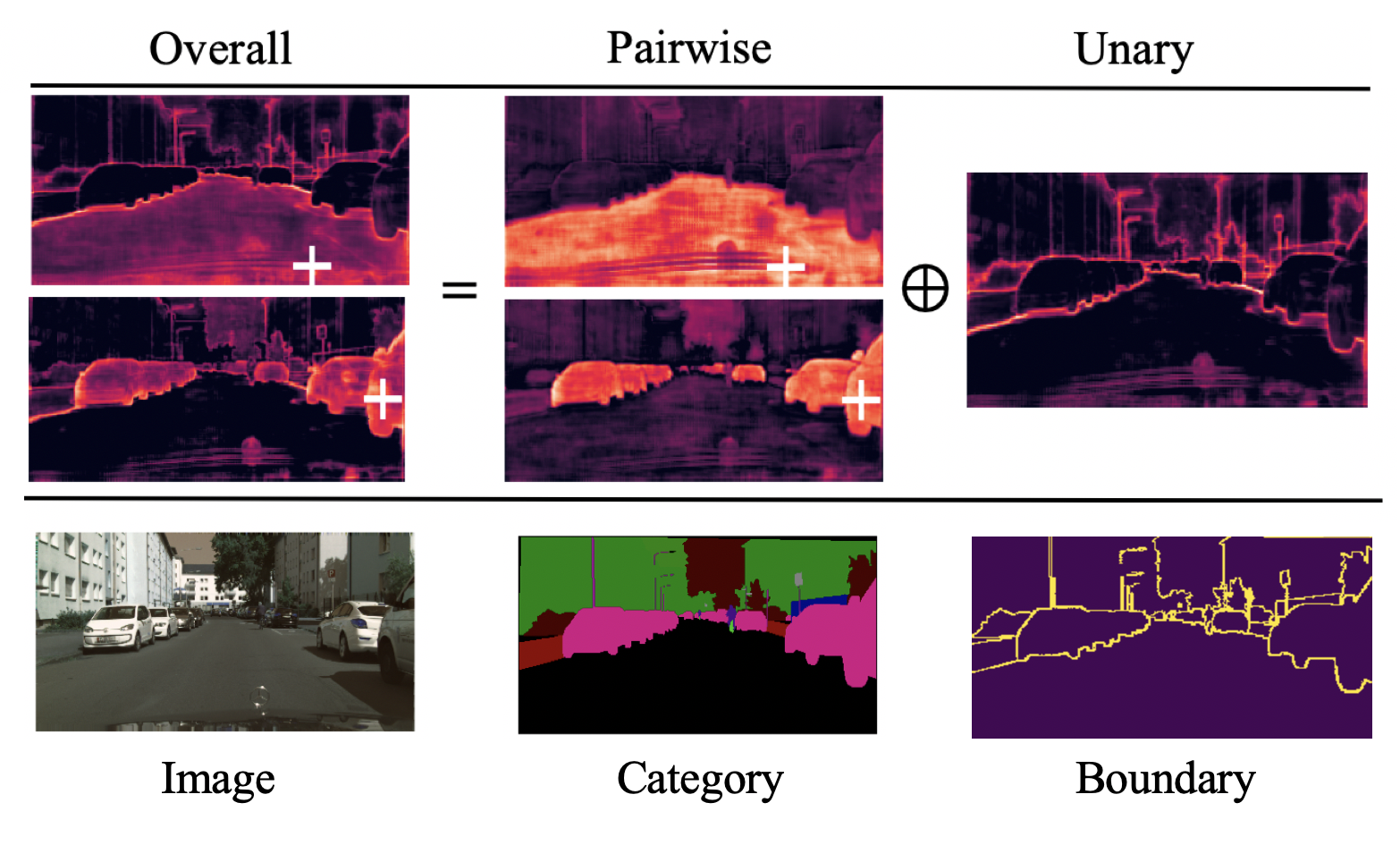

The non-local block is a popular module for strengthening the context modeling ability of a regular convolutional neural network. This paper first studies the non-local block in depth, where we find that its attention computation can be split into two terms, a whitened pairwise term accounting for the relationship between two pixels and a unary term representing the saliency of every pixel. We also observe that the two terms trained alone tend to model different visual clues, e.g. the whitened pairwise term learns within-region relationships while the unary term learns salient boundaries. However, the two terms are tightly coupled in the non-local block, which hinders the learning of each. Based on these findings, we present the disentangled non-local block, where the two terms are decoupled to facilitate learning for both terms. We demonstrate the effectiveness of the decoupled design on various tasks, such as semantic segmentation on Cityscapes, ADE20K and PASCAL Context, object detection on COCO, and action recognition on Kinetics.
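The split described in the abstract can be checked numerically. The sketch below is not the authors' implementation: it assumes dot-product (embedded Gaussian) attention with a softmax over key positions, and the names `split_attention`, `q`, and `k` are hypothetical. It relies on the algebraic identity q_i·k_j = (q_i − μ_q)·(k_j − μ_k) + μ_q·k_j + (terms constant in j), where μ_q and μ_k are the mean query and key; the constant terms cancel inside the softmax. The final lines show one possible way to decouple the terms (a separate softmax for each, then a sum), which is an assumption made for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def nonlocal_attention(q, k):
    # Standard dot-product attention: row i holds the weights of query pixel i
    # over all key pixels j.
    return softmax(q @ k.T, axis=-1)

def split_attention(q, k):
    # Hypothetical helper: returns the whitened pairwise logits and unary logits.
    mu_q = q.mean(axis=0, keepdims=True)   # mean query, shape (1, C)
    mu_k = k.mean(axis=0, keepdims=True)   # mean key, shape (1, C)
    pairwise = (q - mu_q) @ (k - mu_k).T   # (N, N): relationship between pixels i and j
    unary = mu_q @ k.T                     # (1, N): per-pixel saliency, shared by all queries
    return pairwise, unary

rng = np.random.default_rng(0)
q = rng.normal(size=(6, 4))  # 6 pixels, 4 channels (toy sizes)
k = rng.normal(size=(6, 4))

pairwise, unary = split_attention(q, k)

# Coupled form: both terms share one softmax, which reproduces the original attention.
coupled = softmax(pairwise + unary, axis=-1)
assert np.allclose(coupled, nonlocal_attention(q, k))

# Decoupled form (assumption for illustration): each term gets its own softmax.
decoupled = softmax(pairwise, axis=-1) + softmax(unary, axis=-1)
```

In the coupled form, the gradient of each term is scaled by the other through the shared softmax, which is one way to read the abstract's claim that coupling hinders learning. In the decoupled sketch, each term is normalized independently.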

ECCV 2020

Datasets

Cityscapes, Kinetics, ADE20K, PASCAL Context, DensePASS, DADA-seg

Results from the Paper

Ranked #20 on Semantic Segmentation on Cityscapes test (using extra training data)