Diversified Arbitrary Style Transfer via Deep Feature Perturbation

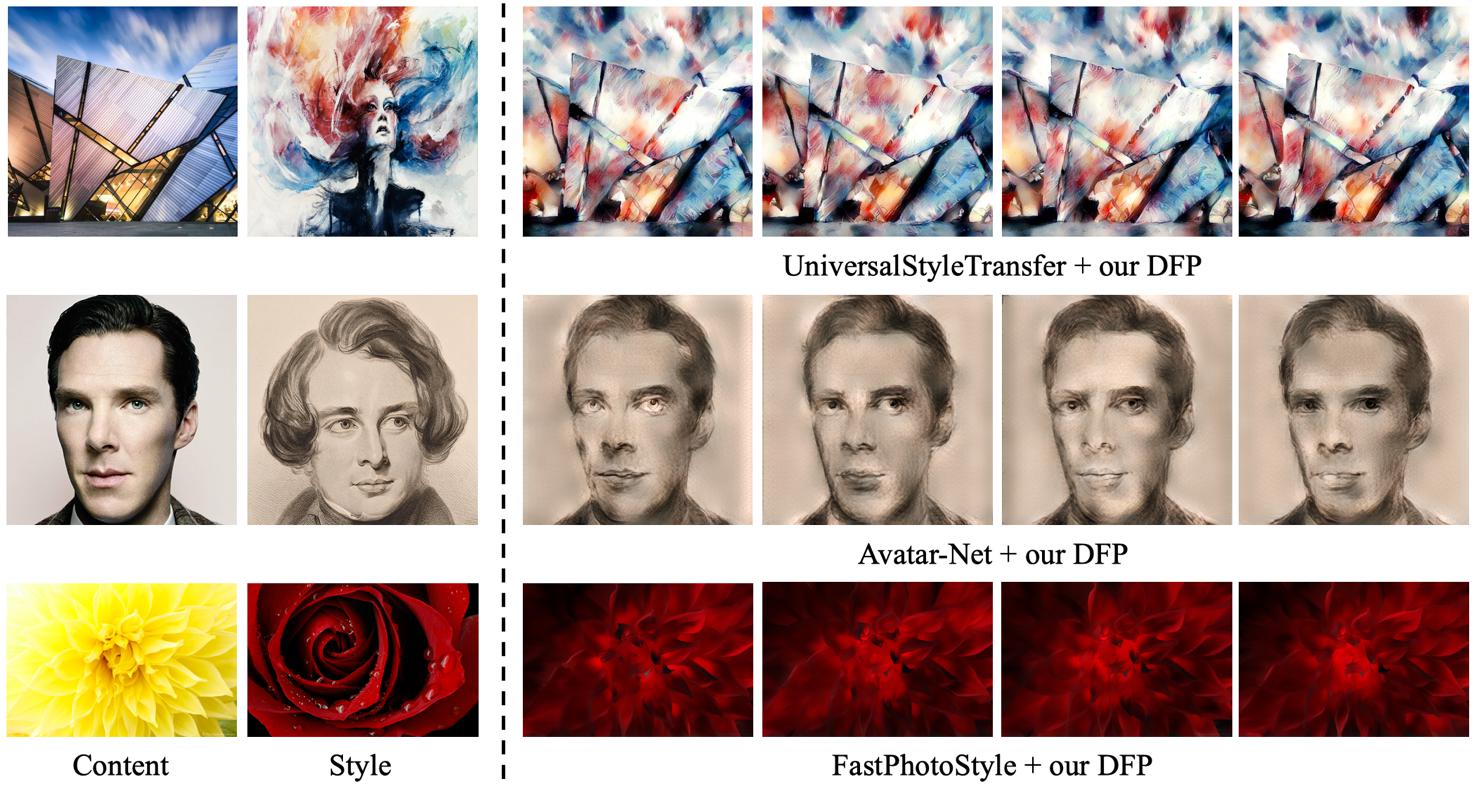

Image style transfer is an underdetermined problem in which a large number of solutions can satisfy the same constraint (the content and style). Although there have been some efforts to improve the diversity of style transfer by introducing an alternative diversity loss, these approaches suffer from restricted generalization, limited diversity, and poor scalability. In this paper, we tackle these limitations and propose a simple yet effective method for diversified arbitrary style transfer. The key idea of our method is an operation called deep feature perturbation (DFP), which uses an orthogonal random noise matrix to perturb the deep image feature maps while keeping the original style information unchanged. Our DFP operation can be easily integrated into many existing methods based on the whitening and coloring transform (WCT), enabling them to generate diverse results for arbitrary styles. Experimental results demonstrate that this learning-free and universal method can greatly increase diversity while maintaining the quality of stylization.
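The idea of perturbing features with an orthogonal matrix while leaving the style statistics unchanged can be illustrated with a minimal NumPy sketch. It assumes features are flattened to a `C x N` matrix, that "style information" means the channel mean and covariance used by WCT, and that the orthogonal matrix is inserted between the whitening and coloring steps; the function names and this exact placement are illustrative assumptions, not taken from the abstract.

```python
import numpy as np

def whiten(f):
    """Center a C x N feature matrix and whiten it (covariance becomes identity)."""
    f = f - f.mean(axis=1, keepdims=True)
    cov = f @ f.T / (f.shape[1] - 1)
    w, v = np.linalg.eigh(cov)  # cov = v diag(w) v^T, assumed full rank
    return v @ np.diag(w ** -0.5) @ v.T @ f

def random_orthogonal(c, rng):
    """Draw a random c x c orthogonal matrix from the QR factorization of Gaussian noise."""
    q, _ = np.linalg.qr(rng.standard_normal((c, c)))
    return q

def color_with_perturbation(f_white, f_style, z):
    """Color whitened features with the style statistics, perturbed by orthogonal z.

    Hypothetical placement: z sits inside the coloring transform. Because
    z @ z.T = I, the output covariance still equals the style covariance.
    """
    mu = f_style.mean(axis=1, keepdims=True)
    fs = f_style - mu
    cov = fs @ fs.T / (fs.shape[1] - 1)
    w, v = np.linalg.eigh(cov)
    return v @ np.diag(w ** 0.5) @ z @ v.T @ f_white + mu
```

Calling `color_with_perturbation` with different draws of `z` gives different feature maps that share the same mean and covariance as the style features, which is the property the abstract describes. Passing `np.eye(C)` as `z` reduces the sketch to the plain coloring step.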

CVPR 2020