FairNAS: Rethinking Evaluation Fairness of Weight Sharing Neural Architecture Search

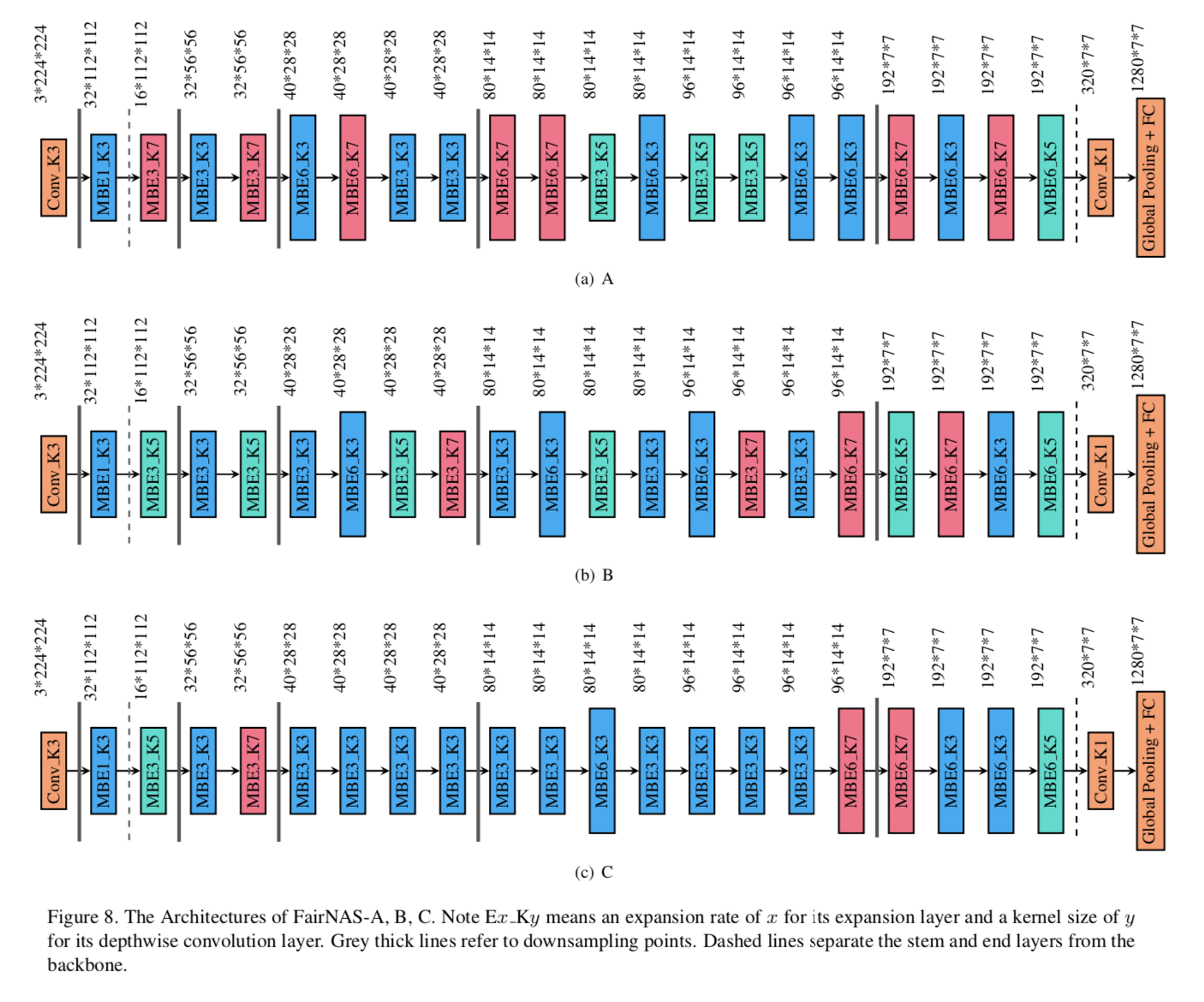

One of the most critical problems in weight-sharing neural architecture search is the evaluation of candidate models within a predefined search space. In practice, a one-shot supernet is trained to serve as an evaluator. A faithful ranking leads to more accurate search results. However, current methods are prone to making misjudgments. In this paper, we prove that their biased evaluation is due to inherent unfairness in the supernet training. In view of this, we propose two levels of constraints: expectation fairness and strict fairness. In particular, strict fairness ensures equal optimization opportunities for all choice blocks throughout training, so that their capacity is neither overestimated nor underestimated. We demonstrate that this is crucial for improving confidence in the models' ranking. By combining the one-shot supernet trained under the proposed fairness constraints with a multi-objective evolutionary search algorithm, we obtain several state-of-the-art models; for example, FairNAS-A attains 77.5% top-1 validation accuracy on ImageNet. The models and their evaluation code are publicly available at http://github.com/fairnas/FairNAS.
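One way to read "equal optimization opportunities for all choice blocks" is as sampling without replacement: in each training step, every choice block of every layer is activated exactly once. The following is a minimal sketch of that reading, not the authors' implementation. The function names, the search-space sizes, and the per-layer permutation scheme are all assumptions made for illustration.

```python
import random
from collections import Counter


def sample_strictly_fair(num_layers, num_choices, rng):
    """Return `num_choices` single-path models for one training step.

    Hypothetical helper: each model is a list with one choice-block index
    per layer. Every layer draws its own random permutation of the choice
    indices, and the k-th model takes the k-th entry of each permutation,
    so each block in each layer appears in exactly one model.
    """
    perms = [rng.sample(range(num_choices), num_choices) for _ in range(num_layers)]
    return [[perms[layer][k] for layer in range(num_layers)] for k in range(num_choices)]


def activation_counts(models, num_layers):
    """Count how often each choice block is activated, per layer."""
    counts = [Counter() for _ in range(num_layers)]
    for model in models:
        for layer, choice in enumerate(model):
            counts[layer][choice] += 1
    return counts


# Assumed toy search space: 4 layers with 3 choice blocks each.
rng = random.Random(0)
models = sample_strictly_fair(num_layers=4, num_choices=3, rng=rng)
counts = activation_counts(models, num_layers=4)
```

In a training loop built on this sketch, the gradients of the sampled paths would presumably be accumulated before a single parameter update, so that no block is updated more often than another within a step. That detail is an assumption here and is not stated in the abstract.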

ICCV 2021

Datasets

CIFAR-10

ImageNet

MS COCO

CIFAR-100

NAS-Bench-201

Results from the Paper

Ranked #3 on Neural Architecture Search on CIFAR-10 (using extra training data)