Hyperparameter-Free Out-of-Distribution Detection Using Softmax of Scaled Cosine Similarity

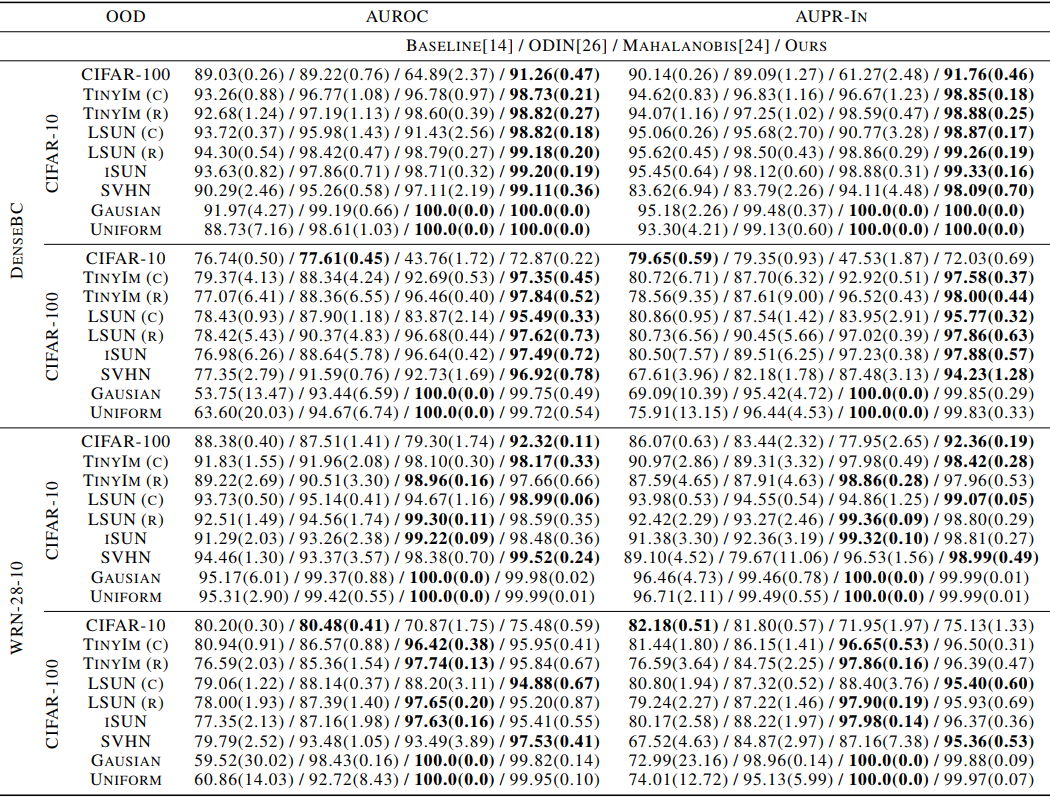

The ability to detect out-of-distribution (OOD) samples is vital to ensuring the reliability of deep neural networks in real-world applications. Because OOD samples are by nature unknown in advance, detection methods should not have hyperparameters that need to be tuned depending on incoming OOD samples. However, most of the recently proposed methods do not meet this requirement, leading to compromised performance in real-world applications. In this paper, we propose a simple, hyperparameter-free method based on softmax of scaled cosine similarity. It resembles the approach employed by modern metric learning methods but differs in details that are essential to achieving high detection performance. We show through experiments that our method outperforms the existing methods on the evaluation test recently proposed by Shafaei et al., which takes the above issue of hyperparameter dependency into account. We also show that it achieves at least comparable performance to other methods on the conventional test, where their hyperparameters are chosen using explicit OOD samples. Furthermore, it is computationally more efficient than most of the previous methods, since it needs only a single forward pass.
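To make the phrase "softmax of scaled cosine similarity" concrete, the following is a minimal sketch, not the authors' implementation. It computes class probabilities from cosine similarities between a feature vector and per-class weight vectors, multiplied by a scale before the softmax. The abstract does not say how the scale is obtained or which quantity serves as the OOD score, so two things here are assumptions made purely for illustration: the scale is passed in as a plain number, and the maximum cosine similarity is used as a confidence score. All function and variable names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def scaled_cosine_softmax(feature, class_weights, scale):
    """Return (cosines, probabilities) for one feature vector.

    The logits are scale * cos(feature, w_c) for each class weight w_c.
    `scale` is a plain input here (assumption); the abstract does not
    describe how the method determines it.
    """
    cosines = [cosine_similarity(feature, w) for w in class_weights]
    logits = [scale * c for c in cosines]
    # Subtract the maximum logit for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    return cosines, probs


def confidence_score(cosines):
    """Assumed score: the largest cosine similarity (higher = more in-distribution)."""
    return max(cosines)


# Toy example with two classes whose weights point along the two axes.
class_weights = [[1.0, 0.0], [0.0, 1.0]]
aligned = [3.0, 0.1]    # points almost exactly along class 0
ambiguous = [1.0, 1.0]  # equally far from both class directions

cos_a, probs_a = scaled_cosine_softmax(aligned, class_weights, scale=10.0)
cos_b, probs_b = scaled_cosine_softmax(ambiguous, class_weights, scale=10.0)
```

In this toy setup the aligned feature receives a higher confidence score than the ambiguous one, and both the probabilities and the score come from a single evaluation of the feature, which is in the spirit of the single-forward-pass property mentioned above. The sketch does not guard against zero-norm vectors.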

ImageNet

MNIST

SVHN

LSUN

Food-101

iSUN
