Implementing efficient balanced networks with mixed-signal spike-based learning circuits

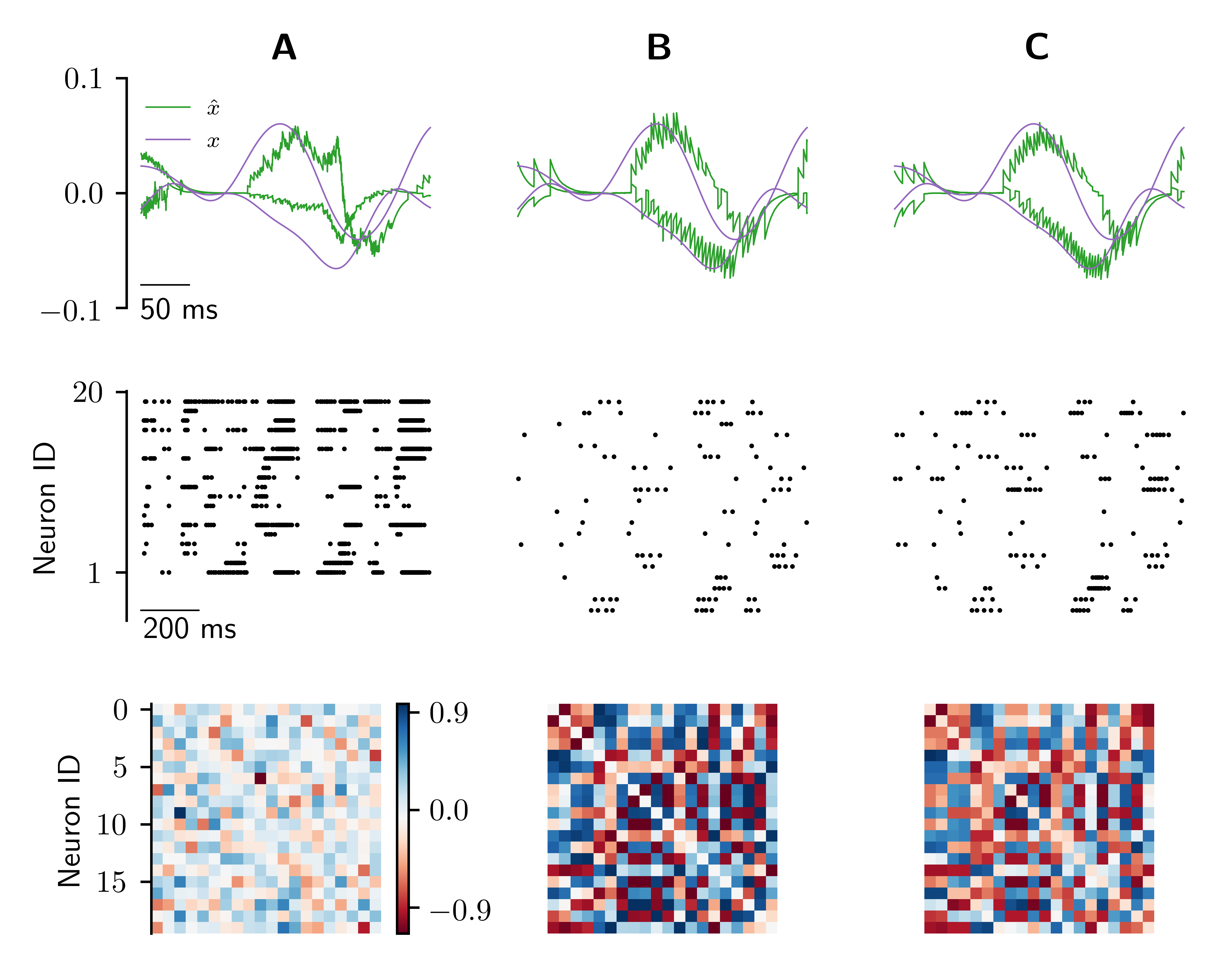

Efficient Balanced Networks (EBNs) are networks of spiking neurons in which excitatory and inhibitory synaptic currents are balanced on a short timescale, leading to desirable coding properties such as high encoding precision, low firing rates, and distributed information representation. These properties make EBNs attractive candidates for implementation in low-power neuromorphic processors. However, the degree of device mismatch in analog mixed-signal neuromorphic circuits renders the use of pre-trained EBNs challenging, if not impossible. To overcome this issue, we developed a novel local learning rule suitable for on-chip implementation that drives a randomly connected network of spiking neurons into a tightly balanced regime. Here we present the integrated circuits that implement this rule and demonstrate their expected behaviour in low-level circuit simulations. Our proposed method paves the way towards a system-level implementation of tightly balanced networks on analog mixed-signal neuromorphic hardware. Thanks to their coding properties and sparse activity, neuromorphic electronic EBNs will be ideally suited for extreme-edge computing applications that require low latency and ultra-low power consumption and that cannot rely on cloud computing for data processing.
