Improving Knowledge Distillation via Regularizing Feature Norm and Direction

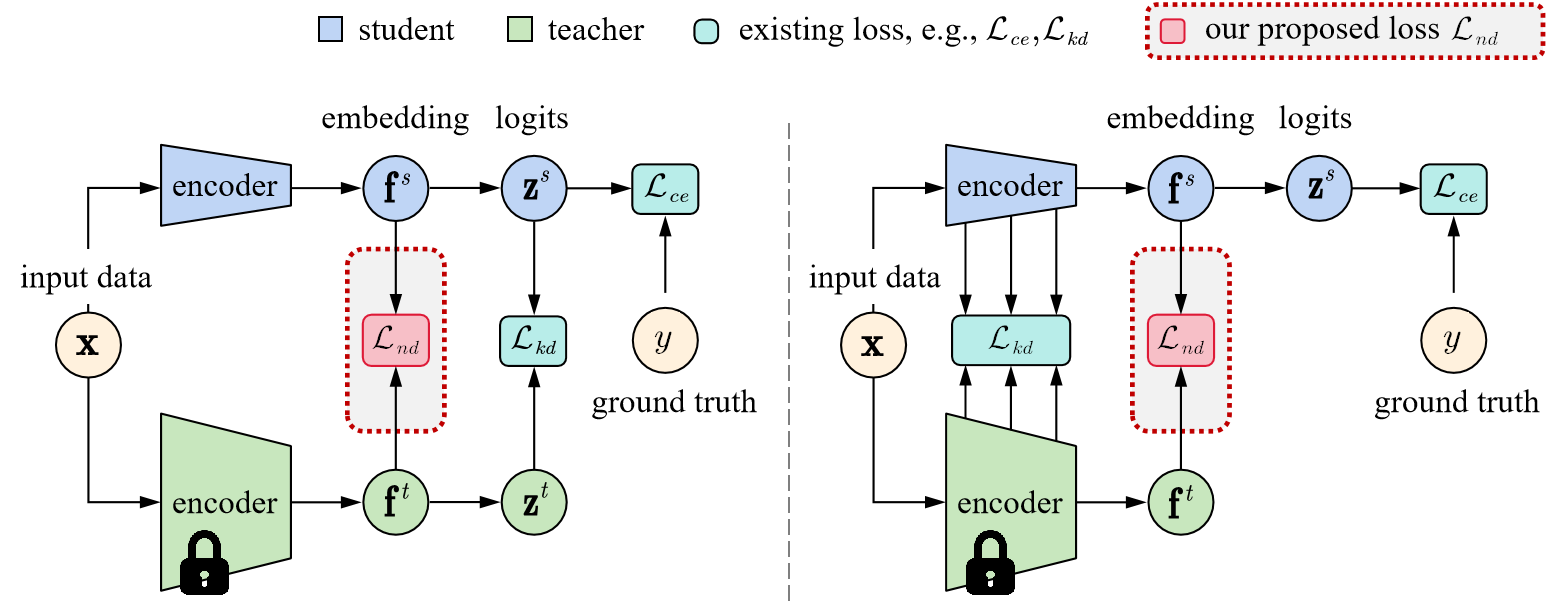

Knowledge distillation (KD) exploits a large well-trained model (i.e., teacher) to train a small student model on the same dataset for the same task. Treating teacher features as knowledge, prevailing methods of knowledge distillation train student by aligning its features with the teacher's, e.g., by minimizing the KL-divergence between their logits or L2 distance between their intermediate features. While it is natural to believe that better alignment of student features to the teacher better distills teacher knowledge, simply forcing this alignment does not directly contribute to the student's performance, e.g., classification accuracy. In this work, we propose to align student features with class-mean of teacher features, where class-mean naturally serves as a strong classifier. To this end, we explore baseline techniques such as adopting the cosine distance based loss to encourage the similarity between student features and their corresponding class-means of the teacher. Moreover, we train the student to produce large-norm features, inspired by other lines of work (e.g., model pruning and domain adaptation), which find the large-norm features to be more significant. Finally, we propose a rather simple loss term (dubbed ND loss) to simultaneously (1) encourage student to produce large-\emph{norm} features, and (2) align the \emph{direction} of student features and teacher class-means. Experiments on standard benchmarks demonstrate that our explored techniques help existing KD methods achieve better performance, i.e., higher classification accuracy on ImageNet and CIFAR100 datasets, and higher detection precision on COCO dataset. Importantly, our proposed ND loss helps the most, leading to the state-of-the-art performance on these benchmarks. The source code is available at \url{https://github.com/WangYZ1608/Knowledge-Distillation-via-ND}.

PDF Abstract

CIFAR-10

CIFAR-10

ImageNet

ImageNet

MS COCO

MS COCO

CIFAR-100

CIFAR-100