Insights into LSTM Fully Convolutional Networks for Time Series Classification

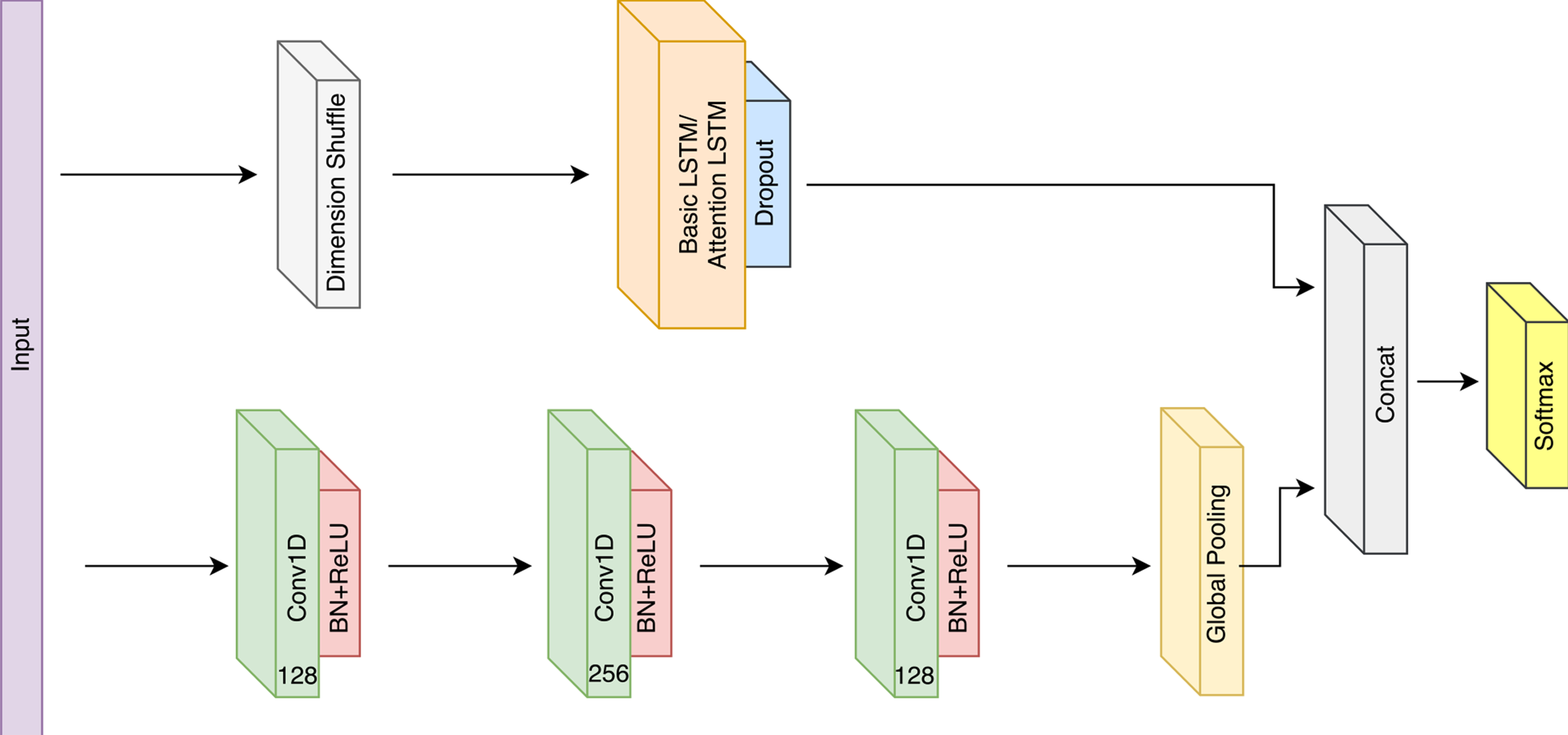

Long Short-Term Memory Fully Convolutional Networks (LSTM-FCN) and Attention LSTM-FCN (ALSTM-FCN) have been shown to achieve state-of-the-art performance on the task of classifying time series signals on the old University of California-Riverside (UCR) time series repository. However, there has been no study of why LSTM-FCN and ALSTM-FCN perform well. In this paper, we perform a series of ablation tests (3627 experiments) on LSTM-FCN and ALSTM-FCN to provide a better understanding of the model and each of its sub-modules. Results from the ablation tests on ALSTM-FCN and LSTM-FCN show that the LSTM and FCN blocks perform better when applied in a conjoined manner. Two z-normalizing techniques, z-normalizing each sample independently and z-normalizing the whole dataset, are compared using a Wilcoxon signed-rank test to show a statistical difference in performance. In addition, we provide an understanding of the impact dimension shuffle has on LSTM-FCN by comparing its performance with that of LSTM-FCN when no dimension shuffle is applied. Finally, we demonstrate the performance of LSTM-FCN when the LSTM block is replaced by a GRU, a basic RNN, and a dense block.
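To make the distinction between the two z-normalizing techniques concrete, the following is a minimal sketch rather than the authors' preprocessing code. The function names and the toy data are hypothetical, and the use of the population standard deviation, as well as computing the dataset-wide statistics over all values passed in, are assumptions made for illustration.

```python
from statistics import fmean, pstdev


def z_normalize_per_sample(dataset):
    """Z-normalize each series with its own mean and standard deviation."""
    normalized = []
    for series in dataset:
        mu = fmean(series)
        sigma = pstdev(series) or 1.0  # assumption: leave constant series unscaled
        normalized.append([(x - mu) / sigma for x in series])
    return normalized


def z_normalize_whole_dataset(dataset):
    """Z-normalize every series with one mean and std computed over all values."""
    all_values = [x for series in dataset for x in series]
    mu = fmean(all_values)
    sigma = pstdev(all_values) or 1.0  # same guard as above
    return [[(x - mu) / sigma for x in series] for series in dataset]


# Hypothetical toy dataset: two series with the same shape but different scales.
toy = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]]
per_sample = z_normalize_per_sample(toy)
whole = z_normalize_whole_dataset(toy)
```

In this toy case, per-sample normalization maps both series to the same values, since they differ only in scale, whereas whole-dataset normalization preserves the scale difference between them. Which behaviour helps a classifier depends on whether amplitude carries class information.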
