Joint Learning of Motion Estimation and Segmentation for Cardiac MR Image Sequences

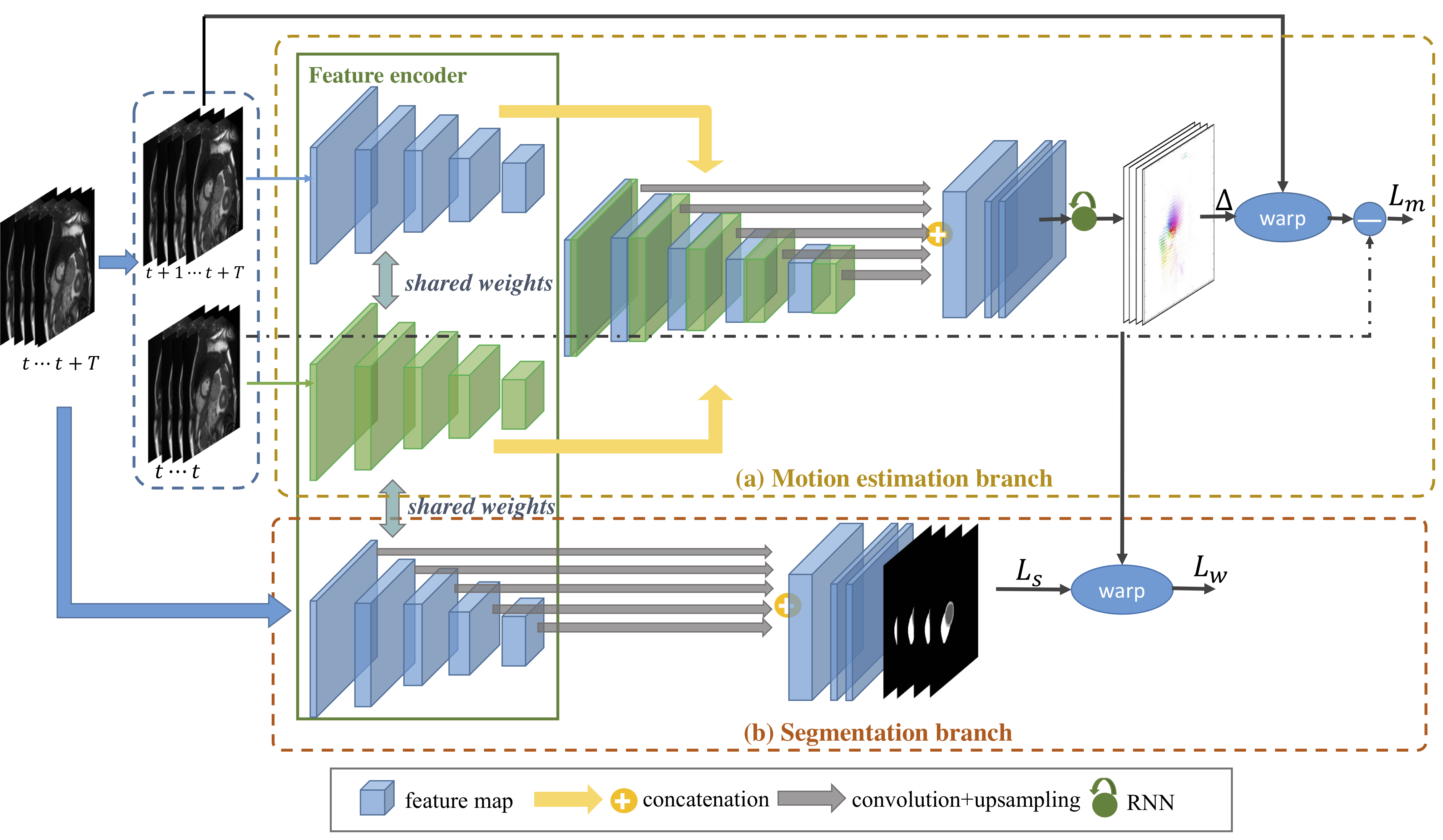

Cardiac motion estimation and segmentation play important roles in quantitatively assessing cardiac function and diagnosing cardiovascular diseases. In this paper, we propose a novel deep learning method for joint estimation of motion and segmentation from cardiac MR image sequences. The proposed network consists of two branches: a cardiac motion estimation branch, built on a novel unsupervised Siamese-style recurrent spatial transformer network, and a cardiac segmentation branch based on a fully convolutional network. In particular, a joint multi-scale feature encoder is learned by optimizing the segmentation branch and the motion estimation branch simultaneously. This enables weakly supervised segmentation by taking advantage of features that the motion estimation branch learns without supervision from a large amount of unannotated data. Experimental results on cardiac MR images from 220 subjects show that the two tasks are complementary when learned jointly and that the proposed models significantly outperform competing methods in terms of accuracy and speed.
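To make the joint training idea concrete, the following is a minimal sketch of how an unsupervised motion term and a supervised segmentation term might be combined into one objective. It is not the paper's implementation: the abstract does not give the loss definitions, so the 1D signals, the mean squared intensity difference, the cross-entropy term, the `weight` parameter, and all function names here are illustrative assumptions. The point it shows is that the motion term needs no labels, while the segmentation term is added only for frames that have annotations.

```python
import math


def warp_1d(signal, displacement):
    """Resample `signal` at positions i + displacement[i] (linear interpolation).

    A 1D stand-in for the resampling step of a spatial transformer (assumption).
    """
    n = len(signal)
    out = []
    for i in range(n):
        x = min(max(i + displacement[i], 0.0), n - 1.0)  # clamp to valid range
        lo = int(math.floor(x))
        hi = min(lo + 1, n - 1)
        w = x - lo
        out.append((1.0 - w) * signal[lo] + w * signal[hi])
    return out


def motion_loss(source, target, displacement):
    """Unsupervised term: mean squared intensity difference after warping."""
    warped = warp_1d(source, displacement)
    return sum((a - b) ** 2 for a, b in zip(warped, target)) / len(target)


def segmentation_loss(probs, labels):
    """Supervised term: mean cross-entropy over labelled pixels."""
    eps = 1e-12  # avoids log(0)
    return -sum(math.log(p[y] + eps) for p, y in zip(probs, labels)) / len(labels)


def joint_loss(source, target, displacement, probs, labels=None, weight=1.0):
    """Motion term always; segmentation term only when annotations exist.

    `weight` is a hypothetical balancing factor, not a value from the paper.
    """
    loss = motion_loss(source, target, displacement)
    if labels is not None:
        loss += weight * segmentation_loss(probs, labels)
    return loss
```

In this sketch, calling `joint_loss` with `labels=None` corresponds to an unannotated frame pair, which still contributes a training signal through the motion term. In a real network both terms would backpropagate into a shared encoder, which is how unannotated data could benefit the segmentation branch.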
