Keep it Simple: Image Statistics Matching for Domain Adaptation

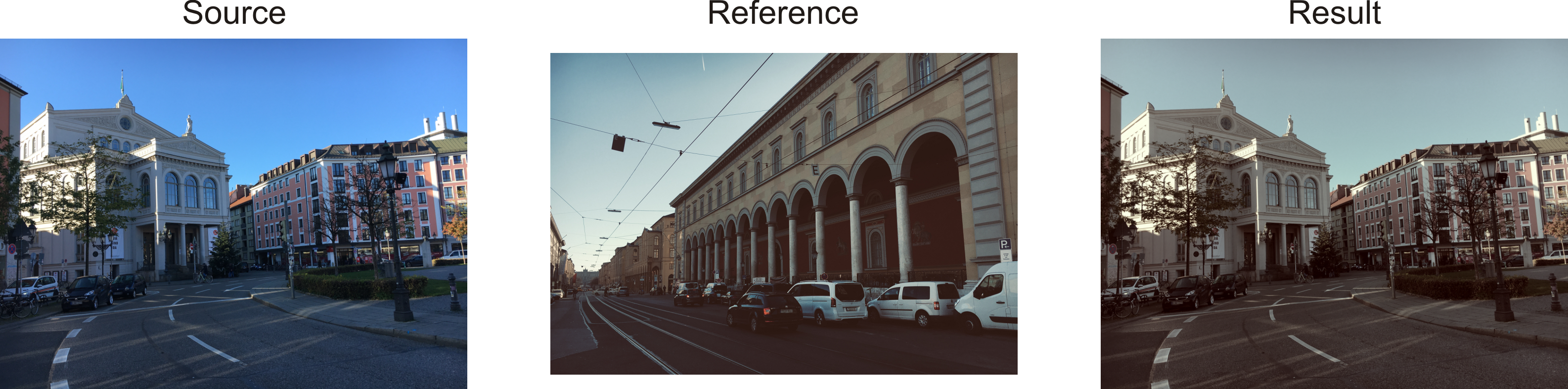

Applying an object detector that was neither trained nor fine-tuned on data close to the final application often leads to a substantial performance drop. To overcome this problem, the shift between source and target domains must be taken into account. Tackling this shift is known as Domain Adaptation (DA). In this work, we focus on unsupervised DA: maintaining detection accuracy across different data distributions when only unlabeled images of the target domain are available. Recent state-of-the-art methods try to reduce the domain gap with an adversarial training strategy, which improves performance but also increases the complexity of the training procedure. In contrast, we look at the problem from a new perspective and keep it simple by solely matching image statistics between source and target domain. We propose to align either the color histograms or the mean and covariance of the source images to those of the target domain. DA is thus accomplished without architectural add-ons or additional hyper-parameters. The benefit of both approaches is demonstrated by evaluating different domain shift scenarios on public data sets. Compared to recent methods, we achieve state-of-the-art performance with a much simpler training procedure. Additionally, we show that applying our techniques significantly reduces the amount of synthetic data needed to learn a general model and thus increases the value of simulation.
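To make the two alignment options more concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes images are `H x W x 3` arrays, that statistics are taken per image pair in the given color space, and that mean and covariance are aligned by whitening the source pixels and re-coloring them with the target covariance. The function names `match_mean_cov` and `match_histogram` are hypothetical and chosen only for illustration; the abstract does not specify these details.

```python
import numpy as np


def _sqrt_psd(mat, inverse=False, eps=1e-8):
    """Square root (or inverse square root) of a symmetric PSD matrix."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, eps, None)  # guard against near-singular covariances
    power = -0.5 if inverse else 0.5
    return (vecs * vals ** power) @ vecs.T


def match_mean_cov(source, target):
    """Shift and re-color source pixels so that their channel mean and
    covariance equal those of the target image (assumed variant)."""
    src = source.reshape(-1, source.shape[-1]).astype(np.float64)
    tgt = target.reshape(-1, target.shape[-1]).astype(np.float64)
    mu_s, mu_t = src.mean(axis=0), tgt.mean(axis=0)
    cov_s = np.cov(src, rowvar=False)
    cov_t = np.cov(tgt, rowvar=False)
    # Whiten with cov_s^(-1/2), then color with cov_t^(1/2).
    transform = _sqrt_psd(cov_t) @ _sqrt_psd(cov_s, inverse=True)
    out = (src - mu_s) @ transform.T + mu_t
    return out.reshape(source.shape)


def match_histogram(source, target):
    """Per-channel histogram matching via empirical CDFs (assumed variant)."""
    out = np.empty(source.shape, dtype=np.float64)
    for c in range(source.shape[-1]):
        s = source[..., c].ravel()
        t = target[..., c].ravel()
        _, s_idx, s_counts = np.unique(s, return_inverse=True, return_counts=True)
        t_vals, t_counts = np.unique(t, return_counts=True)
        s_cdf = np.cumsum(s_counts) / s.size
        t_cdf = np.cumsum(t_counts) / t.size
        # Map each source quantile to the target value at the same quantile.
        mapped = np.interp(s_cdf, t_cdf, t_vals)
        out[..., c] = mapped[s_idx].reshape(source.shape[:-1])
    return out
```

In a training pipeline, a transform of this kind would be applied to each labeled source image before it is fed to the detector, with an unlabeled target image supplying the reference statistics. Since the transform only changes pixel values, the bounding-box labels remain valid. For 8-bit images the result would additionally need to be clipped to the valid range.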


Cityscapes

KITTI

Foggy Cityscapes

Sim10k
