Learning Universal Adversarial Perturbations with Generative Models

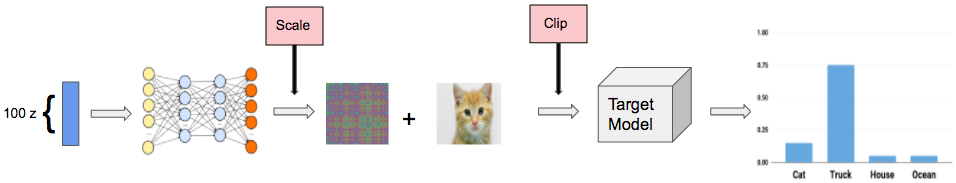

Neural networks are known to be vulnerable to adversarial examples: inputs that have been intentionally perturbed to remain visually similar to the source input but cause a misclassification. It was recently shown that, given a dataset and a classifier, there exist so-called universal adversarial perturbations: a single perturbation that causes a misclassification when applied to any input. In this work, we introduce universal adversarial networks, a generative network capable of fooling a target classifier when its generated output is added to a clean sample from a dataset. We show that this technique improves on known universal adversarial attacks.
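The abstract states the idea only at a high level: a generator maps noise to a bounded perturbation, and that one perturbation is added to every clean input to change the classifier's predictions. The sketch below illustrates this in pure Python under simplifying assumptions that are not taken from the paper. The target is a hypothetical 2-D linear classifier (`W`, `B`). The "generator" is a single linear layer with a `tanh` output scaled by `eps`, which keeps the perturbation inside an L-infinity ball, and it is trained on one fixed noise vector `z`. The hinge-style loss with margin `kappa` is an assumed surrogate. All names (`generate`, `train_generator`, `fooling_rate`, `add`) are hypothetical. The authors' architecture, loss, and norm constraint may differ.

```python
import math

# Hypothetical toy target: a fixed linear binary classifier f(x) = sign(W . x + B)
# on 2-D inputs, standing in for a real (much larger) target network.
W = [1.0, 1.0]
B = 0.0


def score(x):
    return sum(wi * xi for wi, xi in zip(W, x)) + B


def predict(x):
    return 1 if score(x) > 0 else -1


def add(x, delta):
    return [xi + di for xi, di in zip(x, delta)]


def generate(A, c, z, eps):
    """Hypothetical linear 'generator': delta = eps * tanh(A z + c).

    tanh keeps each component of delta in [-eps, eps] (an L-infinity bound).
    Returns the perturbation and the pre-activations used for backprop.
    """
    pre = [sum(a * zk for a, zk in zip(row, z)) + cj for row, cj in zip(A, c)]
    return [eps * math.tanh(p) for p in pre], pre


def fooling_rate(xs, delta):
    """Fraction of inputs whose predicted label changes once delta is added."""
    return sum(predict(x) != predict(add(x, delta)) for x in xs) / len(xs)


def train_generator(xs, ys, z, eps=0.15, kappa=0.1, lr=1.0, steps=200):
    """Gradient descent on the generator weights with the classifier frozen.

    Assumed surrogate loss: mean_i max(y_i * s_i, -kappa), where s_i is the
    classifier score on x_i + delta. Lowering each term pushes its input toward
    the wrong side of the boundary; inputs already past -kappa stop contributing.
    """
    d, k, n = len(W), len(z), len(xs)
    A = [[0.0] * k for _ in range(d)]  # zero init, so delta starts at 0
    c = [0.0] * d
    for _ in range(steps):
        delta, pre = generate(A, c, z, eps)
        # dLoss/d(delta): each still-active input adds y_i * W / n.
        grad_delta = [0.0] * d
        for x, y in zip(xs, ys):
            if y * score(add(x, delta)) > -kappa:
                for j in range(d):
                    grad_delta[j] += y * W[j] / n
        # Chain rule through delta_j = eps * tanh(pre_j), pre_j = A_j . z + c_j.
        for j in range(d):
            g = grad_delta[j] * eps * (1.0 - math.tanh(pre[j]) ** 2)
            for kk in range(k):
                A[j][kk] -= lr * g * z[kk]
            c[j] -= lr * g
    return A, c


# Usage: a few clean inputs, labelled with the classifier's own predictions.
xs = [(0.1, 0.05), (0.2, 0.0), (0.05, 0.1), (0.3, 0.2), (1.0, 1.0), (-1.0, -0.5)]
ys = [predict(x) for x in xs]
z = [1.0, 0.5]  # one fixed noise sample fed to the generator
eps = 0.15
A, c = train_generator(xs, ys, z, eps=eps)
delta, _ = generate(A, c, z, eps)  # a single perturbation shared by every input
print(fooling_rate(xs, [0.0, 0.0]))  # 0.0
print(fooling_rate(xs, delta))  # 0.5
```

Because the toy classifier is linear, any shared perturbation shifts every score by the same amount `W . delta`, whose magnitude stays below `2 * eps = 0.3`, so at most the three inputs with scores in (0, 0.3) can be flipped here.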

Results from the Paper

Ranked #8 on Graph Classification on NCI1 (using extra training data)

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Uses Extra Training Data | Benchmark |
|---|---|---|---|---|---|---|---|
| Graph Classification | NCI1 | DUGNN | Accuracy | 85.50% | #8 | Yes | |