Longitudinal Data and a Semantic Similarity Reward for Chest X-Ray Report Generation

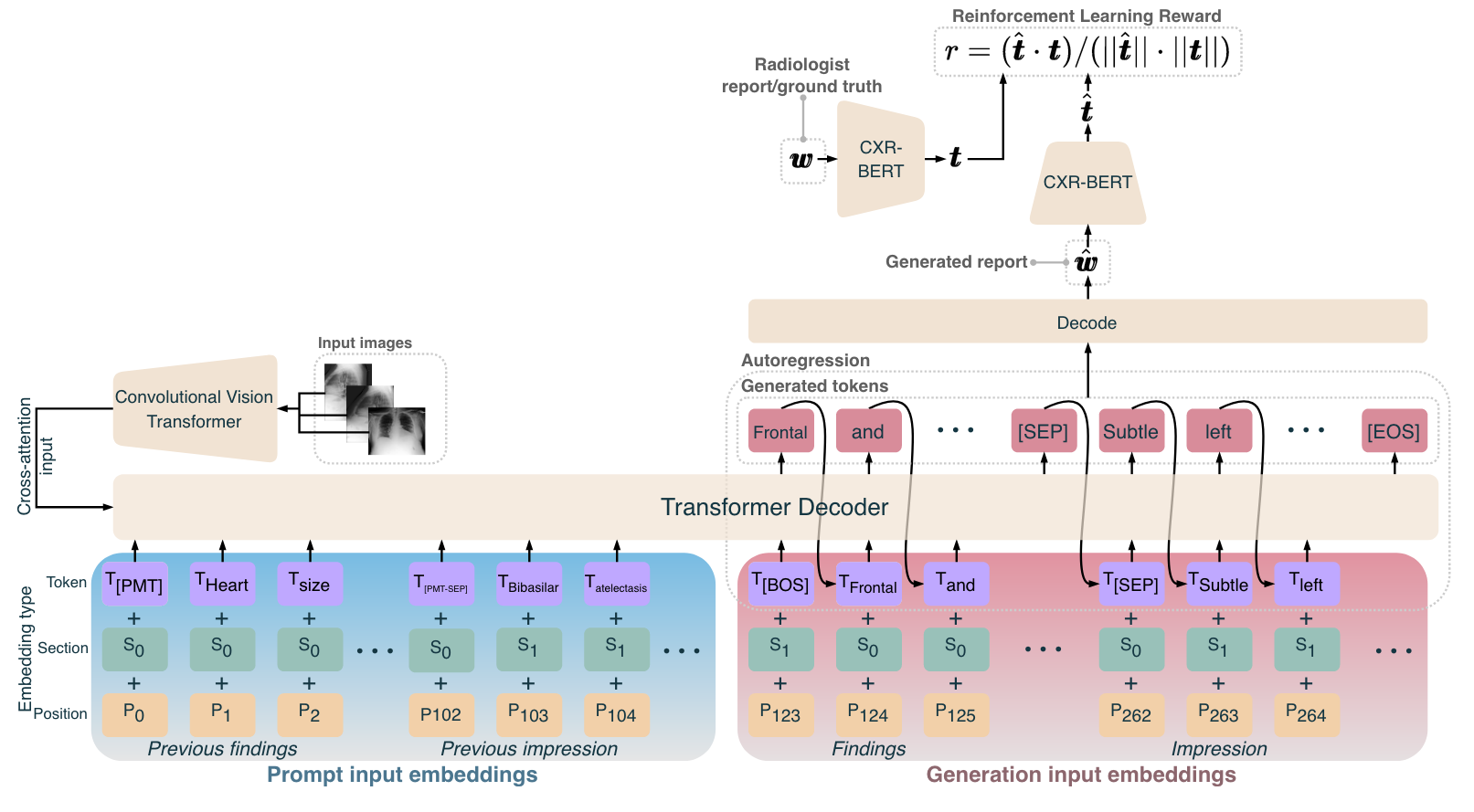

The burnout rate of radiologists is high, in part due to the large and ever-growing number of chest X-rays (CXRs) needing interpretation and reporting. Promisingly, automatic CXR report generation has the potential to aid radiologists with this laborious task and improve patient care. However, the diagnostic inaccuracy of current CXR report generators prevents them from being considered for clinical trials. To improve diagnostic accuracy, we propose a CXR report generator that integrates aspects of the radiologist workflow and is trained with our proposed reward for reinforcement learning. It imitates the radiologist workflow by conditioning on the longitudinal history available from a patient's previous CXR study, conditioning on multiple CXRs from a patient's study, and differentiating between report sections with section embeddings and separator tokens. Our reward for reinforcement learning leverages CXR-BERT, which captures the clinical semantic similarity between reports. Training with this reward forces our model to learn the clinical semantics of radiology reporting. We also highlight issues that excessive formatting introduces into the evaluation of a large portion of CXR report generation models in the literature. We conduct experiments on the publicly available MIMIC-CXR and IU X-ray datasets with metrics shown to be more closely correlated with radiologists' assessment of reporting. The results demonstrate that our model generates radiology reports that are quantitatively more aligned with those of radiologists than state-of-the-art models, such as those utilising large language models, reinforcement learning, and multi-task learning. Through this, our model brings CXR report generation one step closer to clinical trial consideration. Our Hugging Face checkpoint (https://huggingface.co/aehrc/cxrmate) and code (https://github.com/aehrc/cxrmate) are publicly available.
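
To make the idea of a semantic similarity reward concrete, the following is a minimal sketch and not the released implementation. It assumes the reward for a generated report is the cosine similarity between its embedding and the embedding of the radiologist's report; the abstract states only that the reward leverages CXR-BERT, so the exact formulation is an assumption. The names `toy_embed`, `cosine_similarity`, and `similarity_reward` are hypothetical, and `toy_embed` is a bag-of-words stand-in so that the example runs without downloading a model. In practice a CXR-BERT encoder would take its place.

```python
import math
from collections import Counter
from typing import Callable, Dict, List

# A sparse embedding: token -> weight. A real encoder would return a dense vector.
Embedding = Dict[str, float]


def toy_embed(report: str) -> Embedding:
    """Hypothetical stand-in for a CXR-BERT encoder (assumption, not the real model).

    It returns lowercase word counts, which is enough to exercise the reward logic.
    """
    return {token: float(count) for token, count in Counter(report.lower().split()).items()}


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Cosine similarity between two sparse embeddings; 0.0 if either is empty."""
    dot = sum(weight * b.get(token, 0.0) for token, weight in a.items())
    norm_a = math.sqrt(sum(weight * weight for weight in a.values()))
    norm_b = math.sqrt(sum(weight * weight for weight in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_reward(
    generated: List[str],
    references: List[str],
    embed: Callable[[str], Embedding] = toy_embed,
) -> List[float]:
    """One reward per (generated report, radiologist report) pair."""
    if len(generated) != len(references):
        raise ValueError("generated and references must have the same length")
    return [cosine_similarity(embed(g), embed(r)) for g, r in zip(generated, references)]


if __name__ == "__main__":
    rewards = similarity_reward(
        ["no acute cardiopulmonary process", "small left pleural effusion"],
        ["no acute cardiopulmonary process", "normal heart size"],
    )
    print(rewards)
```

In a reinforcement learning setup, rewards like these would be computed for sampled reports and used to weight the policy gradient, so that reports whose embeddings lie closer to the radiologist's report are reinforced.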

CheXpert