Meta-Learning based Degradation Representation for Blind Super-Resolution

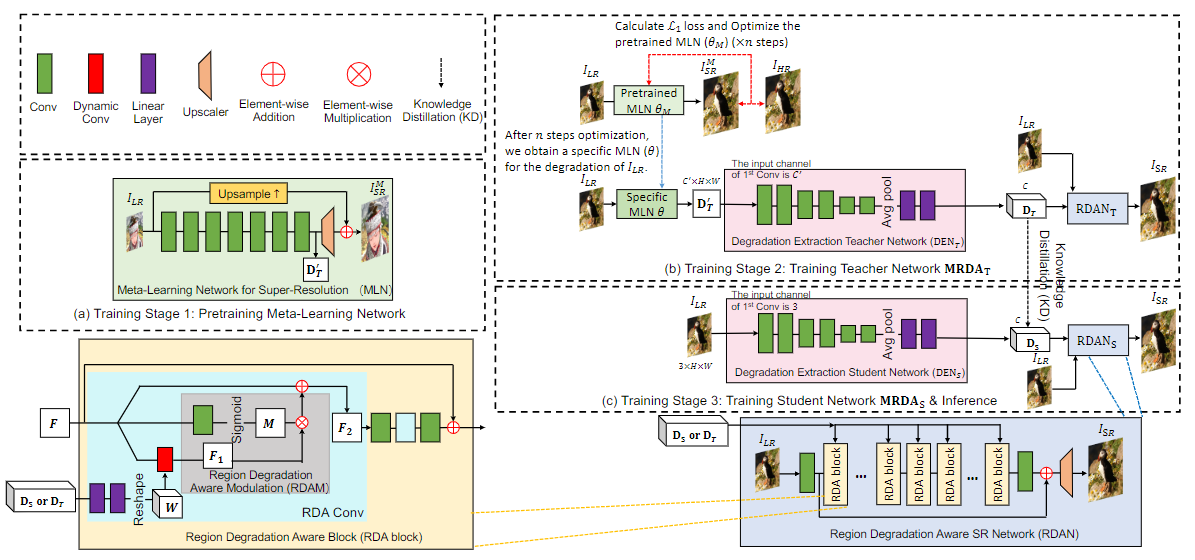

Most CNN-based super-resolution (SR) methods assume that the degradation is known (e.g., bicubic). These methods suffer a severe performance drop when the actual degradation differs from this assumption. Some approaches therefore train SR networks on complex combinations of multiple degradations to cover the real degradation space. When adapting to multiple unknown degradations, introducing an explicit degradation estimator can improve SR performance. However, previous explicit degradation estimation methods usually predict Gaussian blur under the supervision of ground-truth blur kernels, and estimation errors may lead to SR failure. It is therefore necessary to design a method that can extract an implicit, discriminative degradation representation. To this end, we propose a Meta-Learning based Region Degradation Aware SR Network (MRDA), which comprises a Meta-Learning Network (MLN), a Degradation Extraction Network (DEN), and a Region Degradation Aware SR Network (RDAN). To handle the lack of ground-truth degradation, we use the MLN to rapidly adapt to the specific complex degradation after several iterations and extract implicit degradation information. Subsequently, a teacher network MRDA$_{T}$ is designed to further utilize the degradation information extracted by the MLN for SR. However, the MLN requires iterating on paired low-resolution (LR) and corresponding high-resolution (HR) images, which are unavailable in the inference phase. Therefore, we adopt knowledge distillation (KD) so that the student network learns to extract the same implicit degradation representation (IDR) as the teacher directly from LR images.
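The distillation step described above can be illustrated with a minimal sketch. The abstract does not state which loss is used, so the L1 distance between representations, the L1 reconstruction term, the `kd_weight` parameter, and both function names are assumptions made purely for illustration, not the authors' implementation.

```python
import numpy as np


def distillation_loss(student_idr, teacher_idr):
    """Mean absolute difference between student and teacher representations.

    Assumption: an L1 distance is used; the abstract does not specify the loss.
    """
    student_idr = np.asarray(student_idr, dtype=np.float64)
    teacher_idr = np.asarray(teacher_idr, dtype=np.float64)
    if student_idr.shape != teacher_idr.shape:
        raise ValueError("representations must have the same shape")
    return float(np.mean(np.abs(student_idr - teacher_idr)))


def student_objective(sr, hr, student_idr, teacher_idr, kd_weight=1.0):
    """Hypothetical student objective: L1 reconstruction plus weighted KD term."""
    sr = np.asarray(sr, dtype=np.float64)
    hr = np.asarray(hr, dtype=np.float64)
    reconstruction = float(np.mean(np.abs(sr - hr)))
    return reconstruction + kd_weight * distillation_loss(student_idr, teacher_idr)
```

In this sketch the teacher representation would come from a network that sees paired LR and HR images during training, while the student representation is computed from the LR image alone, so only the student is needed at inference.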

BSD

DIV2K

Urban100

Set14

Set5

Manga109

RealSRSet