MoTCoder: Elevating Large Language Models with Modular of Thought for Challenging Programming Tasks

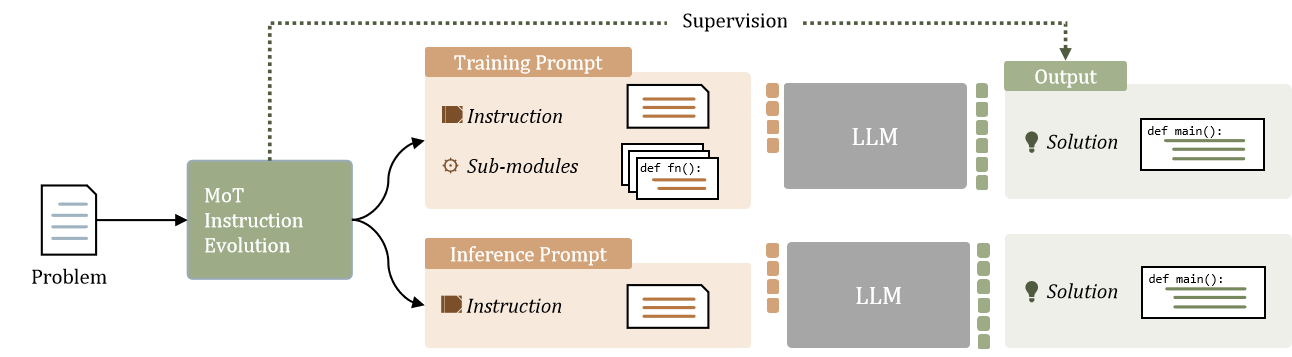

Large Language Models (LLMs) have showcased impressive capabilities in handling straightforward programming tasks. However, their performance tends to falter when confronted with more challenging programming problems. We observe that conventional models often generate solutions as monolithic code blocks, restricting their effectiveness in tackling intricate questions. To overcome this limitation, we present Modular-of-Thought Coder (MoTCoder). We introduce a pioneering framework for MoT instruction tuning, designed to promote the decomposition of tasks into logical sub-tasks and sub-modules. Our investigations reveal that, through the cultivation and utilization of sub-modules, MoTCoder significantly improves both the modularity and correctness of the generated solutions, leading to substantial relative pass@1 improvements of 12.9% on APPS and 9.43% on CodeContests. Our code is available at https://github.com/dvlab-research/MoTCoder.
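To make the contrast between a monolithic solution and a modular one concrete, here is a minimal sketch in Python. The task (summing the prime palindromes in a list) and all function names are hypothetical illustrations, not taken from the paper or its training data; the sketch only shows the style of decomposition into sub-modules that the abstract describes.

```python
# Hypothetical task (not from the paper): given a list of integers,
# return the sum of those that are both prime and palindromic.

def solve_monolithic(nums):
    """Everything inlined in one block: primality test, palindrome test, sum."""
    total = 0
    for n in nums:
        if n < 2:
            continue
        prime = True
        d = 2
        while d * d <= n:
            if n % d == 0:
                prime = False
                break
            d += 1
        if prime and str(n) == str(n)[::-1]:
            total += n
    return total


# Modular version: each sub-task becomes a small, separately testable function.

def is_prime(n):
    """Return True if n is a prime number (trial division)."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def is_palindrome(n):
    """Return True if the decimal digits of n read the same both ways."""
    s = str(n)
    return s == s[::-1]


def solve_modular(nums):
    """Compose the sub-modules to solve the full task."""
    return sum(n for n in nums if is_prime(n) and is_palindrome(n))
```

Both versions compute the same result; the modular one exposes `is_prime` and `is_palindrome` as units that can be checked and reused independently.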


Results from the Paper

Ranked #1 on Code Generation on CodeContests (Test Set pass@1 metric)

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Code Generation | APPS | MoTCoder-15B | Introductory Pass@1 | 33.80 | #2 |
| Code Generation | APPS | MoTCoder-15B | Interview Pass@1 | 19.70 | #1 |
| Code Generation | APPS | MoTCoder-15B | Competition Pass@1 | 11.09 | #1 |
| Code Generation | CodeContests | MoTCoder-15B | Test Set pass@1 | 2.39 | #1 |
| Code Generation | CodeContests | MoTCoder-15B | Test Set pass@5 | 7.69 | #1 |
| Code Generation | CodeContests | MoTCoder-15B | Val Set pass@1 | 6.18 | #1 |
| Code Generation | CodeContests | MoTCoder-15B | Val Set pass@5 | 12.73 | #1 |
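For readers unfamiliar with the pass@k metrics in the table, the following sketch implements the unbiased pass@k estimator that is widely used for code generation benchmarks: with `n` samples per problem of which `c` pass all tests, pass@k is estimated as 1 - C(n-c, k) / C(n, k). Whether the numbers above were computed with exactly this estimator is an assumption; the function name and arguments are illustrative.

```python
from math import comb


def pass_at_k(n, c, k):
    """Estimate pass@k for one problem.

    n: number of generated samples
    c: number of samples that pass all tests
    k: number of samples the metric is allowed to draw
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # If fewer than k samples fail, every draw of k contains a passing one.
    if n - c < k:
        return 1.0
    # Probability that a random draw of k samples contains no passing sample,
    # subtracted from 1.
    return 1.0 - comb(n - c, k) / comb(n, k)
```

A benchmark-level score is then the mean of `pass_at_k` over all problems. For `k = 1` the estimator reduces to the fraction of passing samples, `c / n`.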
