Motion Fused Frames: Data Level Fusion Strategy for Hand Gesture Recognition

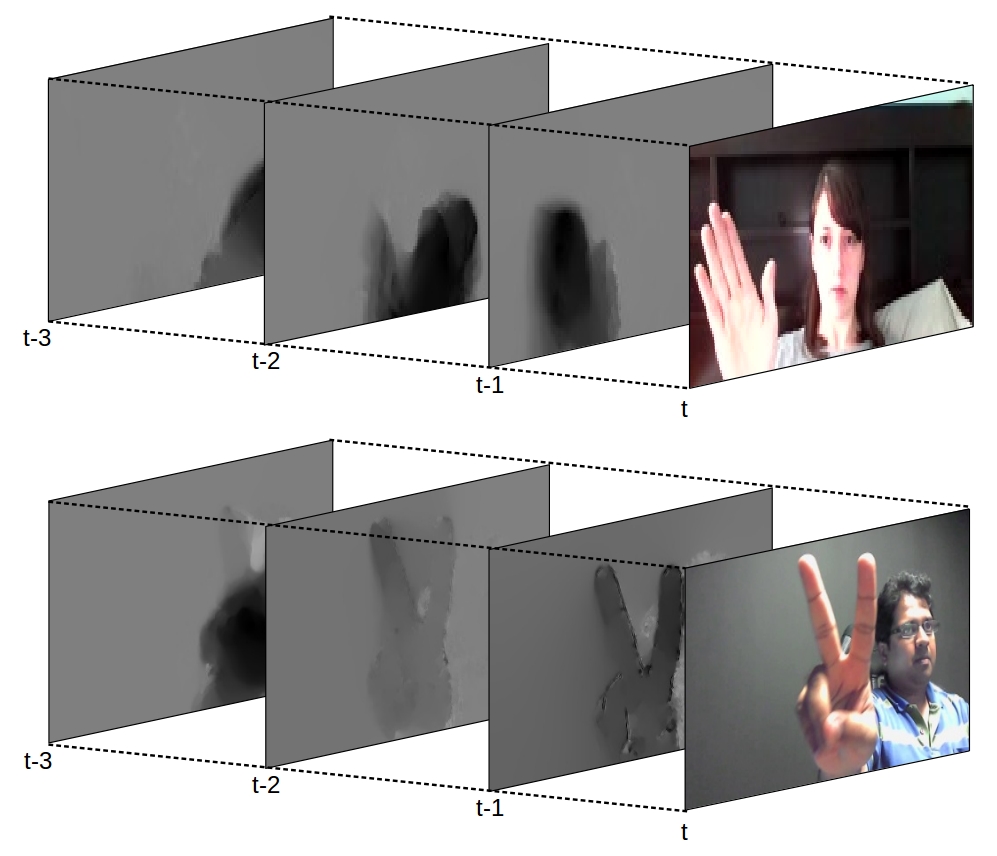

Acquiring spatio-temporal states of an action is the most crucial step for action classification. In this paper, we propose a data level fusion strategy, Motion Fused Frames (MFFs), designed to fuse motion information into static images so that they better represent the spatio-temporal states of an action. MFFs can be used as input to any deep learning architecture with very little modification to the network. We evaluate MFFs on hand gesture recognition tasks using three video datasets - Jester, ChaLearn LAP IsoGD and NVIDIA Dynamic Hand Gesture Datasets - which require capturing long-term temporal relations of hand movements. Our approach obtains very competitive performance on the Jester and ChaLearn benchmarks, with classification accuracies of 96.28% and 57.4%, respectively, while achieving state-of-the-art performance with 84.7% accuracy on the NVIDIA benchmark.
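To make the idea of data level fusion concrete, the following is a minimal sketch, not the authors' implementation. It assumes that "fusing motion information into static images" means stacking optical flow frames onto an RGB frame along the channel axis. The abstract does not specify this. The function name `fuse_motion_frames`, the two-channel `(dx, dy)` flow layout, and the channel ordering are all hypothetical choices made for illustration.

```python
import numpy as np


def fuse_motion_frames(rgb, flows):
    """Stack optical flow frames onto a static RGB frame (illustrative only).

    rgb:   array of shape (H, W, 3), the static image.
    flows: sequence of arrays of shape (H, W, 2), assumed to hold the
           horizontal and vertical flow components of nearby frames.
    Returns an array of shape (H, W, 3 + 2 * len(flows)).
    """
    rgb = np.asarray(rgb, dtype=np.float32)
    flows = [np.asarray(f, dtype=np.float32) for f in flows]

    expected = rgb.shape[:2] + (2,)
    for f in flows:
        if f.shape != expected:
            raise ValueError(f"flow shape {f.shape} does not match {expected}")

    # Channel ordering (RGB first, then flows) is an arbitrary assumption.
    return np.concatenate([rgb] + flows, axis=-1)


# Example: one 4x5 RGB frame fused with three flow frames -> 9 channels.
rgb = np.zeros((4, 5, 3))
flows = [np.ones((4, 5, 2)) for _ in range(3)]
mff = fuse_motion_frames(rgb, flows)
print(mff.shape)
```

Under this assumption, the only change a network would need is a first layer that accepts `3 + 2 * len(flows)` input channels instead of 3, which is one plausible reading of "very little modification to the network".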

NVGesture

Jester (Gesture Recognition)