Multi-Domain Active Learning: Literature Review and Comparative Study

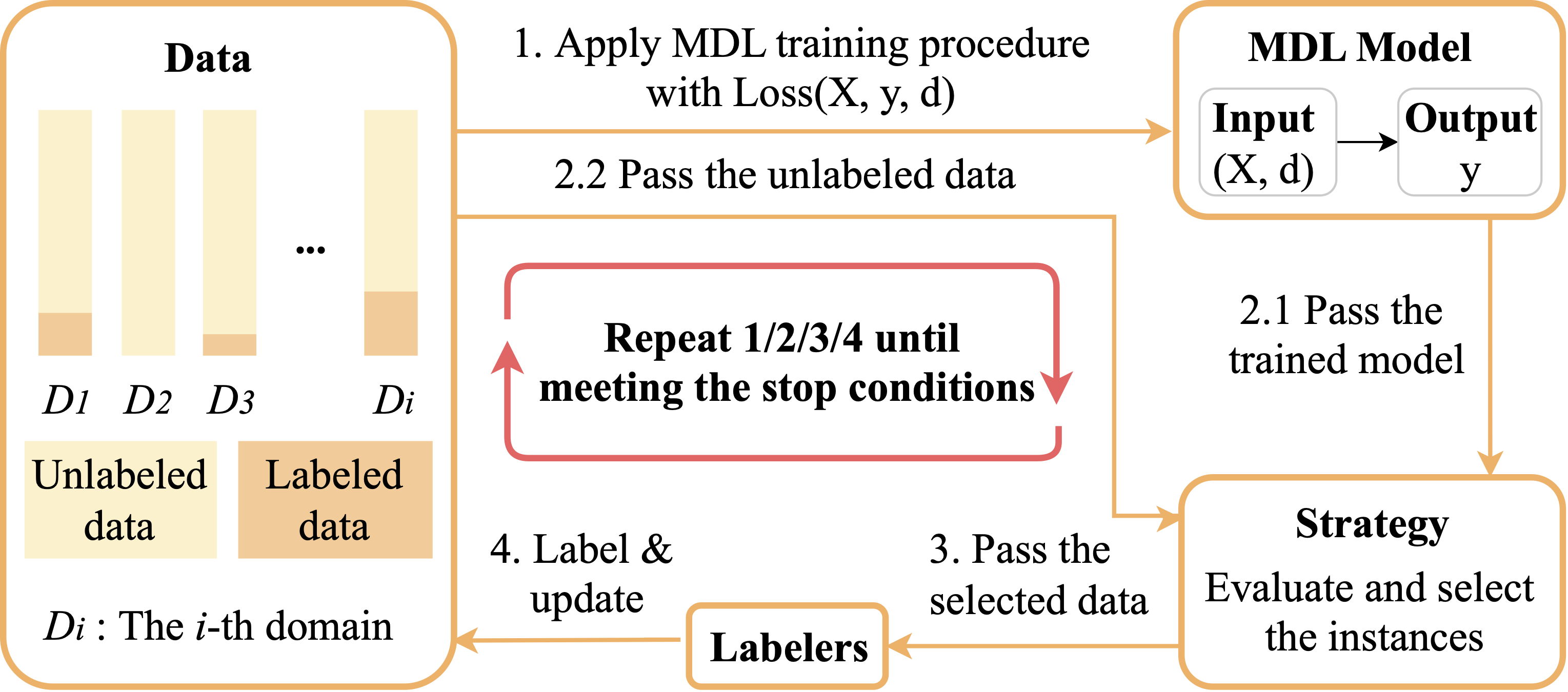

Multi-domain learning (MDL) refers to learning a set of models simultaneously, with each model specialized to perform a task in a particular domain. MDL generally demands a high labeling effort, since human experts must label data for every domain. Active learning (AL) can reduce this effort by querying labels only for the most informative data. The resulting paradigm is termed multi-domain active learning (MDAL). In this work, we provide an exhaustive review of the literature relevant to MDAL, covering AL, cross-domain information-sharing schemes, and cross-domain instance evaluation approaches. We find that the few studies conducted directly on MDAL cannot serve as off-the-shelf solutions for more general MDAL tasks. To fill this gap, we construct an MDAL pipeline and present a comprehensive comparative study of thirty algorithms, formed by combining six representative MDL models with five commonly used AL strategies. We evaluate the algorithms on six datasets involving textual and visual classification tasks. In most cases, AL brings notable improvements to MDL, and the naive BvSB (best vs. second best) uncertainty strategy performs competitively with state-of-the-art AL strategies. Moreover, BvSB combined with the MAN (multinomial adversarial networks) model consistently achieves top or above-average performance on all the datasets. We also qualitatively analyze the behavior of the well-performing strategies and models, shedding light on their superior performance in the comparison. Finally, we recommend BvSB with the MAN model for MDAL applications, given its good performance in the experiments.
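To make the BvSB criterion concrete, the following is a minimal sketch of how a best-vs-second-best margin could be computed from class probabilities and used to pick a query batch. It is an illustration under stated assumptions, not the paper's implementation: the function names `bvsb_margin` and `select_bvsb`, the `budget` parameter, and the toy probabilities are all hypothetical, and the sketch ignores how the labeling budget is split across domains.

```python
import numpy as np

def bvsb_margin(probs):
    """Return the best-vs-second-best margin for each row of class probabilities.

    A small margin means the two most likely classes are nearly tied,
    so the model is uncertain about that instance.
    """
    probs = np.asarray(probs, dtype=float)
    # Sort each row in ascending order; the last two columns hold the top-2 probabilities.
    top2 = np.sort(probs, axis=1)[:, -2:]
    return top2[:, 1] - top2[:, 0]

def select_bvsb(probs, budget):
    """Return indices of the `budget` instances with the smallest margins (hypothetical helper)."""
    margins = bvsb_margin(probs)
    return np.argsort(margins, kind="stable")[:budget]

# Toy predicted probabilities for four unlabeled instances over three classes (made up).
probs = np.array([
    [0.90, 0.05, 0.05],
    [0.40, 0.35, 0.25],
    [0.50, 0.49, 0.01],
    [0.60, 0.30, 0.10],
])
picked = select_bvsb(probs, budget=2)
print(picked)
```

In this toy pool the third and second instances have the narrowest gaps between their top two classes, so they would be sent for labeling first. In a multi-domain setting the same score could be computed per domain from each domain's model outputs, though how the budget is allocated across domains is a separate design choice.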


MNIST

Office-Home

PACS

MNIST-M
