Clustered Federated Learning via Generalized Total Variation Minimization

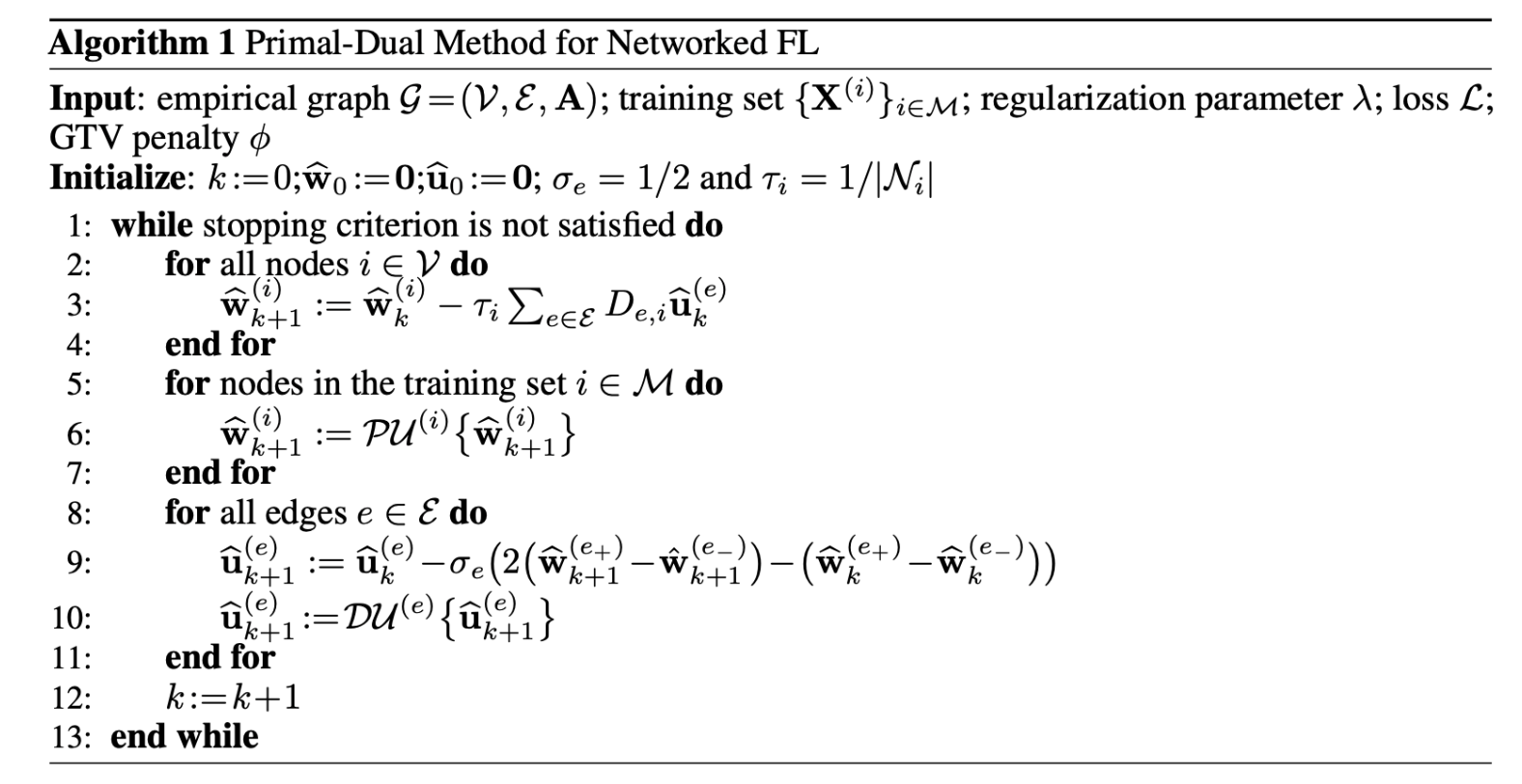

We study optimization methods to train local (or personalized) models for decentralized collections of local datasets with an intrinsic network structure. This network structure arises from domain-specific notions of similarity between local datasets. Examples of such notions include spatio-temporal proximity, statistical dependencies, and functional relations. Our main conceptual contribution is to formulate federated learning as generalized total variation (GTV) minimization. This formulation unifies and considerably extends existing federated learning methods. It is highly flexible and can be combined with a broad range of parametric models, including generalized linear models and deep neural networks. Our main algorithmic contribution is a fully decentralized federated learning algorithm, obtained by applying an established primal-dual method to solve GTV minimization. The algorithm can be implemented as message passing and is robust against inexact computations that arise from limited computational resources, such as processing time or bandwidth. Our main analytic contribution is an upper bound on the deviation between the local model parameters learnt by our algorithm and those learnt by an oracle-based clustered federated learning method. This upper bound reveals conditions on the local models and on the network structure of the local datasets under which GTV minimization is able to pool (nearly) homogeneous local datasets.
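To make the GTV formulation concrete, the following is a minimal sketch under stated assumptions, not the algorithm of the paper. It assumes linear local models with squared-error loss and a squared Euclidean penalty on parameter differences across edges, and it uses plain gradient descent in place of the primal-dual method mentioned above. The function names, the edge list, the edge weights, and the regularization strength `lam` are all hypothetical choices made for illustration.

```python
import numpy as np

def gtv_objective(W, X, y, edges, weights, lam):
    """Sum of local losses plus lam times a weighted variation penalty.

    W: (n_nodes, d) array, one parameter vector per local dataset.
    X, y: lists of local feature matrices (m_i, d) and label vectors (m_i,).
    edges, weights: assumed similarity graph as (i, j) pairs with weights >= 0.
    """
    # Local squared-error loss of each node on its own dataset.
    local = sum(np.mean((X[i] @ W[i] - y[i]) ** 2) for i in range(len(X)))
    # Penalty on parameter differences across edges (squared norm is an
    # assumption here; other penalty functions are possible).
    tv = sum(a * np.sum((W[i] - W[j]) ** 2) for (i, j), a in zip(edges, weights))
    return local + lam * tv

def gtv_gradient_step(W, X, y, edges, weights, lam, lr):
    """One gradient descent step on the objective above (not primal-dual)."""
    G = np.zeros_like(W)
    for i in range(len(X)):
        residual = X[i] @ W[i] - y[i]
        G[i] = 2.0 * X[i].T @ residual / len(y[i])
    for (i, j), a in zip(edges, weights):
        diff = 2.0 * lam * a * (W[i] - W[j])
        G[i] += diff  # pulls node i towards node j
        G[j] -= diff  # pulls node j towards node i
    return W - lr * G
```

Each node only needs its own data and the current parameters of its neighbours to compute its row of the gradient, which is why updates of this kind lend themselves to a message-passing implementation. A larger `lam` couples connected nodes more strongly, so well-connected nodes are pushed towards shared parameters.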


MNIST
