Neural Random Subspace

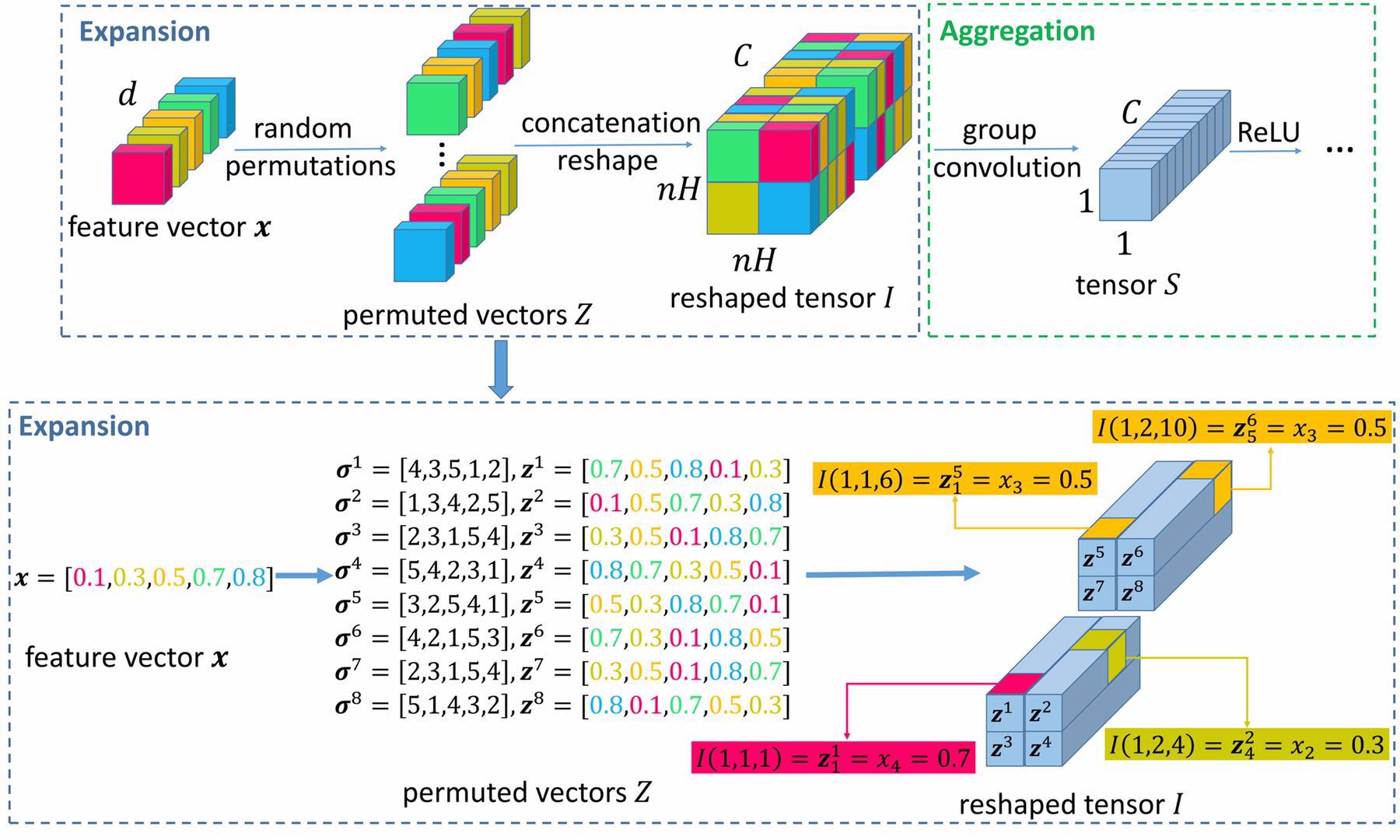

The random subspace method, a pillar of random forests, is known for making precise and robust predictions. However, there is not yet a straightforward way to combine it with deep learning. In this paper, we therefore propose Neural Random Subspace (NRS), a novel deep learning based random subspace method. In contrast to previous forest methods, NRS enjoys the benefits of end-to-end, data-driven representation learning, as well as pervasive support from deep learning software and hardware platforms, and hence achieves faster inference and higher accuracy. Furthermore, as a non-linear component that can be embedded into Convolutional Neural Networks (CNNs), NRS learns non-linear feature representations in CNNs more efficiently than previous higher-order pooling methods, producing good results with a negligible increase in parameters, floating point operations (FLOPs) and actual running time. Compared with random subspaces, random forests and gradient boosting decision trees (GBDTs), NRS achieves superior performance on 35 machine learning datasets. Moreover, on both 2D image and 3D point cloud recognition tasks, integrating NRS with CNN architectures yields consistent improvements at minor extra cost. Code is available at https://github.com/CupidJay/NRS_pytorch.
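For readers unfamiliar with the classical random subspace method that the abstract builds on, the following is a minimal sketch of that baseline idea only; it is not the NRS architecture and is not taken from the linked repository. Each base learner is trained on a random subset of the feature columns, and predictions are combined by majority vote. The nearest-centroid base learner, the function names `fit_random_subspace` and `predict_random_subspace`, and the toy data are all assumptions chosen for brevity.

```python
import numpy as np

def fit_random_subspace(X, y, n_learners=25, subspace_size=2, seed=0):
    """Train nearest-centroid learners, each on a random subset of features."""
    rng = np.random.default_rng(seed)
    classes = np.unique(y)
    ensemble = []
    for _ in range(n_learners):
        # Each learner only sees a random subset of the feature columns.
        feats = rng.choice(X.shape[1], size=subspace_size, replace=False)
        # Base learner (an assumption for this sketch): one centroid per class.
        centroids = np.stack([X[y == c][:, feats].mean(axis=0) for c in classes])
        ensemble.append((feats, centroids))
    return classes, ensemble

def predict_random_subspace(model, X):
    """Combine the per-subspace predictions by majority vote."""
    classes, ensemble = model
    votes = np.zeros((X.shape[0], len(classes)), dtype=int)
    rows = np.arange(X.shape[0])
    for feats, centroids in ensemble:
        # Squared distance from each sample to each class centroid in this subspace.
        diff = X[:, feats][:, None, :] - centroids[None, :, :]
        dist = (diff ** 2).sum(axis=2)
        votes[rows, dist.argmin(axis=1)] += 1
    return classes[votes.argmax(axis=1)]

# Toy data (hypothetical): two Gaussian blobs in 6 dimensions.
data_rng = np.random.default_rng(42)
X = data_rng.normal(size=(200, 6))
y = np.repeat([0, 1], 100)
X[y == 1] += 4.0

model = fit_random_subspace(X, y)
pred = predict_random_subspace(model, X)
accuracy = (pred == y).mean()
print(f"training accuracy: {accuracy:.3f}")
```

In this sketch the feature subsets are fixed at random and the learners are trained independently, which is the setting the abstract contrasts with end-to-end, data-driven representation learning.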

CIFAR-10

CIFAR-100

ModelNet

Stanford Cars

FGVC-Aircraft
