Maximum Likelihood Training of Score-Based Diffusion Models

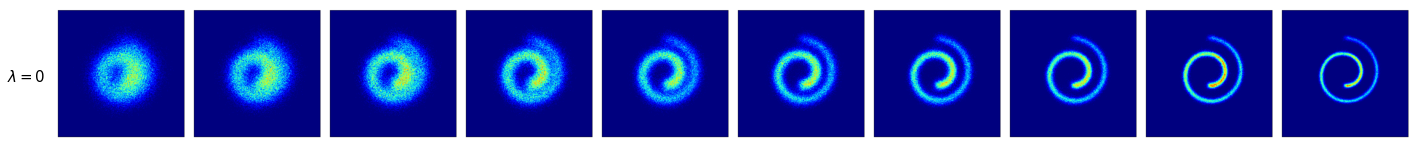

Score-based diffusion models synthesize samples by reversing a stochastic process that diffuses data to noise, and are trained by minimizing a weighted combination of score matching losses. The log-likelihood of score-based diffusion models can be tractably computed through a connection to continuous normalizing flows, but log-likelihood is not directly optimized by the weighted combination of score matching losses. We show that for a specific weighting scheme, the objective upper bounds the negative log-likelihood, thus enabling approximate maximum likelihood training of score-based diffusion models. We empirically observe that maximum likelihood training consistently improves the likelihood of score-based diffusion models across multiple datasets, stochastic processes, and model architectures. Our best models achieve negative log-likelihoods of 2.83 and 3.76 bits/dim on CIFAR-10 and ImageNet 32x32 without any data augmentation, on a par with state-of-the-art autoregressive models on these tasks.
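The specific weighting referred to above is the likelihood weighting λ(t) = g(t)², where g(t) is the diffusion coefficient of the SDE. Below is a minimal sketch of the weighted objective under that choice. It assumes the variance-preserving (VP) SDE with a linear noise schedule, for which g(t)² = β(t). It estimates the denoising form of score matching, which differs from the score matching loss only by a constant that does not depend on the model. The schedule constants `BETA_MIN`/`BETA_MAX`, the cutoff `eps`, and all function names are illustrative assumptions, not the paper's released code.

```python
import numpy as np

# Assumed linear VP SDE schedule: dx = -0.5 * beta(t) x dt + sqrt(beta(t)) dw.
# BETA_MIN / BETA_MAX are illustrative values, not taken from the abstract.
BETA_MIN, BETA_MAX = 0.1, 20.0


def beta(t):
    """Noise rate beta(t); for the VP SDE the squared diffusion coefficient g(t)^2 equals beta(t)."""
    return BETA_MIN + t * (BETA_MAX - BETA_MIN)


def vp_kernel(t):
    """Return alpha(t), sigma(t) such that x_t | x_0 ~ N(alpha(t) x_0, sigma(t)^2 I)."""
    integral = BETA_MIN * t + 0.5 * t**2 * (BETA_MAX - BETA_MIN)  # int_0^t beta(s) ds
    alpha = np.exp(-0.5 * integral)
    sigma = np.sqrt(1.0 - np.exp(-integral))
    return alpha, sigma


def likelihood_weighted_dsm_loss(score_fn, x0, rng, eps=1e-5, T=1.0):
    """Hypothetical helper: Monte Carlo estimate of
    0.5 * int_eps^T E[ g(t)^2 * || score_fn(x_t, t) - grad log p_0t(x_t | x_0) ||^2 ] dt
    (denoising form of weighted score matching)."""
    n = x0.shape[0]
    t = rng.uniform(eps, T, size=n)            # one diffusion time per example
    z = rng.standard_normal(x0.shape)          # Gaussian perturbation noise
    alpha, sigma = vp_kernel(t)
    xt = alpha[:, None] * x0 + sigma[:, None] * z
    target = -z / sigma[:, None]               # score of the Gaussian kernel p_0t
    sq_err = np.sum((score_fn(xt, t) - target) ** 2, axis=1)
    weight = beta(t)                           # likelihood weighting lambda(t) = g(t)^2
    return 0.5 * (T - eps) * np.mean(weight * sq_err)


# Toy check: with x0 ~ N(0, I), every VP marginal p_t is also N(0, I),
# so the exact score is s(x, t) = -x.
x0 = np.random.default_rng(0).standard_normal((10_000, 2))


def exact_score(x, t):
    return -x


def zero_score(x, t):
    return np.zeros_like(x)


# Same seed for both calls, so both losses use identical t and z.
loss_exact = likelihood_weighted_dsm_loss(exact_score, x0, np.random.default_rng(1))
loss_zero = likelihood_weighted_dsm_loss(zero_score, x0, np.random.default_rng(1))
print(loss_exact, loss_zero)
```

Even the exact score gives a nonzero loss here, because the denoising form carries a model-independent constant offset. It should still come out lower than the all-zeros score. In practice `score_fn` would be a neural network trained by minimizing this estimate with a stochastic optimizer.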

Published at NeurIPS 2021.

Results from the Paper

Ranked #6 on Image Generation on ImageNet 32x32 (bpd metric)
