PADS: Policy-Adapted Sampling for Visual Similarity Learning

Learning visual similarity requires learning relations, typically between triplets of images. Although triplet approaches are powerful, their computational complexity mostly limits training to a subset of all possible training triplets. Thus, sampling strategies that decide when to use which training sample during learning are crucial. Currently, the prominent paradigm is fixed or curriculum sampling strategies that are predefined before training starts. However, the problem truly calls for a sampling process that adjusts based on the actual state of the similarity representation during training. We therefore employ reinforcement learning and have a teacher network adjust the sampling distribution based on the current state of the learner network, which represents visual similarity. Experiments on benchmark datasets using standard triplet-based losses show that our adaptive sampling strategy significantly outperforms fixed sampling strategies. Moreover, although our adaptive sampling is only applied on top of basic triplet-learning frameworks, we reach results competitive with state-of-the-art approaches that employ diverse additional learning signals or strong ensemble architectures. Code is available at https://github.com/Confusezius/CVPR2020_PADS.
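To make the idea of an adjustable sampling distribution more concrete, the following is a minimal sketch and not the authors' implementation. It assumes that negatives are grouped into bins by their distance to the anchor, that each bin carries a sampling probability, and that some policy (not modelled here) emits one action per bin to shrink, keep, or grow that probability. The bin edges, the step size `alpha`, and all function names are hypothetical and chosen only for illustration.

```python
import random


def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def adjust_distribution(probs, actions, alpha=0.2):
    """Apply one multiplicative adjustment per bin, then renormalize.

    actions[k] is -1 (shrink), 0 (keep) or +1 (grow). In an adaptive
    setup these would come from a teacher policy; here they are given.
    """
    scaled = [p * (1.0 + alpha * a) for p, a in zip(probs, actions)]
    return normalize(scaled)


def bin_index(distance, edges):
    """Return k such that edges[k] <= distance < edges[k + 1], else None."""
    for k in range(len(edges) - 1):
        if edges[k] <= distance < edges[k + 1]:
            return k
    return None


def sample_negative(anchor_dists, probs, edges, rng):
    """Draw one candidate index, weighted by the probability of its bin."""
    weights = []
    for d in anchor_dists:
        k = bin_index(d, edges)
        weights.append(probs[k] if k is not None else 0.0)
    if sum(weights) == 0:
        # No candidate falls into a bin with mass: fall back to uniform.
        return rng.randrange(len(anchor_dists))
    return rng.choices(range(len(anchor_dists)), weights=weights, k=1)[0]


# Hypothetical setup: four distance bins with a uniform starting distribution.
edges = [0.0, 0.5, 1.0, 1.5, 2.0]
probs = [0.25, 0.25, 0.25, 0.25]

# Example action: favour the closest bin, de-emphasize the farthest one.
probs = adjust_distribution(probs, actions=[1, 0, 0, -1])

rng = random.Random(0)
negative = sample_negative([0.2, 1.7, 0.8], probs, edges, rng)
```

A fixed strategy would keep `probs` constant for the whole run, whereas an adaptive one would call something like `adjust_distribution` repeatedly as the learner's state changes. How the actions are chosen and rewarded is the reinforcement learning part, which this sketch deliberately leaves out.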

CVPR 2020

Datasets

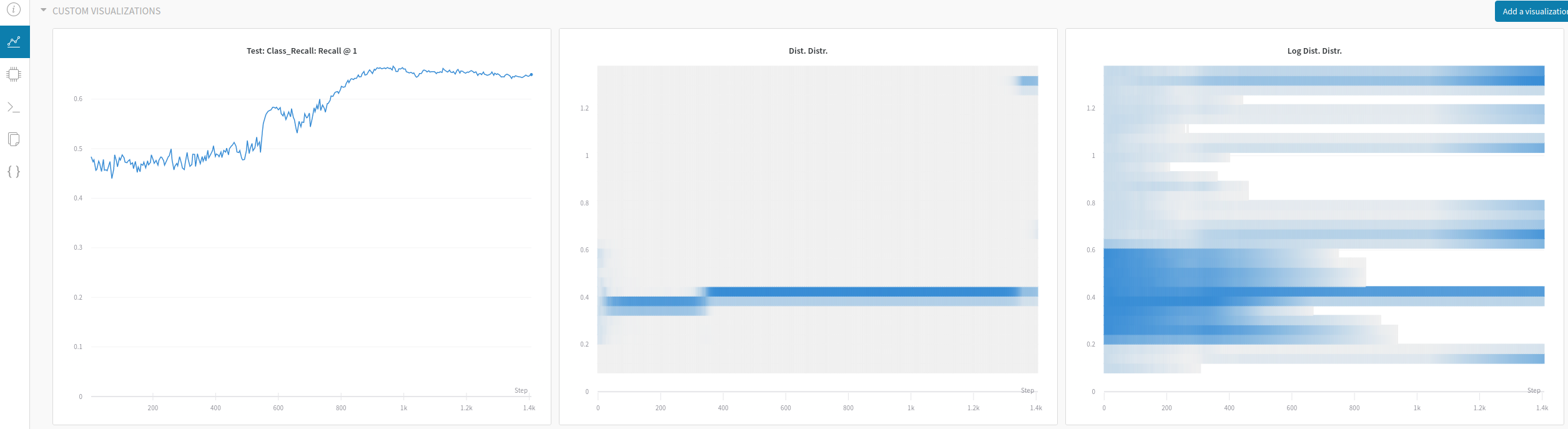

Results from the Paper

Ranked #17 on Metric Learning on CUB-200-2011 (using extra training data)

CUB-200-2011

Stanford Online Products
