Permutation invariance and uncertainty in multitemporal image super-resolution

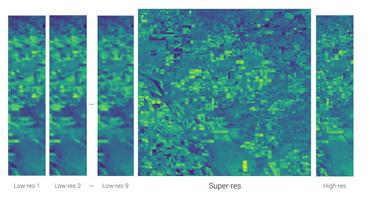

Recent advances have shown that deep neural networks can be extremely effective at super-resolving remote sensing imagery from a multitemporal collection of low-resolution images. However, existing models have neglected the issue of temporal permutation: the temporal ordering of the input images carries no relevant information for the super-resolution task, and models that depend on it make inefficient use of the often scarce ground truth data available for training. Thus, models ought not to learn feature extractors that rely on temporal ordering. In this paper, we show how building a model that is fully invariant to temporal permutation significantly improves performance and data efficiency. Moreover, we study how to quantify the uncertainty of the super-resolved image so that the final user is informed of the local quality of the product. We show how uncertainty correlates with temporal variation in the series, and how quantifying it further improves model performance. Experiments on the Proba-V challenge dataset show significant improvements over the state of the art without the need for self-ensembling, as well as improved data efficiency, reaching the performance of the challenge winner with just 25% of the training data.
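As a rough illustration of what invariance to temporal permutation means, the sketch below applies one shared feature function to every low-resolution image and then averages the results over the temporal axis. Mean pooling is a stand-in chosen for simplicity and is not claimed to be the architecture used in the paper; the names `extract_features` and `fuse` and the toy four-pixel images are assumptions made for this example.

```python
import random


def extract_features(image):
    """Hypothetical per-image feature extractor, shared by all time steps."""
    return [2.0 * pixel + 1.0 for pixel in image]


def fuse(images):
    """Average per-image features over the temporal axis.

    Averaging is a symmetric function, so the result does not depend on
    the order in which the images are supplied.
    """
    features = [extract_features(image) for image in images]
    count = len(features)
    width = len(features[0])
    return [sum(f[i] for f in features) / count for i in range(width)]


# Toy multitemporal series: 5 acquisitions of a 4-pixel "image".
random.seed(0)
series = [[random.random() for _ in range(4)] for _ in range(5)]

# The same acquisitions in a different temporal order.
shuffled = series[:]
random.shuffle(shuffled)

fused_original = fuse(series)
fused_shuffled = fuse(shuffled)

# Largest element-wise difference; only floating-point rounding can make it nonzero.
max_diff = max(abs(a - b) for a, b in zip(fused_original, fused_shuffled))
print(max_diff < 1e-9)
```

Because the fused output is the same for every ordering of the inputs, a model built this way has no ordering-dependent features to learn, which is the property the abstract argues helps when training data is scarce.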

PROBA-V
