RESA: Recurrent Feature-Shift Aggregator for Lane Detection

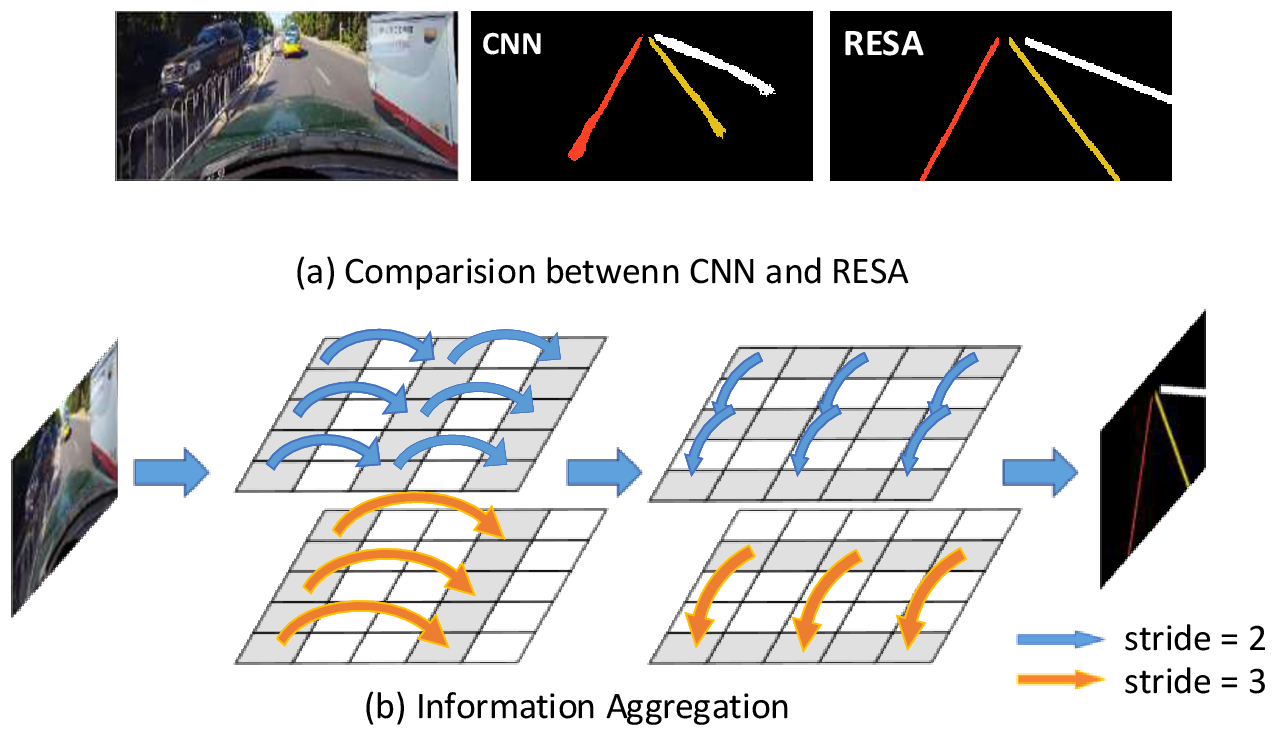

Lane detection is one of the most important tasks in self-driving. Due to various complex scenarios (e.g., severe occlusion and ambiguous lanes) and the sparse supervisory signals inherent in lane annotations, lane detection remains challenging. Thus, it is difficult for an ordinary convolutional neural network (CNN) trained on general scenes to capture subtle lane features from the raw image. In this paper, we present a novel module named REcurrent Feature-Shift Aggregator (RESA) to enrich lane features after preliminary feature extraction with an ordinary CNN. RESA takes advantage of the strong shape priors of lanes and captures spatial relationships of pixels across rows and columns. It recurrently shifts slices of the feature map in the vertical and horizontal directions, enabling each pixel to gather global information. By aggregating these sliced feature maps, RESA can accurately infer lanes in challenging scenarios with weak appearance cues. Moreover, we propose a Bilateral Up-Sampling Decoder that combines coarse-grained and fine-detailed features in the up-sampling stage, recovering a precise pixel-wise prediction from the low-resolution feature map. Our method achieves state-of-the-art results on two popular lane detection benchmarks (CULane and TuSimple). Code is available at: https://github.com/ZJULearning/resa.
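The recurrent feature shift described in the abstract can be illustrated with a minimal pure-Python sketch of one vertical pass, in which every row receives information from the row `s_k` positions below it (wrapping around). This is an illustration under stated assumptions, not the official implementation: it assumes a single-channel H×W feature map, a stride schedule `s_k = H // 2**(K - k)` for iterations `k = 0 … K-1`, a per-iteration 1-D kernel applied along the width, a ReLU, and a scaling factor `alpha`. The names `conv1d_same` and `resa_vertical_pass` are hypothetical; the real module works on multi-channel tensors and runs passes in four directions.

```python
from typing import List

Matrix = List[List[float]]


def conv1d_same(row: List[float], kernel: List[float]) -> List[float]:
    """Zero-padded 1-D cross-correlation along the width (odd-length kernel)."""
    half = len(kernel) // 2
    width = len(row)
    out = []
    for j in range(width):
        acc = 0.0
        for t, w in enumerate(kernel):
            jj = j + t - half
            if 0 <= jj < width:  # zero padding outside the row
                acc += w * row[jj]
        out.append(acc)
    return out


def resa_vertical_pass(x: Matrix, kernels: List[List[float]], alpha: float = 1.0) -> Matrix:
    """Hypothetical sketch of one RESA direction: K = len(kernels) recurrent shifts.

    At iteration k, row i is updated with ReLU(conv(row (i + s_k) mod H)),
    where s_k = H // 2**(K - k) (assumed schedule). All rows are updated in
    parallel from the previous iteration's values; the input is not mutated.
    """
    height = len(x)
    iters = len(kernels)
    for k, kernel in enumerate(kernels):
        stride = height // 2 ** (iters - k)
        if stride == 0:
            raise ValueError("height must be at least 2**len(kernels)")
        # Compute every row's message from the current map before applying any.
        messages = [conv1d_same(x[(i + stride) % height], kernel) for i in range(height)]
        x = [
            [v + alpha * max(0.0, m) for v, m in zip(x[i], messages[i])]
            for i in range(height)
        ]
    return x


if __name__ == "__main__":
    H, W = 8, 3
    feature = [[float(3 * r + c) for c in range(W)] for r in range(H)]
    identity = [0.0, 1.0, 0.0]  # passes each row through unchanged
    out = resa_vertical_pass(feature, [identity] * 3)
    print(out[0])  # [84.0, 92.0, 100.0]
```

With an identity kernel, non-negative inputs and H = 8, the strides are 1, 2 and 4, so after K = log2(8) = 3 iterations every row holds the column-wise sum of all eight rows. This shows how a logarithmic number of shifts lets each pixel aggregate information from its entire column; learned kernels and the ReLU make the real aggregation selective rather than a plain sum.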

