Robust Double-Encoder Network for RGB-D Panoptic Segmentation

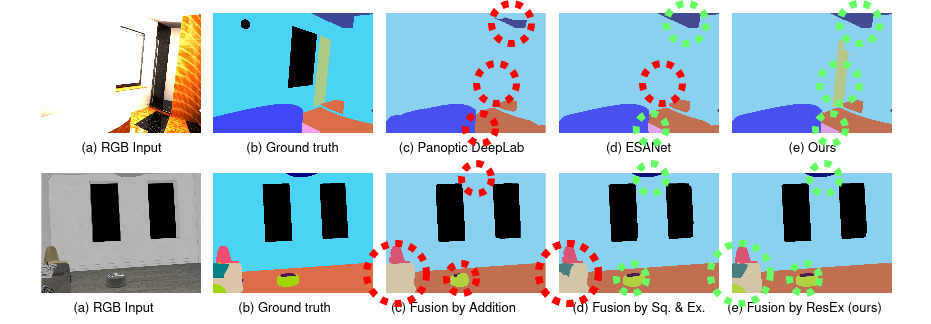

Perception is crucial for robots that act in real-world environments, as autonomous systems need to see and understand the world around them to act properly. Panoptic segmentation provides an interpretation of the scene by computing a pixelwise semantic label together with instance IDs. In this paper, we address panoptic segmentation using RGB-D data of indoor scenes. We propose a novel encoder-decoder neural network that processes RGB and depth separately through two encoders. The features of the individual encoders are progressively merged at different resolutions, such that the RGB features are enhanced using complementary depth information. We propose a novel merging approach called ResidualExcite, which reweighs each entry of the feature map according to its importance. With our double-encoder architecture, we are robust to missing cues. In particular, the same model can train and infer on RGB-D, RGB-only, and depth-only input data, without the need to train specialized models. We evaluate our method on publicly available datasets and show that our approach achieves superior results compared to other common approaches for panoptic segmentation.
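To make the fusion idea concrete, here is a minimal sketch of a per-entry gated residual merge in plain Python. It is not the authors' implementation. The abstract states only that ResidualExcite reweighs each entry of the feature map according to its importance and that depth features enhance the RGB features. The function name `residual_excite_fuse`, the scalar gate parameters `gate_weight` and `gate_bias`, the `scale` factor, and the handling of a missing depth input are all assumptions made for illustration. In a real network the gate would be learned by convolutional layers rather than by a single scalar weight and bias.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def residual_excite_fuse(rgb_feat, depth_feat, gate_weight=1.0,
                         gate_bias=0.0, scale=1.0):
    """Hypothetical per-entry gated residual fusion (illustrative only).

    rgb_feat, depth_feat: flattened feature maps as lists of floats.
    Each depth entry d gets its own gate sigmoid(gate_weight * d + gate_bias),
    and the gated depth entry is added to the matching RGB entry.
    Passing depth_feat=None models a missing depth cue: the RGB features
    pass through unchanged (an assumption, not taken from the paper).
    """
    if depth_feat is None:
        return list(rgb_feat)
    if len(rgb_feat) != len(depth_feat):
        raise ValueError("feature maps must have the same number of entries")
    fused = []
    for r, d in zip(rgb_feat, depth_feat):
        gate = sigmoid(gate_weight * d + gate_bias)  # per-entry importance
        fused.append(r + scale * gate * d)           # residual connection
    return fused


rgb = [0.5, -1.0, 2.0]
depth = [0.0, 1.0, -3.0]
fused_rgbd = residual_excite_fuse(rgb, depth)
fused_rgb_only = residual_excite_fuse(rgb, None)
```

Because the depth contribution enters as an additive residual, dropping it leaves the RGB path intact, which is one simple way a single model could tolerate a missing modality.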

ScanNet

Hypersim
