Self-supervised Scale Equivariant Network for Weakly Supervised Semantic Segmentation

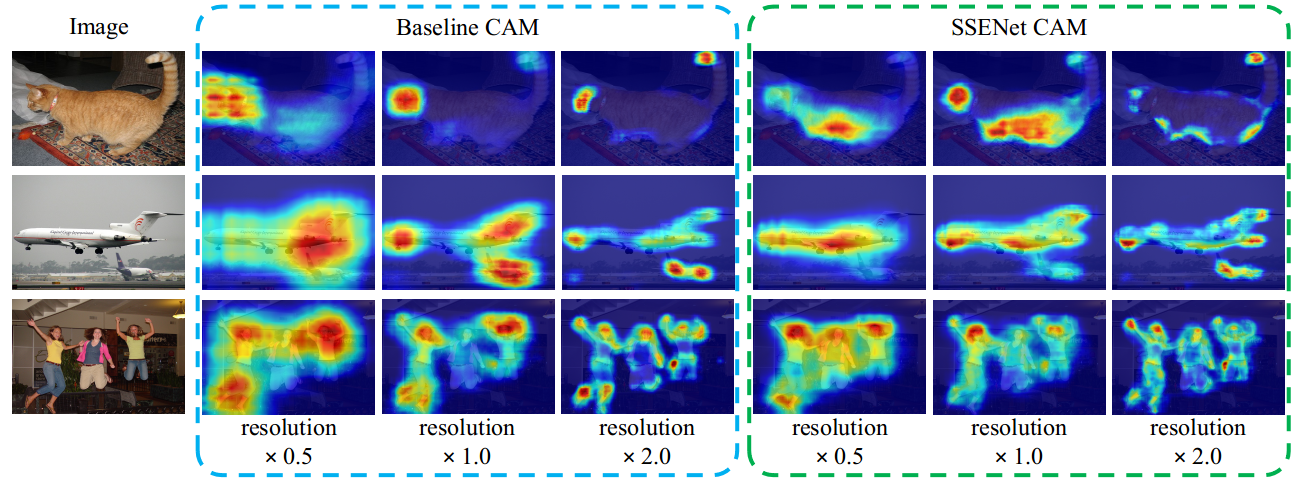

Weakly supervised semantic segmentation has attracted much research interest in recent years because of its low labeling cost. Most advanced algorithms follow the design principle of expanding and constraining the seed regions obtained from class activation maps (CAMs). As is well known, a conventional CAM tends to be incomplete or over-activated because the supervision is weak. Fortunately, we find that semantic segmentation is equivariant to spatial transformations, a property that can provide additional self-supervision to help weakly supervised learning. This work mainly explores the advantages of scale equivariant constraints for CAM generation, formulated as a self-supervised scale equivariant network (SSENet). Specifically, a novel scale equivariant regularization is designed to ensure the consistency of CAMs generated from the same input image at different resolutions. This regularization guides the whole network to learn more accurate class activations, and the regularized CAM can be embedded in most recent advanced weakly supervised semantic segmentation frameworks. Extensive experiments on the PASCAL VOC 2012 dataset demonstrate that our method achieves state-of-the-art performance, both quantitatively and qualitatively, for weakly supervised semantic segmentation. Code has been made available.
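The consistency idea described in the abstract can be illustrated with a minimal sketch. It is not the SSENet implementation: the 2x2 average pooling used for rescaling, the mean absolute difference used as the penalty, and the toy `cam_fn` callables are all assumptions made for illustration. The sketch compares "compute the CAM, then downscale" against "downscale, then compute the CAM" and returns their average disagreement.

```python
from typing import Callable, List

Map = List[List[float]]  # single-channel 2-D map stored as rows of floats


def downscale2x(m: Map) -> Map:
    """Halve the resolution by averaging each non-overlapping 2x2 block.

    Assumption: average pooling stands in for whatever rescaling is used.
    """
    h, w = len(m) // 2, len(m[0]) // 2
    return [
        [
            (m[2 * i][2 * j] + m[2 * i][2 * j + 1]
             + m[2 * i + 1][2 * j] + m[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(w)
        ]
        for i in range(h)
    ]


def scale_equivariance_loss(cam_fn: Callable[[Map], Map], image: Map) -> float:
    """Mean absolute gap between scale(cam(x)) and cam(scale(x)).

    Assumption: an L1-style penalty; the source does not state the loss form.
    """
    cam_then_scale = downscale2x(cam_fn(image))
    scale_then_cam = cam_fn(downscale2x(image))
    diffs = [
        abs(a - b)
        for row_a, row_b in zip(cam_then_scale, scale_then_cam)
        for a, b in zip(row_a, row_b)
    ]
    return sum(diffs) / len(diffs)


# Hypothetical stand-ins for a CAM-producing network (illustration only).
def linear_cam(m: Map) -> Map:
    # Pointwise linear map: commutes with averaging, so it is scale equivariant.
    return [[2.0 * v for v in row] for row in m]


def squared_cam(m: Map) -> Map:
    # Pointwise nonlinear map: does not commute with averaging.
    return [[v * v for v in row] for row in m]


image: Map = [
    [0.0, 2.0, 1.0, 1.0],
    [2.0, 0.0, 1.0, 1.0],
    [4.0, 4.0, 0.0, 0.0],
    [4.0, 4.0, 0.0, 8.0],
]

loss_linear = scale_equivariance_loss(linear_cam, image)
loss_squared = scale_equivariance_loss(squared_cam, image)
```

In this toy setting the linear map incurs no penalty while the squared map does, which is the kind of signal a regularizer of this type would feed back to the network during training.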


ImageNet
