Span-based Joint Entity and Relation Extraction with Transformer Pre-training

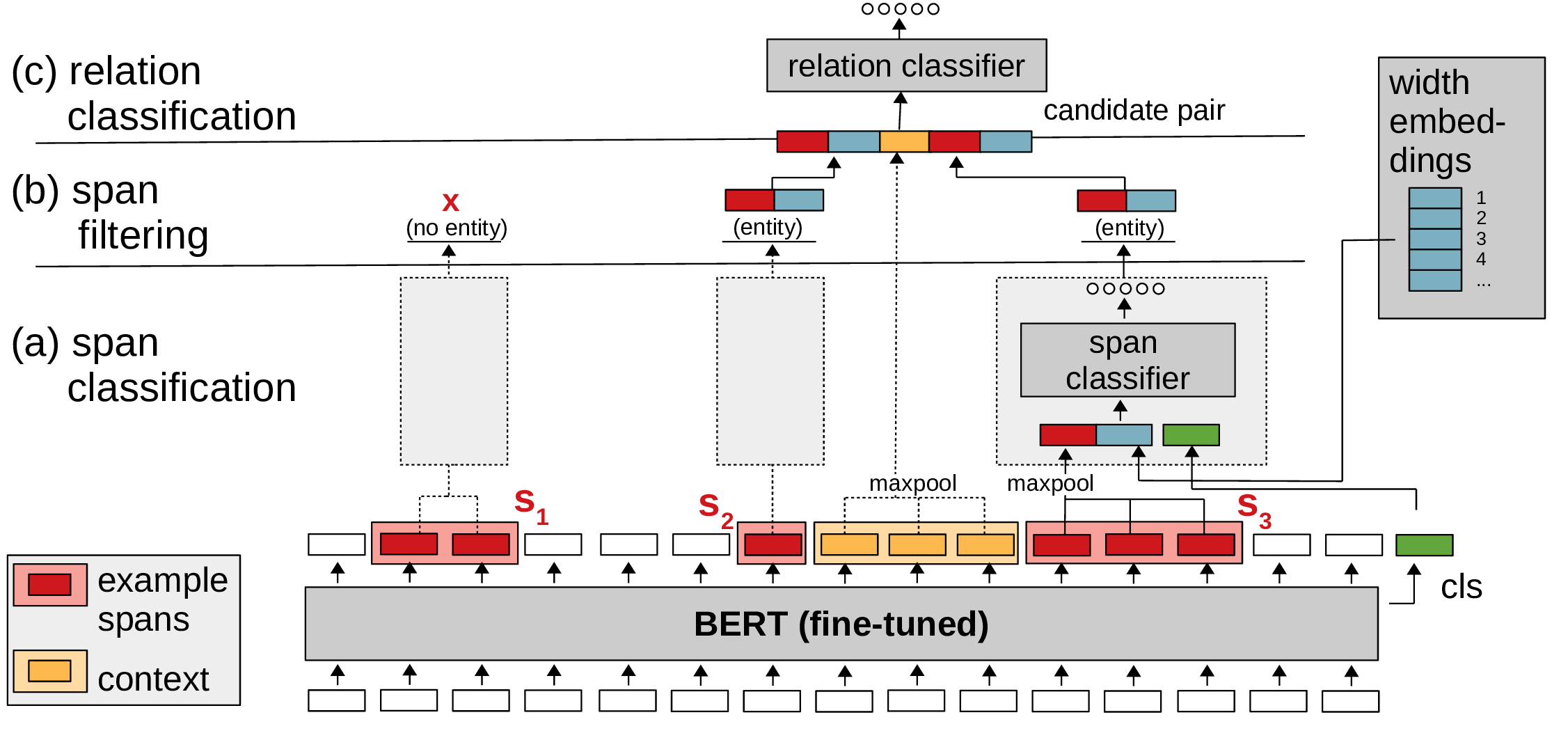

We introduce SpERT, an attention model for span-based joint entity and relation extraction. Our key contribution is lightweight reasoning on BERT embeddings, which features entity recognition and filtering, as well as relation classification with a localized, marker-free context representation. The model is trained using strong within-sentence negative samples, which are efficiently extracted in a single BERT pass. These aspects facilitate a search over all spans in the sentence. In ablation studies, we demonstrate the benefits of pre-training, strong negative sampling, and localized context. Our model outperforms prior work by up to 2.6% F1 score on several datasets for joint entity and relation extraction.
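The span search and within-sentence negative sampling mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the `max_width` limit, and the sample count `k` are hypothetical choices made for illustration, and the sketch covers only entity spans, not relation pairs or the BERT encoding itself.

```python
import random
from typing import List, Tuple

# A span is (start, end) in token indices, with end exclusive.
Span = Tuple[int, int]


def enumerate_spans(n_tokens: int, max_width: int) -> List[Span]:
    """List every span of 1..max_width tokens in a sentence of n_tokens."""
    return [
        (start, end)
        for start in range(n_tokens)
        for end in range(start + 1, min(start + max_width, n_tokens) + 1)
    ]


def sample_negative_spans(
    n_tokens: int,
    gold_spans: List[Span],
    max_width: int,
    k: int,
    rng: random.Random,
) -> List[Span]:
    """Draw up to k spans from the same sentence that are not gold entities.

    Assumption: negatives are sampled uniformly; the paper may use a
    different scheme.
    """
    gold = set(gold_spans)
    candidates = [s for s in enumerate_spans(n_tokens, max_width) if s not in gold]
    return rng.sample(candidates, min(k, len(candidates)))


# Example: a 5-token sentence with one gold entity covering tokens 0..1.
negatives = sample_negative_spans(5, [(0, 2)], max_width=3, k=4, rng=random.Random(0))
```

Because all candidate spans come from one sentence, their representations could in principle be built from a single encoder pass over that sentence, which is the efficiency point the abstract makes.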


Results from the Paper

Ranked #1 on Joint Entity and Relation Extraction on SciERC (Cross Sentence metric)
