Targeted Nonlinear Adversarial Perturbations in Images and Videos

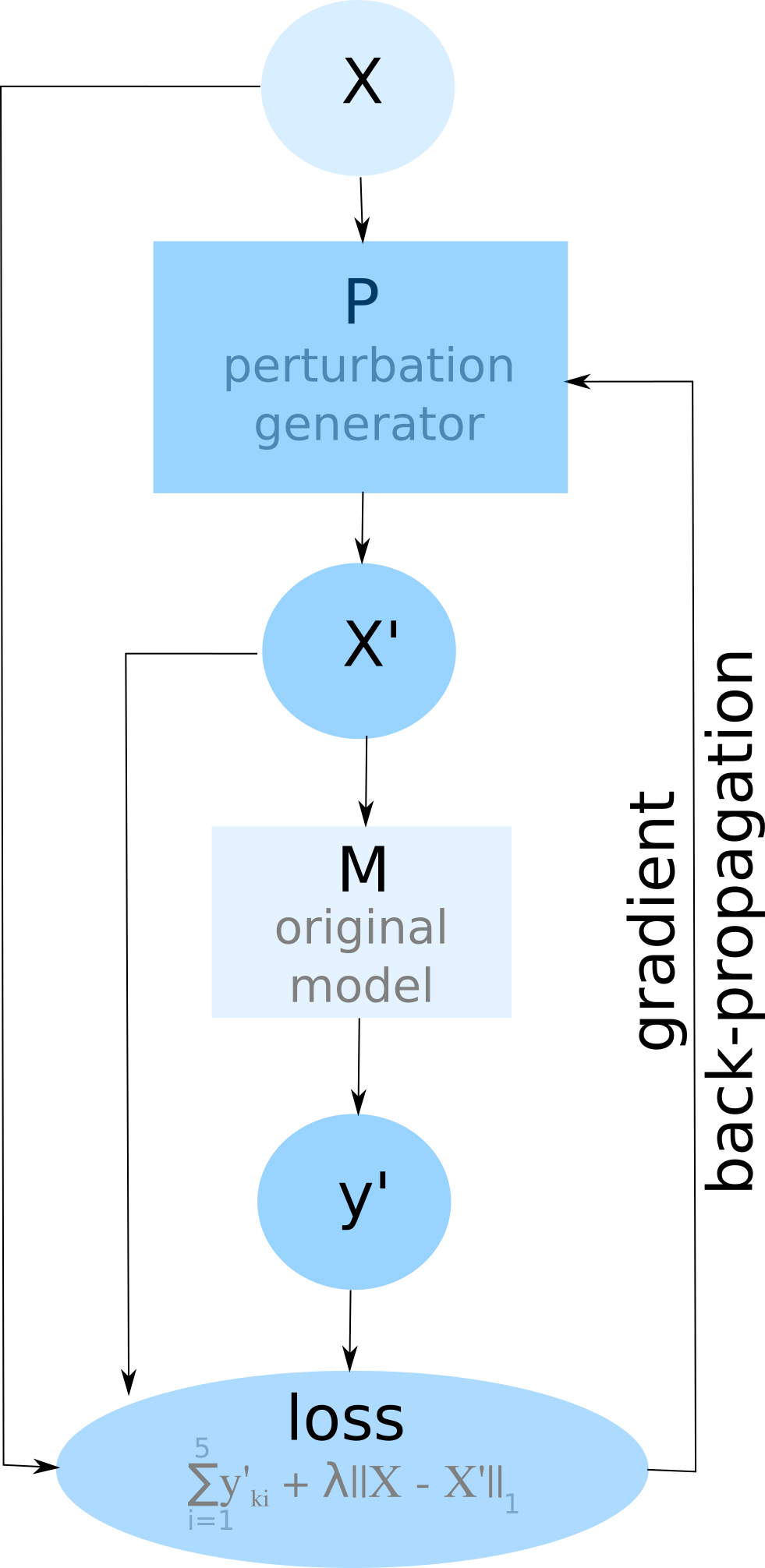

We introduce a method for learning adversarial perturbations targeted to individual images or videos. The learned perturbations are sparse yet retain a high level of feature detail. The extracted perturbations therefore enable a form of object or action recognition and provide insight into which features the studied deep neural network models consider important when reaching their classification decisions. From an adversarial point of view, the sparse perturbations successfully cause the models to misclassify, even though the perturbed samples still visibly belong to their original class. We discuss this in terms of a prospective data augmentation scheme. The sparse yet high-quality perturbations may also be leveraged for image or video compression.
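The abstract does not specify how the perturbations are learned, so the following is only a minimal sketch of the general idea of a sparse, per-sample adversarial perturbation, not the authors' method. It assumes a toy linear softmax classifier in place of a deep network, gradient ascent on the cross-entropy loss, and an L1 proximal (soft-thresholding) step to encourage sparsity. All names (`predict`, `learn_perturbation`, `W`, `b`, `x`) and all numeric values are hypothetical.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def predict(W, b, x):
    """Class probabilities of a toy linear softmax classifier (assumed model)."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(logits)

def soft_threshold(v, t):
    """Proximal operator of t * |v|: shrink v toward zero by t."""
    return math.copysign(max(abs(v) - t, 0.0), v)

def learn_perturbation(W, b, x, label, steps=200, lr=0.1, l1=0.3):
    """Learn a perturbation for one sample x that flips the predicted class.

    Each iteration takes a gradient ascent step on the cross-entropy loss of
    the true label, then soft-thresholds so that weakly useful coordinates
    stay exactly zero. The loop stops as soon as the sample is misclassified.
    """
    delta = [0.0] * len(x)
    for _ in range(steps):
        p = predict(W, b, [xi + di for xi, di in zip(x, delta)])
        if p.index(max(p)) != label:
            break  # the model is already fooled
        # Gradient of -log p[label] w.r.t. the input: sum_k (p_k - y_k) * W[k]
        grad = [
            sum((p[k] - (1.0 if k == label else 0.0)) * W[k][j] for k in range(len(W)))
            for j in range(len(x))
        ]
        delta = [soft_threshold(d + lr * g, lr * l1) for d, g in zip(delta, grad)]
    return delta

# Hypothetical two-class model that relies mostly on the first input feature.
W = [[2.0, 0.1, 0.0, 0.0], [-2.0, -0.1, 0.0, 0.0]]
b = [0.0, 0.0]
x = [0.5, 0.5, 0.5, 0.5]   # one "image" with four pixels, true class 0

delta = learn_perturbation(W, b, x, label=0)
adversarial = [xi + di for xi, di in zip(x, delta)]
print("perturbation:", delta)
print("prediction before:", predict(W, b, x))
print("prediction after: ", predict(W, b, adversarial))
```

In this toy setting the perturbation concentrates on the single feature the classifier depends on most. This loosely mirrors the abstract's observation that sparse perturbations reveal which features a model considers important.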
