Text-to-SQL Empowered by Large Language Models: A Benchmark Evaluation

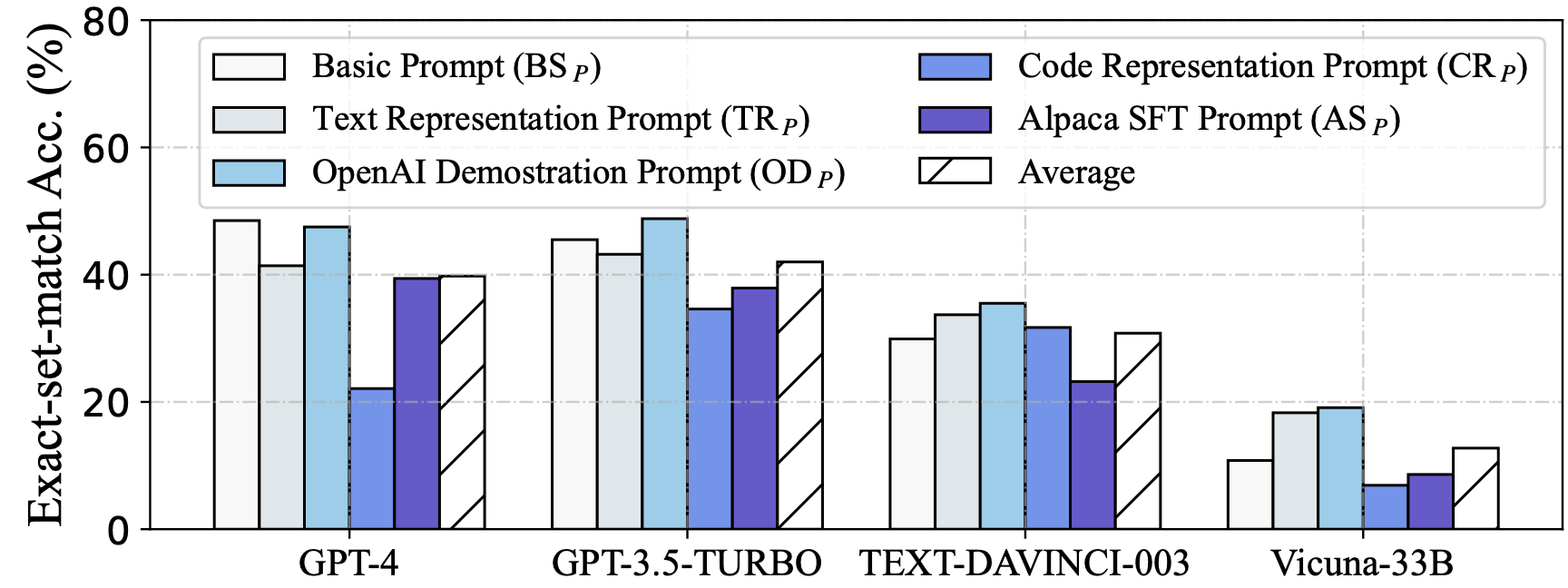

Large language models (LLMs) have emerged as a new paradigm for the Text-to-SQL task. However, the absence of a systematic benchmark inhibits the design of effective, efficient and economic LLM-based Text-to-SQL solutions. To address this challenge, in this paper we first conduct a systematic and extensive comparison of existing prompt engineering methods, covering question representation, example selection and example organization, and use the experimental results to elaborate their pros and cons. Based on these findings, we propose a new integrated solution, named DAIL-SQL, which refreshes the Spider leaderboard with 86.6% execution accuracy and sets a new bar. To explore the potential of open-source LLMs, we investigate them in various scenarios and further enhance their performance with supervised fine-tuning. Our explorations highlight the potential of open-source LLMs in Text-to-SQL, as well as the advantages and disadvantages of supervised fine-tuning. Additionally, towards an efficient and economic LLM-based Text-to-SQL solution, we emphasize token efficiency in prompt engineering and compare prior studies under this metric. We hope that our work provides a deeper understanding of Text-to-SQL with LLMs, and inspires further investigations and broad applications.


| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Text-To-SQL | BIRD (BIg Bench for LaRge-scale Database Grounded Text-to-SQL Evaluation) | DAIL-SQL + GPT-4 | Execution Accuracy % (Test) | 57.41 | #7 |
| Text-To-SQL | BIRD (BIg Bench for LaRge-scale Database Grounded Text-to-SQL Evaluation) | DAIL-SQL + GPT-4 | Execution Accuracy % (Dev) | 54.76 | #7 |
| Text-To-SQL | Spider | DAIL-SQL + GPT-4 + Self-Consistency | Exact Match Accuracy (Dev) | 74.4 | #5 |
| Text-To-SQL | Spider | DAIL-SQL + GPT-4 + Self-Consistency | Execution Accuracy (Dev) | 84.4 | #1 |
| Text-To-SQL | Spider | DAIL-SQL + GPT-4 + Self-Consistency | Execution Accuracy (Test) | 86.6 | #2 |
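
The results above report execution accuracy alongside exact match accuracy. As a rough illustration of why the two can differ, the sketch below scores a predicted query as correct when it returns the same rows as the gold query, even if the SQL text is different. This is a simplified stand-in, not the official Spider or BIRD evaluation script; the `singer` table, the query pairs, and the function names `execution_match` and `execution_accuracy` are all hypothetical.

```python
import sqlite3
from collections import Counter


def execution_match(conn, predicted_sql, gold_sql):
    """Return True if both queries yield the same multiset of rows.

    Simplifying assumption: row order is ignored, and a prediction that
    fails to execute counts as a miss.
    """
    try:
        predicted_rows = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False
    gold_rows = conn.execute(gold_sql).fetchall()
    return Counter(predicted_rows) == Counter(gold_rows)


def execution_accuracy(conn, pairs):
    """Percentage of (predicted, gold) pairs whose results match."""
    matches = sum(execution_match(conn, pred, gold) for pred, gold in pairs)
    return 100.0 * matches / len(pairs)


# Hypothetical toy database, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE singer (id INTEGER PRIMARY KEY, name TEXT, age INTEGER);
INSERT INTO singer VALUES (1, 'Ann', 30), (2, 'Bob', 45), (3, 'Cy', 45);
""")

pairs = [
    # Different SQL text, identical result on this database: counted as correct.
    ("SELECT name FROM singer WHERE age > 40",
     "SELECT name FROM singer WHERE age >= 45"),
    # Identical queries.
    ("SELECT count(*) FROM singer", "SELECT count(*) FROM singer"),
    # Prediction references a table that does not exist: counted as a miss.
    ("SELECT name FROM singers", "SELECT name FROM singer"),
    # Prediction executes but returns the wrong rows.
    ("SELECT name FROM singer WHERE age < 40",
     "SELECT name FROM singer WHERE age > 40"),
]

score = execution_accuracy(conn, pairs)
print(f"Execution accuracy: {score:.1f}%")
```

The first pair would fail a strict string comparison yet passes here because both queries return the same rows, which is one reason an execution-based score can be higher than an exact-match score on the same predictions.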