Time Masking: Leveraging Temporal Information in Spoken Dialogue Systems

In a spoken dialogue system, dialogue state tracker (DST) components track the state of the conversation by updating, for the current user turn, a distribution over the values associated with each tracked slot, using the interactions up to that point. Much of the previous work has relied on modeling the natural order of the conversation, using distance-based offsets as an approximation of time. In this work, we hypothesize that leveraging the wall-clock temporal difference between turns is crucial for finer-grained control of dialogue scenarios. We develop a novel approach that applies a time mask, based on the wall-clock time difference, to the associated slot embeddings, and empirically demonstrate that our proposed approach outperforms existing approaches that leverage distance offsets on both an internal benchmark dataset and DSTC2.
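The abstract does not specify the functional form of the time mask, so the following is only a minimal sketch of the general idea under an explicit assumption: the mask is an exponential decay exp(-Δt / τ) of the wall-clock gap Δt (in seconds) between a past turn and the current turn, applied elementwise to that turn's slot embedding. The names `time_mask`, `apply_time_mask`, `distance_offset_weight`, and the parameters `tau` and `decay` are hypothetical illustrations, not the authors' implementation. The sketch contrasts this with a distance-offset weight that depends only on how many turns ago something was said.

```python
import math
from typing import List, Sequence


def time_mask(delta_seconds: float, dim: int, tau: float = 60.0) -> List[float]:
    """Hypothetical time mask with one decay factor in (0, 1] per embedding dimension.

    ASSUMPTION: exponential decay exp(-delta / tau), shared by every dimension.
    The paper's actual mask form is not given in the abstract.
    """
    if delta_seconds < 0:
        raise ValueError("delta_seconds must be non-negative")
    if tau <= 0:
        raise ValueError("tau must be positive")
    weight = math.exp(-delta_seconds / tau)
    return [weight] * dim


def apply_time_mask(
    slot_embedding: Sequence[float],
    turn_time: float,
    current_time: float,
    tau: float = 60.0,
) -> List[float]:
    """Scale a past turn's slot embedding by the mask for its wall-clock age."""
    mask = time_mask(current_time - turn_time, len(slot_embedding), tau)
    return [e * m for e, m in zip(slot_embedding, mask)]


def distance_offset_weight(turn_index: int, current_index: int, decay: float = 0.5) -> float:
    """Baseline: the weight depends only on the number of turns between the two."""
    return decay ** (current_index - turn_index)


if __name__ == "__main__":
    emb = [1.0, 2.0, -0.5]
    # In both scenarios the earlier turn is exactly one turn back,
    # so the distance-offset weight cannot tell them apart.
    print("distance-offset weight:", distance_offset_weight(3, 4))
    # The time mask, however, separates a turn from 5 seconds ago
    # from one spoken 10 minutes ago.
    print("5 s ago:   ", apply_time_mask(emb, turn_time=595.0, current_time=600.0))
    print("600 s ago: ", apply_time_mask(emb, turn_time=0.0, current_time=600.0))
```

The design point the sketch illustrates is that two turns at the same distance offset can receive very different weights once wall-clock time is taken into account, which is the kind of finer-grained control the abstract hypothesizes; how the mask is parameterized or learned in the actual model is not stated here.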

WS 2019

Results from the Paper

Ranked #6 on

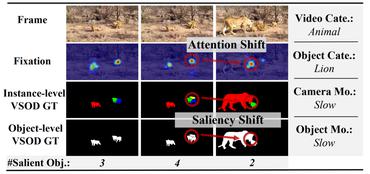

Video Salient Object Detection

on SegTrack v2

(using extra training data)

SegTrack-v2