Training-and-prompt-free General Painterly Harmonization Using Image-wise Attention Sharing

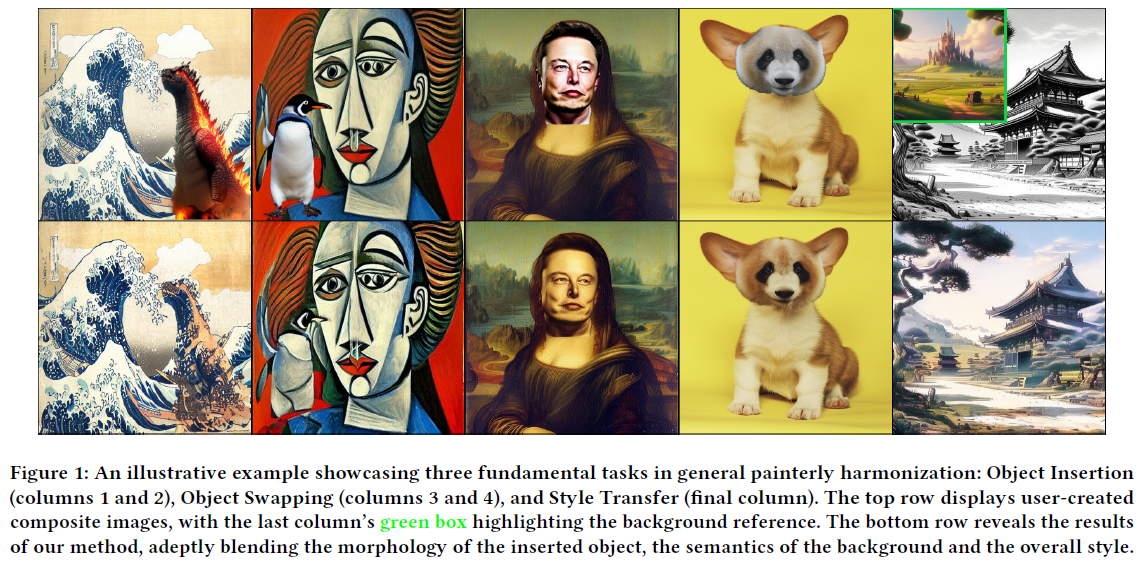

Painterly Image Harmonization aims at seamlessly blending disparate visual elements within a single coherent image. However, previous approaches often encounter significant limitations due to training data constraints, the need for time-consuming fine-tuning, or reliance on additional prompts. To surmount these hurdles, we design a Training-and-prompt-Free General Painterly Harmonization method using image-wise attention sharing (TF-GPH), which integrates a novel "share-attention module". This module redefines the traditional self-attention mechanism by allowing for comprehensive image-wise attention, facilitating the use of a state-of-the-art pretrained latent diffusion model without the typical training data limitations. Additionally, we further introduce "similarity reweighting" mechanism enhances performance by effectively harnessing cross-image information, surpassing the capabilities of fine-tuning or prompt-based approaches. At last, we recognize the deficiencies in existing benchmarks and propose the "General Painterly Harmonization Benchmark", which employs range-based evaluation metrics to more accurately reflect real-world application. Extensive experiments demonstrate the superior efficacy of our method across various benchmarks. The code and web demo are available at https://github.com/BlueDyee/TF-GPH.

PDF Abstract

MS COCO

MS COCO