Uni3D: Exploring Unified 3D Representation at Scale

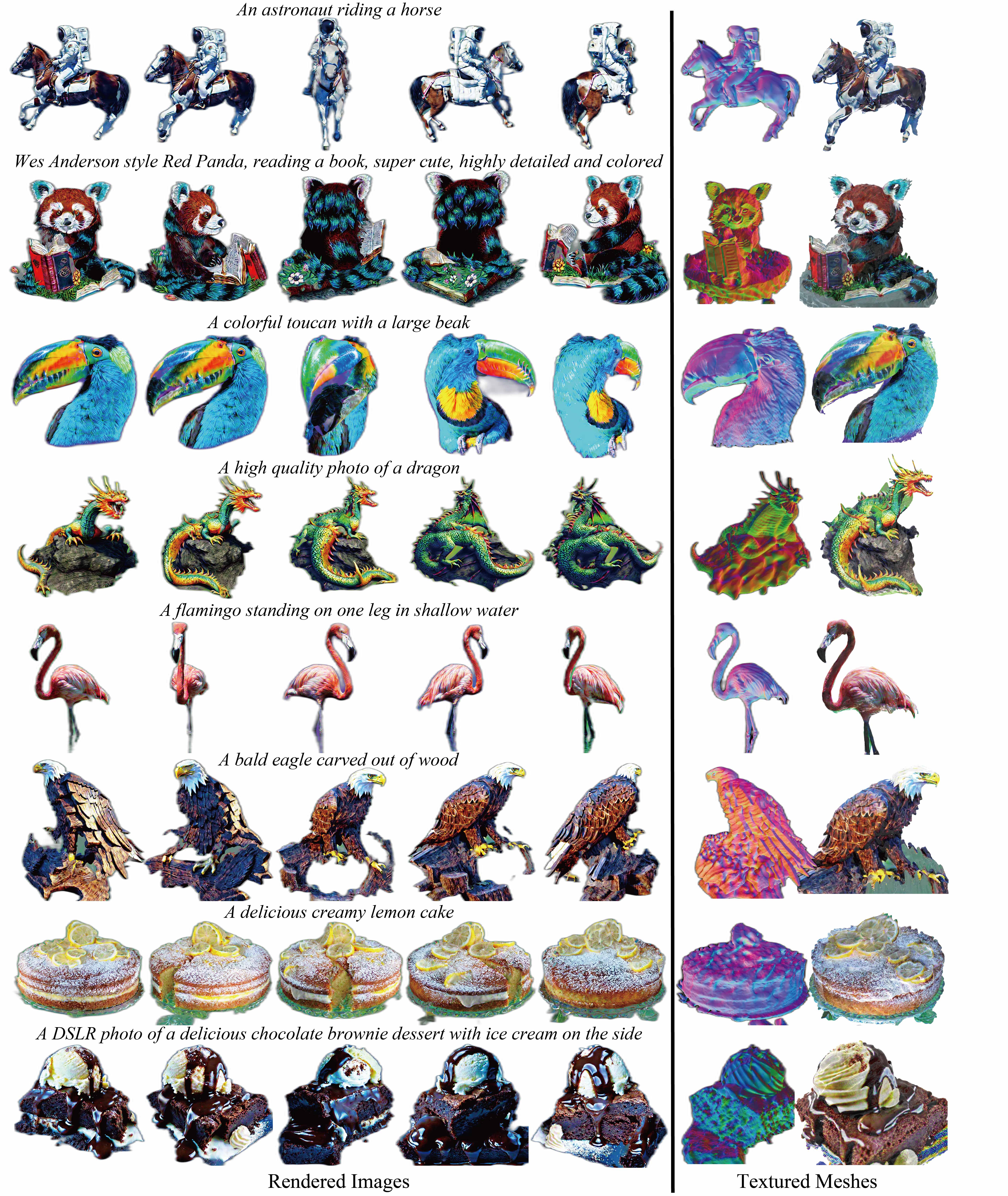

Scaling up representations for images or text has been extensively investigated in the past few years and has led to revolutions in learning vision and language. However, scalable representations for 3D objects and scenes remain relatively unexplored. In this work, we present Uni3D, a 3D foundation model that explores unified 3D representations at scale. Uni3D uses a 2D-initialized ViT, pretrained end-to-end to align 3D point-cloud features with image-text aligned features. With this simple architecture and pretext task, Uni3D can leverage abundant pretrained 2D models as initialization and image-text aligned models as targets, bringing the potential of 2D models and their scaling strategies to the 3D world. We efficiently scale Uni3D up to one billion parameters and set new records on a broad range of 3D tasks, such as zero-shot classification, few-shot classification, open-world understanding and part segmentation. We show that the strong Uni3D representation also enables applications such as 3D painting and retrieval in the wild. We believe that Uni3D offers a new direction for exploring both the scaling and the efficiency of representations in the 3D domain.
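The abstract describes the pretext task only as aligning 3D point-cloud features with image-text aligned features. The sketch below assumes a CLIP-style symmetric contrastive (InfoNCE) objective, a common way to implement such alignment; the function names, the temperature of 0.07 and the 512-dimensional embeddings are illustrative assumptions, not Uni3D's released code. It also shows why alignment enables zero-shot classification: a shape is assigned the class whose text embedding has the highest cosine similarity with the shape's embedding.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-8):
    # Scale each row to unit length so dot products become cosine similarities.
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)

def log_softmax(logits, axis=-1):
    # Numerically stable log-softmax along the given axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_alignment_loss(point_feats, target_feats, temperature=0.07):
    """Symmetric InfoNCE loss (an assumed, CLIP-style objective).

    point_feats:  (B, D) features from the 3D encoder being trained.
    target_feats: (B, D) frozen image or text features; row i is the
                  positive partner of point_feats[i], all other rows are negatives.
    """
    p = l2_normalize(point_feats)
    t = l2_normalize(target_feats)
    logits = p @ t.T / temperature            # (B, B): logits[i, j] = sim(point i, target j)
    idx = np.arange(logits.shape[0])
    loss_p2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # each point picks its target
    loss_t2p = -log_softmax(logits, axis=0)[idx, idx].mean()  # each target picks its point
    return 0.5 * (loss_p2t + loss_t2p)

def zero_shot_classify(point_feats, class_text_feats):
    """Return, for each shape, the index of the most similar class text embedding."""
    sims = l2_normalize(point_feats) @ l2_normalize(class_text_feats).T
    return sims.argmax(axis=1)

# Usage with synthetic embeddings standing in for real encoder outputs.
rng = np.random.default_rng(0)
text = rng.normal(size=(4, 512))                     # hypothetical class-prompt embeddings
aligned = text + 0.01 * rng.normal(size=(4, 512))    # 3D features already well aligned
unaligned = rng.normal(size=(4, 512))                # 3D features before training
print(contrastive_alignment_loss(aligned, text) < contrastive_alignment_loss(unaligned, text))  # True
print(zero_shot_classify(aligned, text))             # [0 1 2 3]
```

Minimizing this loss pulls each 3D embedding toward its paired image or text embedding and away from the others in the batch. Because the targets come from a frozen image-text model, the trained 3D encoder inherits that model's embedding space, which is what makes text-prompted zero-shot classification possible without 3D class labels.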


Datasets

ShapeNet

ModelNet

ScanNet

ScanObjectNN

Objaverse

Results from the Paper

Ranked #1 on Zero-shot 3D classification on Objaverse LVIS (using extra training data)