Visual Compositional Learning for Human-Object Interaction Detection

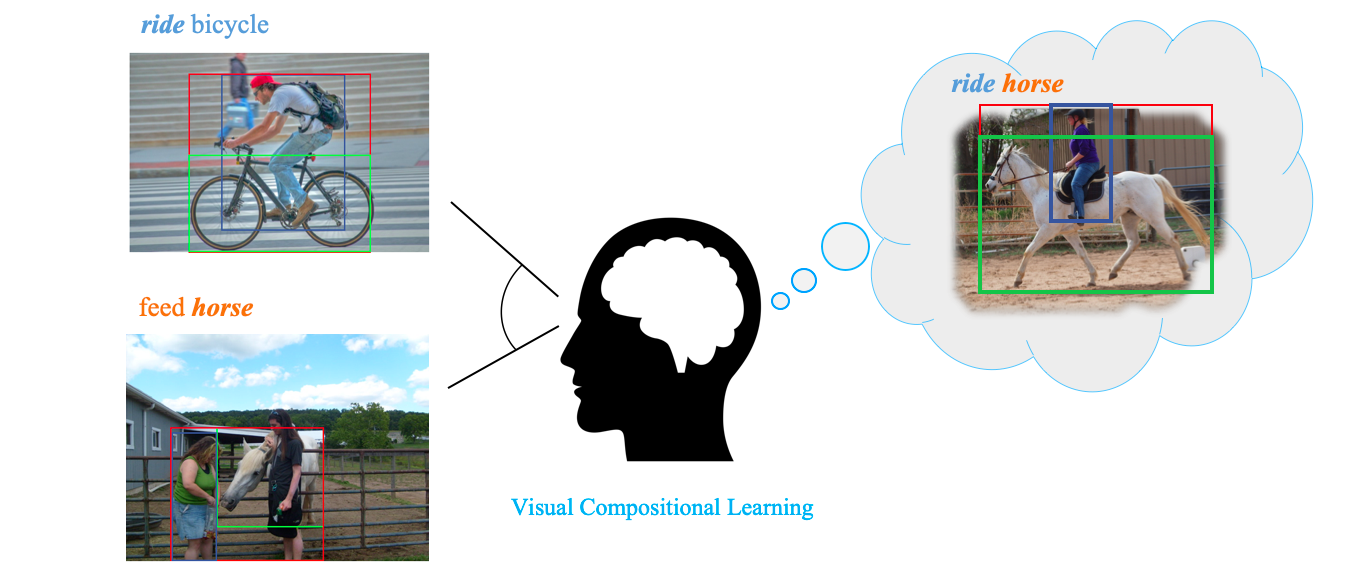

Human-Object interaction (HOI) detection aims to localize and infer relationships between human and objects in an image. It is challenging because an enormous number of possible combinations of objects and verbs types forms a long-tail distribution. We devise a deep Visual Compositional Learning (VCL) framework, which is a simple yet efficient framework to effectively address this problem. VCL first decomposes an HOI representation into object and verb specific features, and then composes new interaction samples in the feature space via stitching the decomposed features. The integration of decomposition and composition enables VCL to share object and verb features among different HOI samples and images, and to generate new interaction samples and new types of HOI, and thus largely alleviates the long-tail distribution problem and benefits low-shot or zero-shot HOI detection. Extensive experiments demonstrate that the proposed VCL can effectively improve the generalization of HOI detection on HICO-DET and V-COCO and outperforms the recent state-of-the-art methods on HICO-DET. Code is available at https://github.com/zhihou7/VCL.

PDF Abstract ECCV 2020 PDF ECCV 2020 Abstract

MS COCO

MS COCO

HICO-DET

HICO-DET

V-COCO

V-COCO