Weakly Supervised Semantic Segmentation by Pixel-to-Prototype Contrast

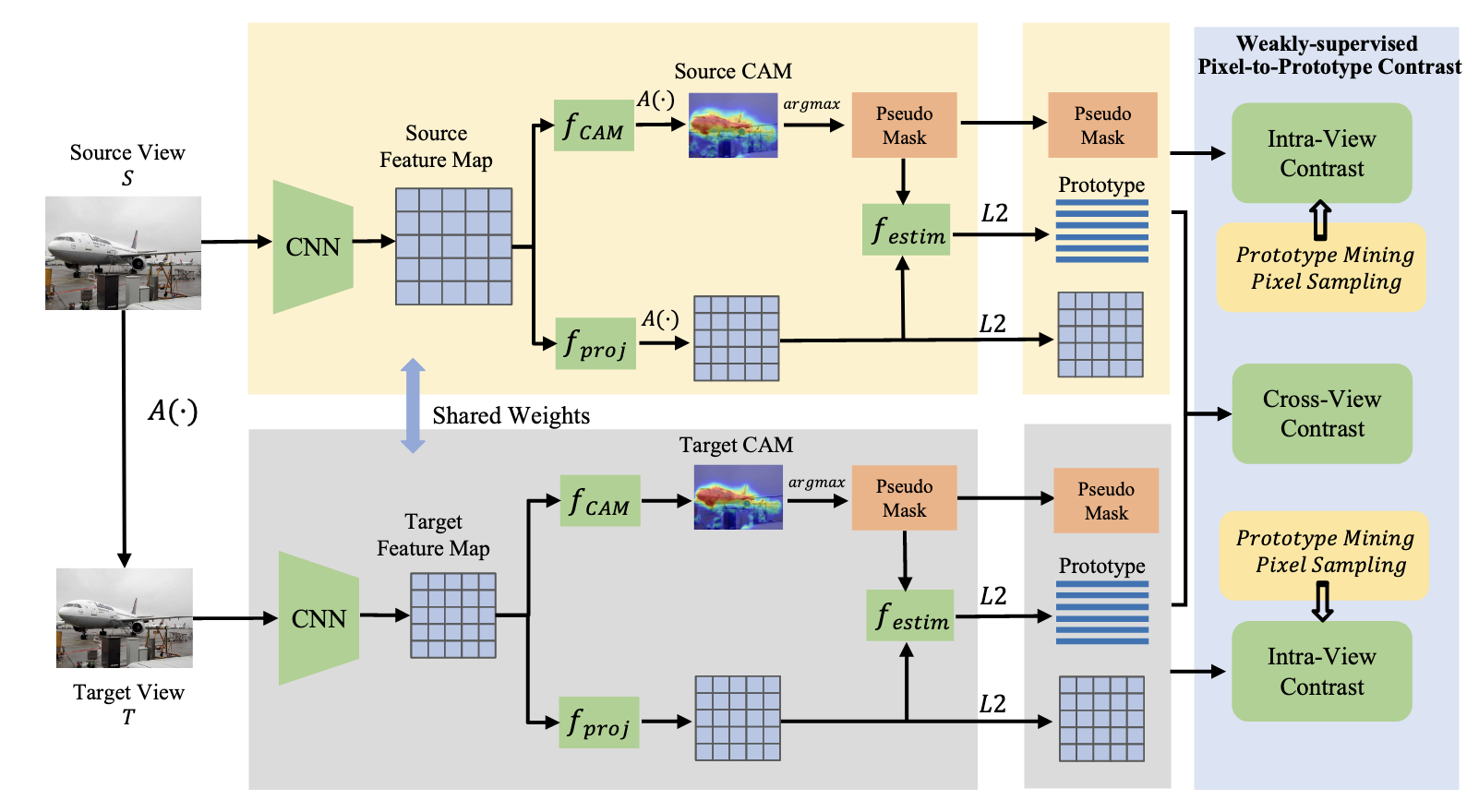

Though image-level weakly supervised semantic segmentation (WSSS) has achieved great progress with Class Activation Maps (CAMs) as the cornerstone, the large supervision gap between classification and segmentation still hampers the model from generating more complete and precise pseudo masks for segmentation. In this study, we propose weakly supervised pixel-to-prototype contrast, which provides pixel-level supervisory signals to narrow the gap. Guided by two intuitive priors, our method is executed both across different views and within each single view of an image, aiming to impose cross-view feature semantic consistency regularization and to promote intra-class compactness and inter-class dispersion of the feature space. Our method can be seamlessly incorporated into existing WSSS models without any changes to the base networks and does not incur any extra inference burden. Extensive experiments show that our method consistently improves two strong baselines by large margins, demonstrating its effectiveness. Specifically, built on top of SEAM, we improve the initial seed mIoU on PASCAL VOC 2012 from 55.4% to 61.5%. Moreover, armed with our method, we increase the segmentation mIoU of EPS from 70.8% to 73.6%, achieving a new state of the art.
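To make the idea of pixel-to-prototype contrast concrete, the following is a minimal sketch and not the authors' implementation. It assumes that each class prototype is a CAM-confidence-weighted mean of the pixel embeddings pseudo-labelled with that class, and that the contrast is an InfoNCE-style loss over cosine similarities. The function names (`class_prototypes`, `pixel_to_prototype_loss`), the temperature value, and the toy data are all illustrative assumptions that do not come from the abstract.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (an all-zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def class_prototypes(features, cam_scores, num_classes):
    """Assumed prototype rule: for each class, the CAM-score-weighted sum of
    the embeddings of pixels whose highest CAM score is that class,
    L2-normalized afterwards."""
    dim = len(features[0])
    sums = [[0.0] * dim for _ in range(num_classes)]
    for feat, scores in zip(features, cam_scores):
        label = max(range(num_classes), key=lambda c: scores[c])
        weight = scores[label]
        for d in range(dim):
            sums[label][d] += weight * feat[d]
    return [l2_normalize(s) for s in sums]


def pixel_to_prototype_loss(features, pseudo_labels, prototypes, temperature=0.1):
    """InfoNCE-style loss: each pixel embedding is pulled toward the prototype
    of its pseudo-label and pushed away from the other prototypes.
    The temperature of 0.1 is an illustrative assumption."""
    total = 0.0
    for feat, label in zip(features, pseudo_labels):
        f = l2_normalize(feat)
        logits = [sum(a * b for a, b in zip(f, p)) / temperature for p in prototypes]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denominator - logits[label]
    return total / len(features)


# Toy data: four "pixels" with 2-D embeddings and CAM scores for two classes.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
cam_scores = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
pseudo_labels = [0, 0, 1, 1]

prototypes = class_prototypes(features, cam_scores, num_classes=2)
loss_consistent = pixel_to_prototype_loss(features, pseudo_labels, prototypes)
loss_swapped = pixel_to_prototype_loss(features, [1, 1, 0, 0], prototypes)
print(loss_consistent, loss_swapped)
```

Under these assumptions, the within-view case uses prototypes estimated from the same view as the pixels, while a cross-view variant would pass prototypes estimated from a different view of the same image to `pixel_to_prototype_loss`. Because the loss is a training-time term only, a sketch like this is consistent with the claim that no extra inference cost is incurred.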

CVPR 2022

Datasets

Results from the Paper

Ranked #14 on Weakly-Supervised Semantic Segmentation on PASCAL VOC 2012 test (using extra training data)

PASCAL VOC

VOC 2012
